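In the earlier article 《云安全攻防入门》 (Introduction to Cloud Security Attack and Defense), we covered the basic concepts of cloud computing and the fundamentals of cloud native. This article focuses on k8s cloud-native attack/defense labs and hands-on practice.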

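I. Setting Up a k8s Lab Environment (Metarget)

1.1 Overview of K8s Setup Options

Step-by-step manual setup following the official documentation

minikube

kind

Metarget (recommended)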

1.2 Metarget

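1.2.1 Introduction

Metarget is a framework for automatically building vulnerable infrastructure, with a focus on cloud-native security. Its main purpose is to provide efficient environment support for security research, attack/defense exercises, and product testing. The name combines meta- and target (a target machine); the framework is used to quickly and automatically build vulnerable cloud-native target environments, ranging from simple to complex.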

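Project goals:

- (1) Automate the construction of vulnerable scenarios for cloud-native components (specific environments).

- (2) Building on (1), integrate vulnerable scenarios for containerized applications to automate the construction of multi-layer vulnerable cloud-native scenarios (target machines).

- (3) Building on (1) and (2), automate the construction of multi-node, multi-layer vulnerable cloud-native clusters (cyber ranges).

Goal (1) mainly targets security researchers, making it easy to study and debug vulnerabilities and to write and test specific PoCs and exploits. Goals (2) and (3) mainly target red/blue teams and penetration testers who want to sharpen their hands-on cloud-native attack and defense skills.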

1.2.2 Installation

- Ubuntu 18.04 is recommended (https://releases.ubuntu.com/18.04/).

Clone the repository and install the required Python packages:

git clone https://github.com/Metarget/metarget.git

cd metarget/

pip3 install -r requirements.txt # optionally add -i https://pypi.tuna.tsinghua.edu.cn/simple to download from a faster mirror

- Verify that the installation succeeded:

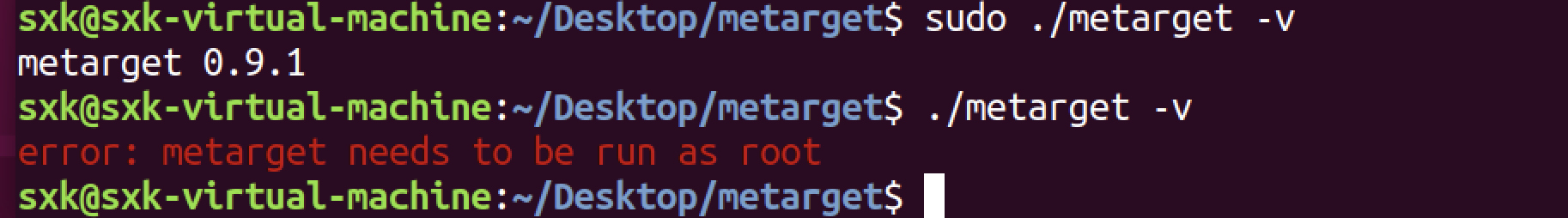

sudo ./metarget -v
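If any of the steps above fail, it is worth ruling out missing tools first. The following POSIX shell sketch checks that each named command is on PATH; the helper name require_cmds is hypothetical and not part of Metarget.

```shell
# require_cmds: hypothetical helper (not part of Metarget).
# Checks that every command named as an argument is on PATH, reports each
# missing one on stderr, and returns 1 if anything is missing.
require_cmds() {
  missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, run require_cmds git python3 pip3 || echo "install the missing tools first" before cloning the repository.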

- Use Metarget to build a vulnerable scenario, for example:

./metarget cnv install cve-2019-5736

1.2.3 Usage

1) Basic usage

sudo ./metarget -h

usage: metarget [-h] [-v] subcommand ...

automatic constructions of vulnerable infrastructures

positional arguments:

subcommand description

gadget cloud native gadgets (docker/k8s/...) management

cnv cloud native vulnerabilities management

appv application vulnerabilities management

optional arguments:

-h, --help show this help message and exit

-v, --version show program's version number and exit

2) Managing cloud-native components

- Basic usage

sxk@sxk-virtual-machine:~/Desktop/metarget$ sudo ./metarget gadget -h

usage: metarget gadget [-h] subcommand ...

positional arguments:

subcommand description

list list supported gadgets

install install gadgets

remove uninstall gadgets

optional arguments:

-h, --help show this help message and exit

- Run ./metarget gadget list to see which cloud-native components are currently supported:

sxk@sxk-virtual-machine:~/Desktop/metarget$ sudo ./metarget gadget list

List of all supported components:

docker k8s kata kernel containerd runc

The supported cloud-native components are docker, k8s, kata, kernel, containerd, and runc.

Run ./metarget gadget list docker/k8s/containerd/runc/kernel to see which versions of the corresponding component can be installed.
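To check the installable versions of all five components in one go, the per-component command can be wrapped in a loop. This is a sketch assuming Metarget is run from the cloned repository as ./metarget; the METARGET variable and the function name list_gadget_versions are hypothetical, and METARGET can be overridden (for example METARGET="sudo ./metarget").

```shell
# Command used to invoke Metarget (hypothetical variable). It is expanded
# unquoted on purpose, so a value such as "sudo ./metarget" splits into words.
METARGET="${METARGET:-./metarget}"

# list_gadget_versions: run "metarget gadget list <gadget>" for each gadget
# given as an argument, defaulting to the components named in the text.
list_gadget_versions() {
  [ "$#" -eq 0 ] && set -- docker k8s containerd runc kernel
  for g in "$@"; do
    echo "== $g =="
    $METARGET gadget list "$g"
  done
}
```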

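- Install a specific version of Docker

./metarget gadget install docker --version 18.03.1

On success, Docker 18.03.1 is installed on the current Linux system.

- Install a specific version of Kubernetes

./metarget gadget install k8s --version 1.16.5

On success, a single-node Kubernetes 1.16.5 cluster is installed on the current Linux system.

Kubernetes usually requires many parameters; Metarget exposes some of them as options: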

-v VERSION, --version VERSION

gadget version

--cni-plugin CNI_PLUGIN

cni plugin, flannel by default

--pod-network-cidr POD_NETWORK_CIDR

pod network cidr, default cidr for each plugin by default

--taint-master taint master node or not

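--domestic magic

Metarget can also deploy a multi-node Kubernetes cluster: after the single-node deployment succeeds, copy the install_k8s_worker.sh script generated in the tools directory to each worker node and run it there.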
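The multi-node step can be scripted roughly as follows. This is a minimal sketch, not part of Metarget: it assumes the script sits at tools/install_k8s_worker.sh relative to the current directory, that each worker is reachable over SSH as a user with sudo rights, and that the script must run as root on the worker. The names deploy_workers, SSH_USER, WORKER_SCRIPT and DRY_RUN are hypothetical.

```shell
# Hypothetical settings; override them in the environment as needed.
SSH_USER="${SSH_USER:-root}"
WORKER_SCRIPT="${WORKER_SCRIPT:-tools/install_k8s_worker.sh}"

# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# deploy_workers: copy the worker install script to every node given as an
# argument and execute it there with sudo.
deploy_workers() {
  for node in "$@"; do
    run scp "$WORKER_SCRIPT" "$SSH_USER@$node:/tmp/install_k8s_worker.sh"
    run ssh "$SSH_USER@$node" "sudo bash /tmp/install_k8s_worker.sh"
  done
}
```

For example, DRY_RUN=1 deploy_workers 192.168.1.11 192.168.1.12 (placeholder addresses) prints the commands; drop DRY_RUN to execute them.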

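- Install a specific version of Kata-containers

./metarget gadget install kata --version 1.10.0

On success, Kata-containers 1.10.0 is installed on the current Linux system.

You can also use the --kata-runtime-type option to choose the Kata runtime type (e.g., qemu, clh, fc); the default is qemu.

Given the network restrictions in mainland China, if the Kata-containers package cannot be downloaded, you can specify a proxy with the --https-proxy option, or download the Kata-containers archive from GitHub in advance and place it in the data/ directory; Metarget will then use the downloaded package automatically.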

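- Install a specific version of the Linux kernel

./metarget gadget install kernel --version 5.7.5

On success, kernel 5.7.5 is installed on the current Linux system.

Note:

Metarget currently installs kernels in one of two ways:

- apt

- if apt has no matching package, by downloading the Ubuntu kernel .deb packages directly and installing them

The new kernel takes effect only after a reboot; Metarget asks whether to reboot the system automatically.

- Install specific versions of containerd/runc

./metarget gadget install containerd --version 1.2.6

# On success, containerd 1.2.6 is installed on the current Linux system.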

./metarget gadget install runc --version 1.1.6

# On success, runc 1.1.6 is installed on the current Linux system.

3) Managing vulnerable scenarios for cloud-native components

- Basic usage

sxk@sxk-virtual-machine:~/Desktop/metarget$ sudo ./metarget cnv -h

usage: metarget cnv [-h] subcommand ...

positional arguments:

subcommand description

list list supported cloud native vulnerabilities

install install cloud native vulnerabilities

remove uninstall cloud native vulnerabilities

optional arguments:

-h, --help show this help message and exit

- Run ./metarget cnv list to see the currently supported vulnerable scenarios for cloud-native components:

sxk@sxk-virtual-machine:~/Desktop/metarget$ sudo ./metarget cnv list

+-------------------------------+-------------------+-----------------------------+

| name | class | type |

+-------------------------------+-------------------+-----------------------------+

| cap_dac_read_search-container | config | container_escape |

| cap_sys_admin-container | config | container_escape |

| cap_sys_module-container | config | container_escape |

| cap_sys_ptrace-container | config | container_escape |

| k8s_backdoor_cronjob | config | persistence |

| k8s_backdoor_daemonset | config | persistence |

| k8s_node_proxy | config | privilege_escalation |

| k8s_shadow_apiserver | config | persistence |

| privileged-container | config | container_escape |

| cve-2018-15664 | docker | container_escape |

| cve-2019-13139 | docker | command_execution |

| cve-2019-14271 | docker | container_escape |

| cve-2020-15257 | docker/containerd | container_escape |

| cve-2019-16884 | docker/runc | container_escape |

| cve-2019-5736 | docker/runc | container_escape |

| cve-2021-30465 | docker/runc | container_escape |

| cve-2024-21626 | docker/runc | container_escape |

| cve-2020-27151 | kata-containers | container_escape |

| kata-escape-2020 | kata-containers | container_escape |

| cve-2016-5195 | kernel | container_escape |

| cve-2016-8655 | kernel | privilege_escalation |

| cve-2017-1000112 | kernel | container_escape |

| cve-2017-16995 | kernel | privilege_escalation |

| cve-2017-6074 | kernel | privilege_escalation |

| cve-2017-7308 | kernel | privilege_escalation |

| cve-2018-18955 | kernel | privilege_escalation |

| cve-2020-14386 | kernel | container_escape |

| cve-2021-22555 | kernel | container_escape |

| cve-2021-3493 | kernel | privilege_escalation |

| cve-2021-4204 | kernel | privilege_escalation |

| cve-2022-0185 | kernel | container_escape |

| cve-2022-0492 | kernel | container_escape |

| cve-2022-0847 | kernel | container_escape |

| cve-2022-0995 | kernel | privilege_escalation |

| cve-2022-23222 | kernel | privilege_escalation |

| cve-2022-25636 | kernel | privilege_escalation |

| cve-2022-27666 | kernel | privilege_escalation |

| cve-2023-3269 | kernel | privilege_escalation |

| cve-2023-5345 | kernel | privilege_escalation |

| cve-2017-1002101 | kubernetes | container_escape |

| cve-2018-1002100 | kubernetes | container_escape |

| cve-2018-1002105 | kubernetes | privilege_escalation |

| cve-2019-1002101 | kubernetes | container_escape |

| cve-2019-11246 | kubernetes | container_escape |

| cve-2019-11249 | kubernetes | container_escape |

| cve-2019-11251 | kubernetes | container_escape |

| cve-2019-11253 | kubernetes | denial_of_service |

| cve-2019-9512 | kubernetes | denial_of_service |

| cve-2019-9514 | kubernetes | denial_of_service |

| cve-2019-9946 | kubernetes | traffic_interception |

| cve-2020-10749 | kubernetes | man_in_the_middle |

| cve-2020-8554 | kubernetes | man_in_the_middle |

| cve-2020-8555 | kubernetes | server_side_request_forgery |

| cve-2020-8557 | kubernetes | denial_of_service |

| cve-2020-8558 | kubernetes | exposure_of_service |

| cve-2020-8559 | kubernetes | privilege_escalation |

| cve-2021-25741 | kubernetes | container_escape |

| mount-docker-sock | mount | container_escape |

| mount-host-etc | mount | container_escape |

| mount-host-procfs | mount | container_escape |

| mount-var-log | mount | container_escape |

| no-vuln-ubuntu | no-vuln | test |

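+-------------------------------+-------------------+-----------------------------+

- Examples of installing vulnerable environments

./metarget cnv install cve-2019-5736 # on success, a Docker affected by CVE-2019-5736 is installed on the current Linux system.

./metarget cnv install cve-2018-1002105 # on success, a single-node Kubernetes cluster affected by CVE-2018-1002105 is installed on the current Linux system.

./metarget cnv install cve-2016-5195 # on success, a Linux kernel affected by CVE-2016-5195 is installed on the current system.

- Kata-containers secure container escape

./metarget cnv install kata-escape-2020

On success, Kata-containers affected by CVE-2020-2023/2025/2026 and related vulnerabilities are installed on the current system.

As with the Kata-containers gadget install above, if the package cannot be downloaded, use --https-proxy or place a pre-downloaded Kata-containers archive in the data/ directory.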
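The cnv list table is long; to pick out scenarios of a single type (for example container_escape), the table can be filtered on its type column. A minimal awk sketch, assuming the three-column |-separated layout shown above; cnv_by_type is a hypothetical helper name.

```shell
# cnv_by_type: read the ASCII table printed by "metarget cnv list" on stdin
# and print the names of scenarios whose "type" column equals $1.
# Border lines (+----+) and blank lines have fewer than 4 fields and are skipped.
cnv_by_type() {
  awk -F'|' -v want="$1" '
    NF >= 4 {
      name = $2; type = $4
      gsub(/^[ \t]+|[ \t]+$/, "", name)   # trim padding around the cell
      gsub(/^[ \t]+|[ \t]+$/, "", type)
      if (type == want) print name
    }'
}
```

Usage: sudo ./metarget cnv list | cnv_by_type container_escape. The appv list table uses the same name/class/type layout, so the function works on its output too.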

4) Managing vulnerable scenarios for cloud-native applications

- Basic usage

sxk@sxk-virtual-machine:~/Desktop/metarget$ sudo ./metarget appv -h

usage: metarget appv [-h] subcommand ...

positional arguments:

subcommand description

list list supported application vulnerabilities

install install application vulnerabilities

remove uninstall application vulnerabilities

ps show running application vulnerabilities

optional arguments:

-h, --help show this help message and exit

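Run ./metarget appv list to see the currently supported vulnerable scenarios for cloud-native applications.

Docker and Kubernetes must be installed before building vulnerable application scenarios; both can be installed with the Metarget commands described above.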

sxk@sxk-virtual-machine:~/Desktop/metarget$ sudo ./metarget appv list

+---------------------------------------+----------------+-----------------+

| name | class | type |

+---------------------------------------+----------------+-----------------+

| cve-2015-5254 | activemq | rce |

| cve-2016-3088 | activemq | rce |

| apereo-cas-4-1-rce | apereo-cas | rce |

| aria2-rce | aria2 | rce |

| cve-2017-3066 | coldfusion | rce |

| cve-2019-3396 | confluence | rce |

| cve-2021-26084 | confluence | rce |

| cve-2017-12636 | couchdb | rce |

| discuz-wooyun-2010-080723 | discuz | rce |

| cve-2017-6920 | drupal | rce |

| cve-2018-7600 | drupal | rce |

| cve-2018-7602 | drupal | rce |

| cve-2019-6339 | drupal | rce |

| cve-2019-17564 | dubbo | deserialization |

| dvwa | dvwa | comprehensive |

| ecshop-xianzhi-2017-02-82239600 | ecshop | rce |

| cve-2014-3120 | elasticsearch | rce |

| cve-2015-1427 | elasticsearch | rce |

| cve-2018-1000006 | electron | rce |

| cve-2018-15685 | electron | rce |

| fastjson-1-2-24-rce | fastjson | rce |

| fastjson-1-2-47-rce | fastjson | rce |

| cve-2020-17518 | flink | rce |

| cve-2020-17519 | flink | rce |

| cve-2018-16509 | ghostscript | rce |

| cve-2018-19475 | ghostscript | rce |

| cve-2019-6116 | ghostscript | rce |

| gitea-1-4-rce | gitea | rce |

| cve-2023-4911 | glibc | local-root |

| cve-2017-17562 | goahead | rce |

| cve-2021-42342 | goahead | rce |

| cve-2021-43798 | grafana | fileread |

| h2database-h2-console-unacc | h2database | unauthorized |

| cve-2020-29599 | imagemagick | rce |

| imagemagick-imagetragick | imagemagick | rce |

| influxdb-unacc | influxdb | unauthorized |

| cve-2017-7525 | jackson | rce |

| cve-2017-12149 | jboss | rce |

| cve-2017-7504 | jboss | rce |

| cve-2017-1000353 | jenkins | rce |

| cve-2018-1000861 | jenkins | rce |

| cve-2024-23897 | jenkins | rce |

| cve-2018-1297 | jmeter | rce |

| cve-2015-8562 | joomla | rce |

| jupyter-notebook-rce | jupyter | rce |

| cve-2019-7609 | kibana | rce |

| cve-2021-3129 | laravel | rce |

| cve-2018-10933 | libssh | bypass |

| cve-2020-7961 | liferay-portal | rce |

| cve-2017-5645 | log4j | rce |

| cve-2021-44228 | log4j | rce |

| mojarra-jsf-viewstate-deserialization | mojarra | deserialization |

| cve-2019-10758 | mongo-express | rce |

| cve-2019-7238 | nexus | rce |

| cve-2020-10199 | nexus | rce |

| cve-2020-10204 | nexus | rce |

| no-vuln | no-vuln | test |

| cve-2020-9496 | ofbiz | rce |

| cve-2020-7247 | opensmtpd | rce |

| cve-2014-0160 | openssl | rce |

| cve-2022-0778 | openssl | rce |

| cve-2020-35476 | opentsdb | rce |

| cve-2012-1823 | php | rce |

| cve-2018-19518 | php | rce |

| cve-2019-11043 | php | rce |

| php-8-1-backdoor | php | rce |

| php-fpm | php | unauthorized |

| cve-2016-5734 | phpmyadmin | rce |

| phpmyadmin-wooyun-2016-199433 | phpmyadmin | rce |

| cve-2017-9841 | phpunit | rce |

| cve-2019-9193 | postgres | rce |

| cve-2017-8291 | python | rce |

| cve-2018-16509 | python | rce |

| redis-4-unacc | redis | unauthorized |

| cve-2021-22911 | rocketchat | rce |

| cve-2020-11651 | saltstack | bypass |

| cve-2020-11652 | saltstack | filewrite |

| cve-2020-16846 | saltstack | rce |

| cve-2017-7494 | samba | rce |

| scrapy-scrapyd-unacc | scrapy | unauthorized |

| cve-2016-4437 | shiro | rce |

| cve-2020-1957 | shiro | rce |

| cve-2017-12629 | solr | rce |

| cve-2019-0193 | solr | rce |

| cve-2019-17558 | solr | rce |

| spark-unacc | spark | unauthorized |

| cve-2016-4977 | spring | rce |

| cve-2017-4971 | spring | rce |

| cve-2017-8046 | spring | rce |

| cve-2018-1270 | spring | rce |

| cve-2018-1273 | spring | rce |

| cve-2022-22947 | spring | rce |

| cve-2022-22963 | spring | rce |

| cve-2022-22965 | spring | rce |

| struts2-s2-032 | struts2 | rce |

| struts2-s2-045 | struts2 | rce |

| struts2-s2-046 | struts2 | rce |

| struts2-s2-048 | struts2 | rce |

| struts2-s2-052 | struts2 | rce |

| struts2-s2-053 | struts2 | rce |

| thinkphp-2-rce | thinkphp | rce |

| thinkphp-5-0-23-rce | thinkphp | rce |

| thinkphp-5-rce | thinkphp | rce |

| cve-2020-13942 | unomi | rce |

| python-unpickle-vuln | unpickle | rce |

| cve-2017-10271 | weblogic | rce |

| cve-2018-2628 | weblogic | rce |

| cve-2020-14882 | weblogic | rce |

| cve-2019-15107 | webmin | rce |

| wordpress-pwnscriptum | wordpress | rce |

| cve-2021-21351 | xstream | rce |

| cve-2021-29505 | xstream | rce |

| cve-2017-2824 | zabbix | rce |

| cve-2020-11800 | zabbix | rce |

+---------------------------------------+----------------+-----------------+

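- Example: deploying a vulnerable cloud-native application

./metarget appv install dvwa

# On success, DVWA is deployed in the current cluster as a Deployment and a Service.

- Specify --external to expose the service as a NodePort, so that you can reach it through a worker node's IP (by default, the service type is ClusterIP).

- Specify --host-net to make the vulnerable application share the host's network namespace.

- Specify --host-pid to make the vulnerable application share the host's PID namespace.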
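With --external, the port to browse to appears in the PORT(S) column of kubectl get svc, in the form port:nodePort/protocol (e.g. 80:31234/TCP). A small sketch for extracting it; nodeport_from_ports is a hypothetical helper and only handles the first port mapping.

```shell
# nodeport_from_ports: given a PORT(S) value from "kubectl get svc"
# (e.g. "80:31234/TCP"), print the NodePort ("31234").
# A ClusterIP value such as "80/TCP" has no NodePort and prints nothing.
nodeport_from_ports() {
  printf '%s\n' "$1" | cut -d, -f1 | sed -n 's#^[0-9]*:\([0-9]*\)/.*#\1#p'
}
```

kubectl get svc -A --no-headers | awk '$3 == "NodePort" {print $2, $6}' lists NodePort services with their PORT(S) value; pass that value to nodeport_from_ports and open http://<worker-node-IP>:<nodePort>. Alternatively, kubectl get svc <service-name> -o jsonpath='{.spec.ports[0].nodePort}' returns the number directly.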

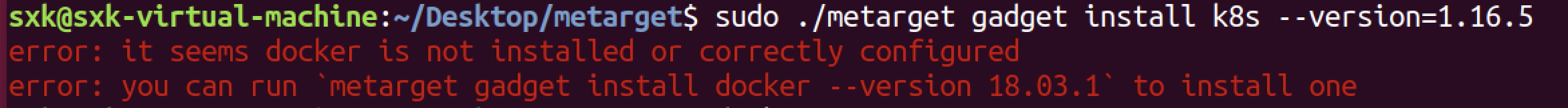

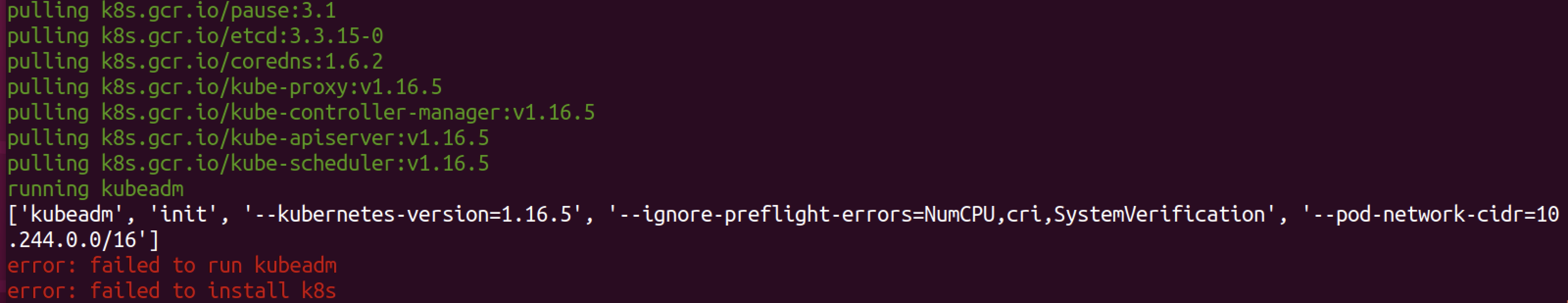

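1.2.4 Creating the k8s environment

sudo ./metarget gadget install k8s --version=1.16.5

Installing Docker

Running the command above produced the following error message:

sudo apt update # refresh the package lists

# Before continuing, it is advisable to uninstall any old Docker packages with:

sudo apt-get remove docker docker-engine docker.io

sudo apt install docker.io

sudo systemctl status docker # check the service status

sudo systemctl enable docker # start Docker at boot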

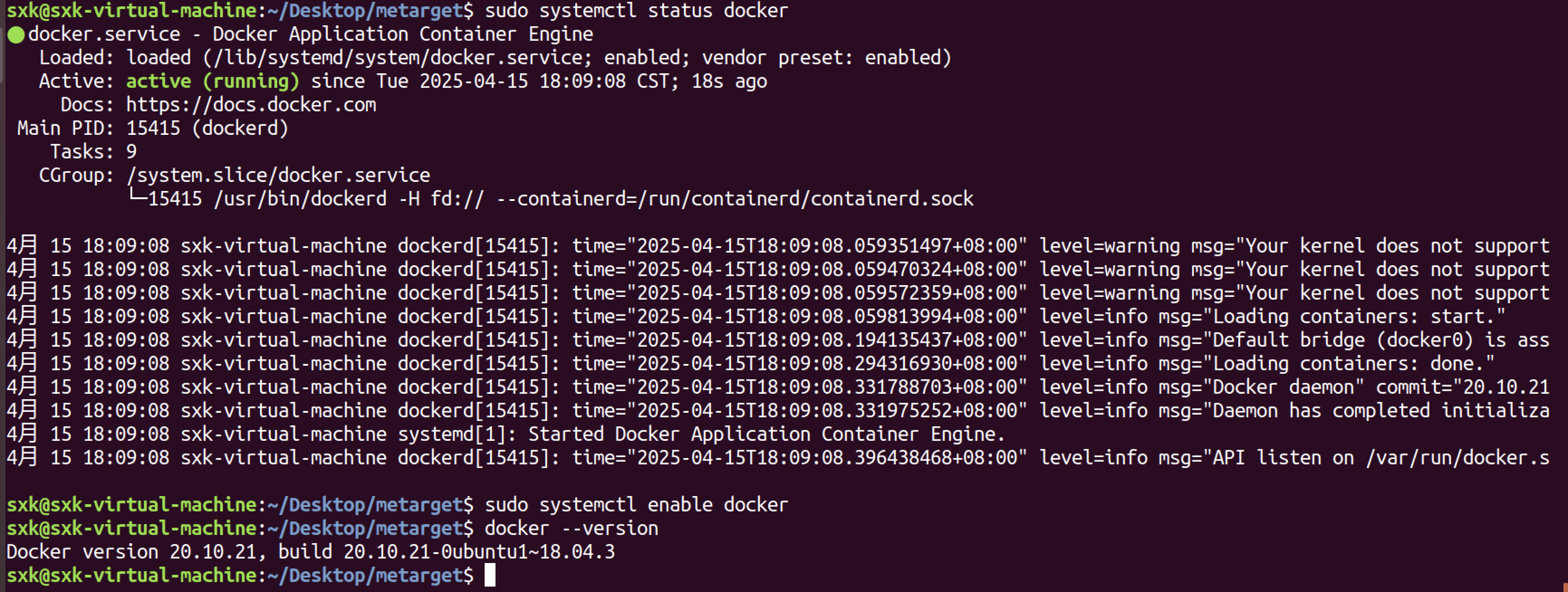

重新执行k8s环境构建命令报错。

解决镜像拉取报错问题

sudo ./metarget gadget install k8s --version=1.16.5 --verbose添加

--verbose参数查看详细信息。

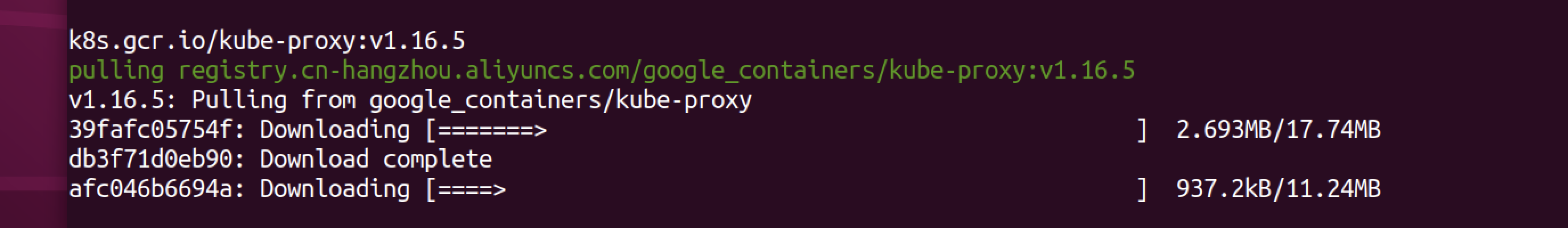

拉取镜像的时候报错,可能是网络原因。

尝试直接拉镜像:

sxk@sxk-virtual-machine:~/Desktop/metarget$ sudo docker pull k8s.gcr.io/kube-scheduler:v1.16.5 Error response from daemon: Get "https://k8s.gcr.io/v2/": net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)确实是镜像拉不下来的问题。

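The official documentation provides a solution:

Given the network restrictions in mainland China, if you cannot reach the official Kubernetes image registry, specify the following option to complete the deployment:

--domestic: with this option, Metarget pulls the Kubernetes system component images from a domestic mirror (Aliyun) without a proxy (downloads occasionally fail and may need several retries).

If the host can reach the official Kubernetes image registry directly, this option is unnecessary.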

sudo ./metarget gadget install k8s --version=1.16.5 --verbose --domestic

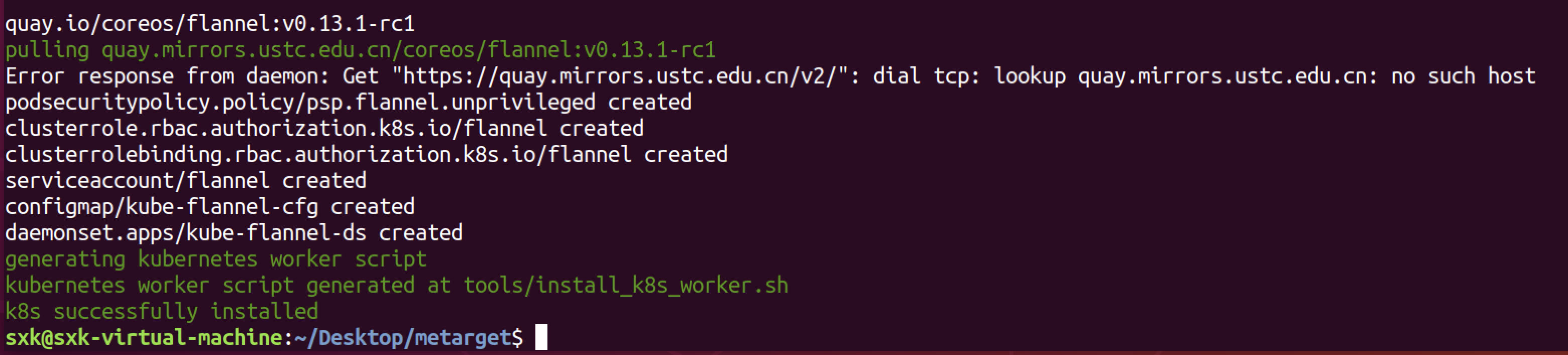

The images can now be pulled successfully.

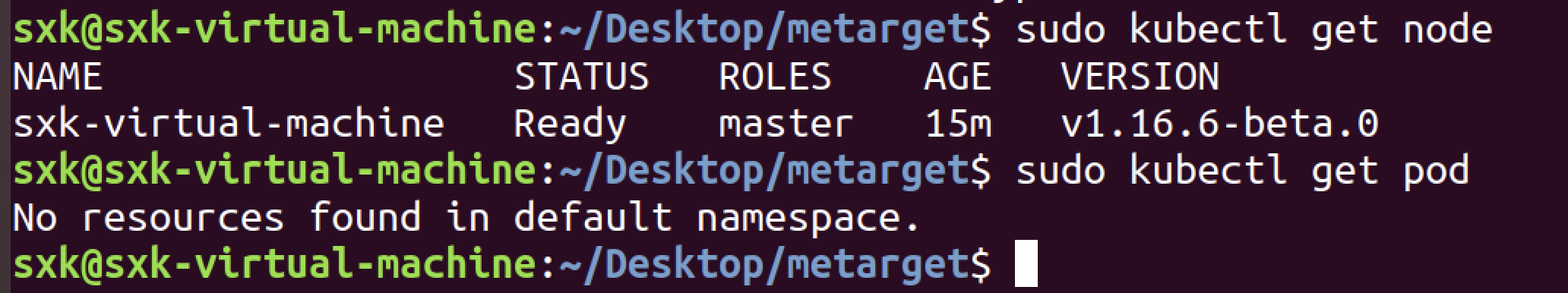

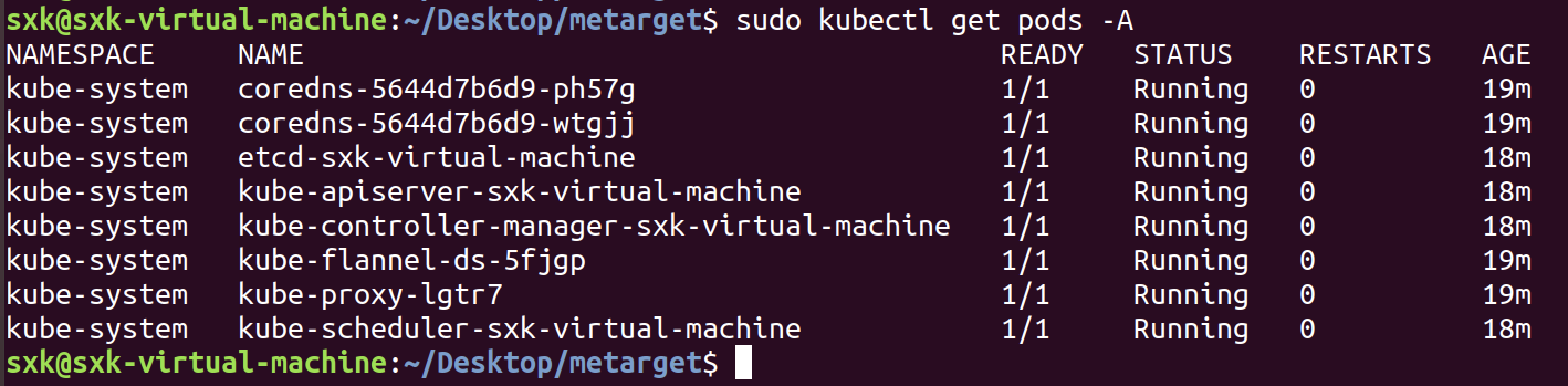

The k8s 1.16.5 environment was built successfully.

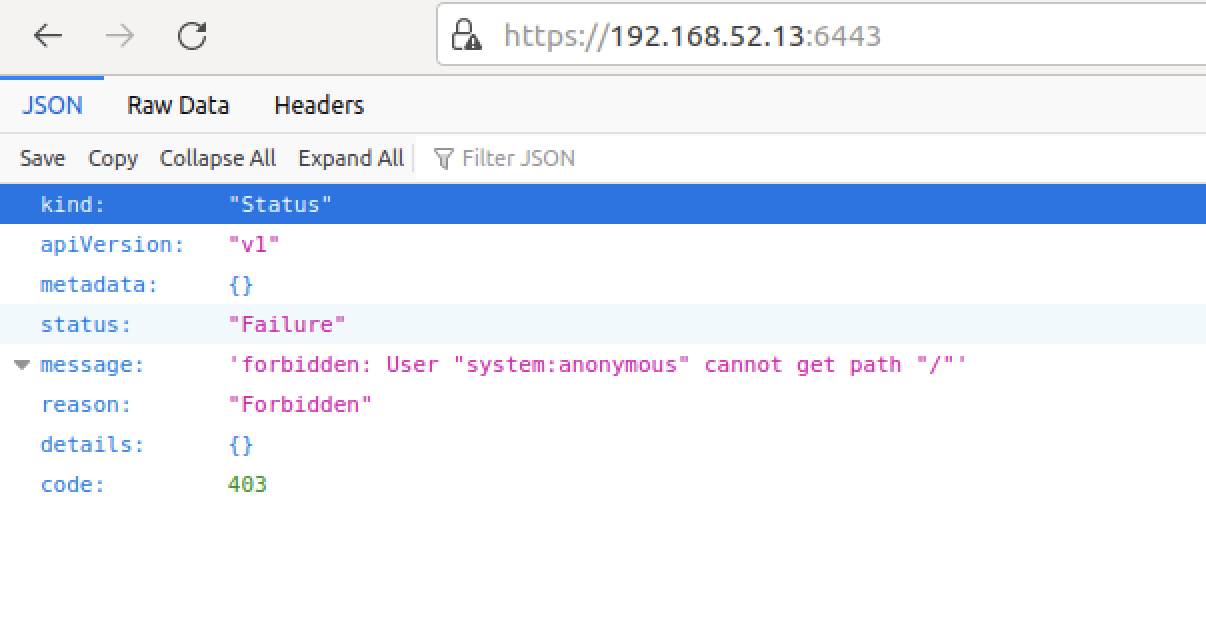

Testing access to the apiserver:
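A quick command-line test is to send one unauthenticated request and look at the HTTP status code. The sketch below uses hypothetical helper names (probe_apiserver, classify_apiserver_status); it relies on curl's -w '%{http_code}' to print only the status and on -k to skip certificate verification, which is acceptable only in a lab.

```shell
# classify_apiserver_status: interpret the HTTP status code returned by an
# unauthenticated request to the API server.
classify_apiserver_status() {
  case "$1" in
    200) echo "OPEN: unauthenticated request was served" ;;
    401) echo "AUTH REQUIRED: anonymous request was rejected" ;;
    403) echo "FORBIDDEN: anonymous user was authenticated but RBAC denied it" ;;
    000) echo "UNREACHABLE: no HTTP response (port closed or filtered)" ;;
    *)   echo "OTHER: HTTP $1" ;;
  esac
}

# probe_apiserver: send one unauthenticated GET to URL $1 and classify it.
# curl prints 000 when it gets no HTTP response at all.
probe_apiserver() {
  code=$(curl -sk -o /dev/null -w '%{http_code}' --max-time 5 "$1")
  classify_apiserver_status "$code"
}
```

For example, probe_apiserver https://<IP>:6443/api/v1/nodes and probe_apiserver http://<IP>:8080/api/v1/nodes. On a default cluster the first request is typically rejected with 403 (or 401 if anonymous auth is disabled), while an exposed insecure port returns 200.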

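II. Unauthorized Access to the apiserver (reproduction with Metarget failed)

2.1 Overview

Unauthorized access to the Kubernetes (K8s) API Server is a common high-risk security issue in cloud-native environments. The core risk is that an attacker can operate on cluster resources directly without authentication, and can even take over the entire cluster.

2.2 How the vulnerability works

Default port configuration:

By default, the API Server listens on port 8080 (the insecure port) and port 6443 (the secure port).

Port 8080: enabled by default in old versions (before v1.16.0) and requires no authentication, so exposing it leads to unauthorized access. By default it binds only to 127.0.0.1 (--insecure-bind-address); it becomes reachable from outside once bound to another address. Newer versions (v1.20+) have deprecated the --insecure-port flag, which can then only be set to 0.

Port 6443: requires TLS authentication, but with a misconfiguration (for example, binding the anonymous user system:anonymous to the cluster-admin ClusterRole), an attacker can bypass authentication.

2.3 Affected versions

High-risk versions: the default configuration of Kubernetes versions before v1.16.0 is vulnerable.

Risk in newer versions: the vulnerability can still be reproduced if port 8080 is enabled manually or RBAC is misconfigured.

2.4 Unauthorized access via port 8080

2.4.1 Accessing port 8080 directly

An attacker connects directly to the unauthenticated port 8080 with a browser or kubectl and retrieves cluster information (such as nodes, Pods, and namespaces).

kubectl -s http://<IP>:8080 get nodes # list the nodes

2.5 Reproducing the vulnerability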

2.5.1 条件

- 1、k8s版本小于1.16.0

- 2、8080对公网开放。

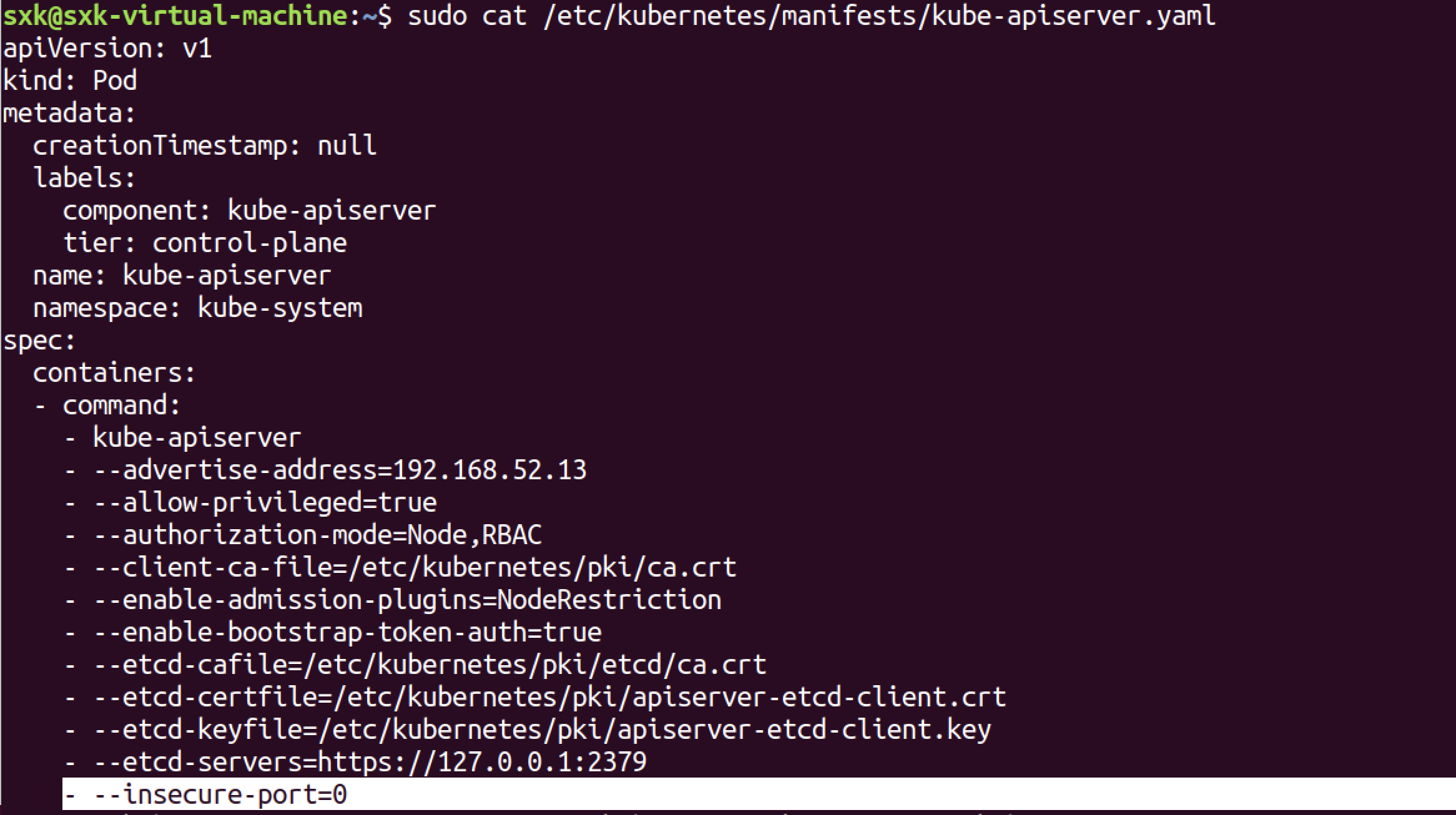

目前的k8s版本1.16.5,查看配置文件。

sudo cat /etc/kubernetes/manifests/kube-apiserver.yaml

可以看到--insecure-port参数为0,默认不开启8080。

在高版本的k8s中,甚至直接将–insecure-port这个配置删除了,需要手动添加。

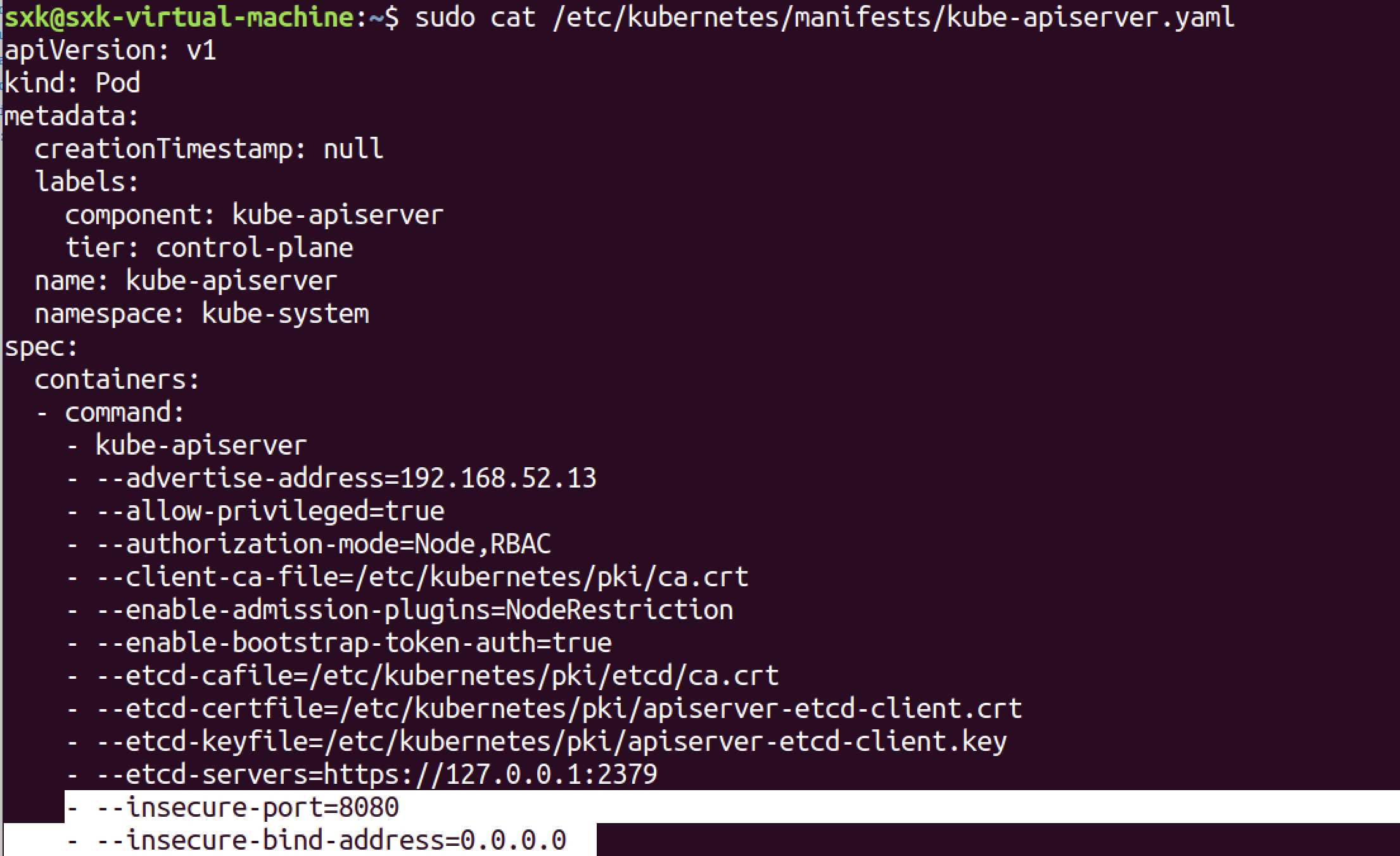
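To check the flag without reading the whole manifest, its value can be extracted with sed. A minimal sketch; insecure_port_of is a hypothetical helper, and it assumes the flag is written in the --insecure-port=<n> form used by the manifest above. Reading the file normally requires root.

```shell
# insecure_port_of: print the value of --insecure-port from a kube-apiserver
# static Pod manifest, or "unset" if the flag does not appear in the file.
insecure_port_of() {
  manifest="${1:-/etc/kubernetes/manifests/kube-apiserver.yaml}"
  value=$(sed -n 's/.*--insecure-port=\([0-9]*\).*/\1/p' "$manifest" | head -n 1)
  echo "${value:-unset}"
}
```

A result of 0 means the insecure port is disabled; "unset" on an old version means the compiled-in default applies.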

2.5.2 Reproducing the insecure configuration

1) k8s 1.16.5

- --insecure-port=8080 # enable port 8080

- --insecure-bind-address=0.0.0.0 # allow access from any IP (never do this in production)

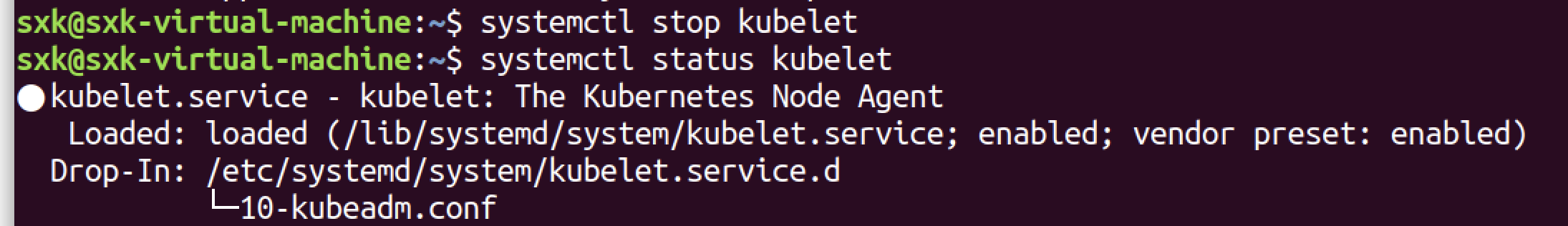

systemctl restart kubelet

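After this change, port 8080 was still not listening.

Disabling the firewall with ufw disable did not help.

After stopping the kubelet service, port 6443 was still reachable, so the problem did not seem to be something restarting kubelet could fix. This is expected: stopping kubelet does not stop containers that are already running, so the existing apiserver container keeps serving on 6443.

# check the kube-apiserver process

ps aux | grep kube-apiserver

# check the container status (if it runs as a container)

docker ps | grep apiserver

Both the process and the container exist.

- --insecure-port=8088 # enable port 8088

- --insecure-bind-address=0.0.0.0 # allow access from any IP (never do this in production)

systemctl restart kubelet

After changing the port to 8088 and restarting kubelet, port 8088 was still not listening.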
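For reference, the manual edit can be scripted. This is a lab-only sketch under several assumptions: GNU sed (for -i and \n in the replacement), an existing --insecure-port=<n> line in the manifest, and a layout where each flag is a "- --flag=value" list item. Two details are worth knowing: kubelet watches /etc/kubernetes/manifests and recreates the static Pod when the file changes, so restarting kubelet should not be required; and a backup copy left inside that directory may itself be loaded as a manifest, which is why the sketch writes it elsewhere. set_insecure_port is a hypothetical name.

```shell
# set_insecure_port MANIFEST PORT [BACKUP_DIR]
# Rewrites --insecure-port in a kube-apiserver manifest and, if missing,
# adds --insecure-bind-address=0.0.0.0 right after it (lab use only).
set_insecure_port() {
  manifest="$1"; port="$2"; backup_dir="${3:-/tmp}"
  # Keep the backup outside the static Pod directory.
  cp "$manifest" "$backup_dir/kube-apiserver.yaml.bak" || return 1
  sed -i "s/--insecure-port=[0-9]*/--insecure-port=$port/" "$manifest"
  if ! grep -q -- '--insecure-bind-address=' "$manifest"; then
    # Reuse the indentation and "- " prefix of the insecure-port line.
    sed -i "s/^\([[:space:]]*- \)--insecure-port=$port\$/&\n\1--insecure-bind-address=0.0.0.0/" "$manifest"
  fi
}
```

Run it as root, e.g. set_insecure_port /etc/kubernetes/manifests/kube-apiserver.yaml 8080, wait for the Pod to be recreated, then check with ss -lnt | grep ':8080'.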

2) k8s 1.15.0, 1.14.0, 1.13.0: reproduction failed

A lower version may be required, so rebuild the environment:

sudo ./metarget gadget install k8s --version=1.15.0 --verbose --domestic

The default configuration is still --insecure-port=0.

Analyzing why enabling port 8080 failed

sudo journalctl -u kubelet -f | grep "apiserver" # view the kubelet logs

Apr 28 16:55:56 sxk-virtual-machine kubelet[43570]: E0428 16:55:56.030404 43570 pod_workers.go:191] Error syncing pod 63bc54d258d7371ae068c579971232e4 ("kube-apiserver-sxk-virtual-machine_kube-system(63bc54d258d7371ae068c579971232e4)"), skipping: unmounted volumes=[etc-pki k8s-certs usr-local-share-ca-certificates usr-share-ca-certificates ca-certs etc-ca-certificates], unattached volumes=[etc-pki k8s-certs usr-local-share-ca-certificates usr-share-ca-certificates ca-certs etc-ca-certificates]: timed out waiting for the condition

Apr 28 16:55:56 sxk-virtual-machine kubelet[43570]: E0428 16:55:56.073605 43570 kubelet.go:1682] Unable to attach or mount volumes for pod "kube-apiserver-sxk-virtual-machine_kube-system(5c691af0427863a8f315ed592592d034)": unmounted volumes=[usr-local-share-ca-certificates usr-share-ca-certificates ca-certs etc-ca-certificates etc-pki k8s-certs], unattached volumes=[usr-local-share-ca-certificates usr-share-ca-certificates ca-certs etc-ca-certificates etc-pki k8s-certs]: timed out waiting for the condition; skipping pod

Apr 28 16:55:56 sxk-virtual-machine kubelet[43570]: E0428 16:55:56.073681 43570 pod_workers.go:191] Error syncing pod 5c691af0427863a8f315ed592592d034 ("kube-apiserver-sxk-virtual-machine_kube-system(5c691af0427863a8f315ed592592d034)"), skipping: unmounted volumes=[usr-local-share-ca-certificates usr-share-ca-certificates ca-certs etc-ca-certificates etc-pki k8s-certs], unattached volumes=[usr-local-share-ca-certificates usr-share-ca-certificates ca-certs etc-ca-certificates etc-pki k8s-certs]: timed out waiting for the condition

The log shows kubelet repeatedly timing out while mounting the volumes of the kube-apiserver static Pod, which suggests that the modified apiserver Pod never started.

2.5.3 环境搭建

sudo ./metarget gadget install docker --version 18.03.1 --domestic --verbose #安装docker

sudo ./metarget gadget install k8s --version 1.16.5 --domestic --verbose #安装k8s⚠️:有时候可能需要多次尝试,原因多为网络问题。

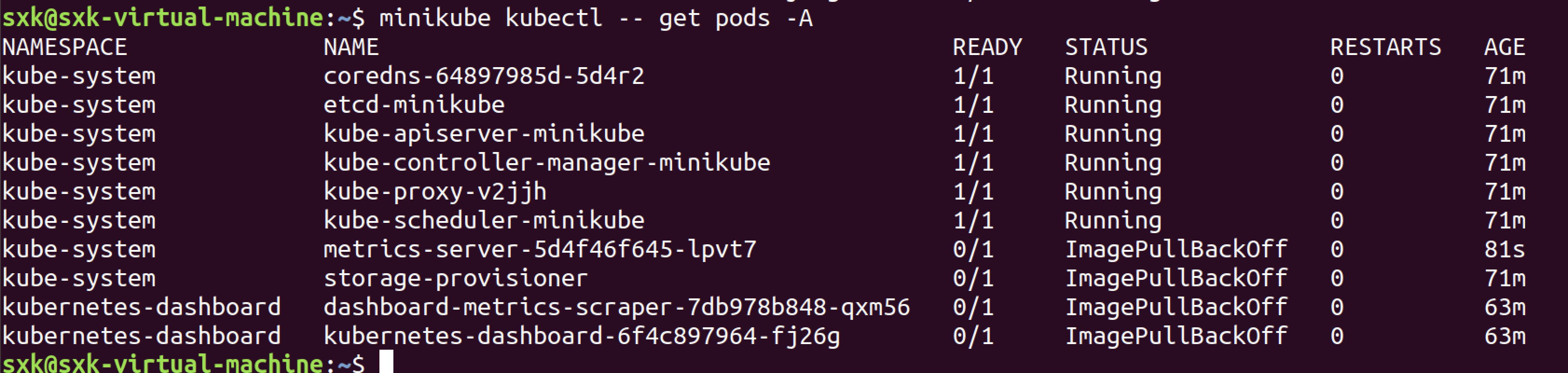

sudo kubectl get pods -A # 用于查看 Kubernetes 集群中所有命名空间下 Pod 状态的命令。
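To spot failing Pods quickly in that output, filter on the STATUS column. A minimal awk sketch assuming the default column order of kubectl get pods -A (NAMESPACE, NAME, READY, STATUS, ...); not_ready_pods is a hypothetical name. Note that a Pod shown as Running with READY 0/1 is not flagged.

```shell
# not_ready_pods: read "kubectl get pods -A" output on stdin and print
# "NAMESPACE/NAME STATUS" for Pods whose STATUS is neither Running nor Completed.
not_ready_pods() {
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}
```

Usage: sudo kubectl get pods -A | not_ready_pods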

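2.6 Defense and remediation recommendations

- Close unnecessary ports

Disable port 8080: it is off by default in newer versions; on older versions, check kube-apiserver.yaml and make sure --insecure-port=0 is set.

Restrict access to port 6443: allow only trusted IPs through a firewall or security group.

- Strengthen authentication and authorization

Enable TLS: serve the API only on port 6443 with valid certificates.

Fine-grained RBAC: never bind system:anonymous to highly privileged roles, and follow the principle of least privilege.

- Monitoring and log auditing

Monitor API Server logs in real time to detect anomalous requests (such as frequent unauthorized access attempts).

Enable audit logging to record all API requests for forensic tracing.

- Upgrade and harden Kubernetes

Upgrade to a recent version to fix known vulnerabilities (for example, v1.20+ no longer allows the insecure port to be enabled).

Use Pod Security Policy (PSP) to restrict container privileges and prevent malicious Pods from escaping. Note that PSP was deprecated in v1.21 and removed in v1.25; newer clusters use Pod Security Admission instead.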
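The RBAC recommendation can be checked with kubectl get clusterrolebindings -o wide, whose USERS and GROUPS columns list the bound subjects. A minimal sketch that flags bindings mentioning system:anonymous or system:unauthenticated; anonymous_bindings is a hypothetical helper. On recent versions the default system:public-info-viewer binding grants system:unauthenticated access to version and health endpoints and will show up here; anything bound to cluster-admin deserves immediate attention.

```shell
# anonymous_bindings: read "kubectl get clusterrolebindings -o wide" output on
# stdin and print "NAME ROLE" for bindings whose subjects include
# system:anonymous or system:unauthenticated (header line skipped).
anonymous_bindings() {
  awk 'NR > 1 && /system:(anonymous|unauthenticated)/ { print $1, $2 }'
}
```

Usage: kubectl get clusterrolebindings -o wide | anonymous_bindings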

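III. Setting Up the Kubernetes Goat Cloud-Native Lab (minikube)

You can follow this article for the setup, but expect a few pitfalls:

https://mp.weixin.qq.com/s/qzxjZyXO3hCADK1MKtRZOg (K8s lab: deploying KubeGoat)

It is better to follow the official project and documentation:

https://github.com/madhuakula/kubernetes-goat (official repository)

https://madhuakula.com/kubernetes-goat/docs/ (official documentation)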

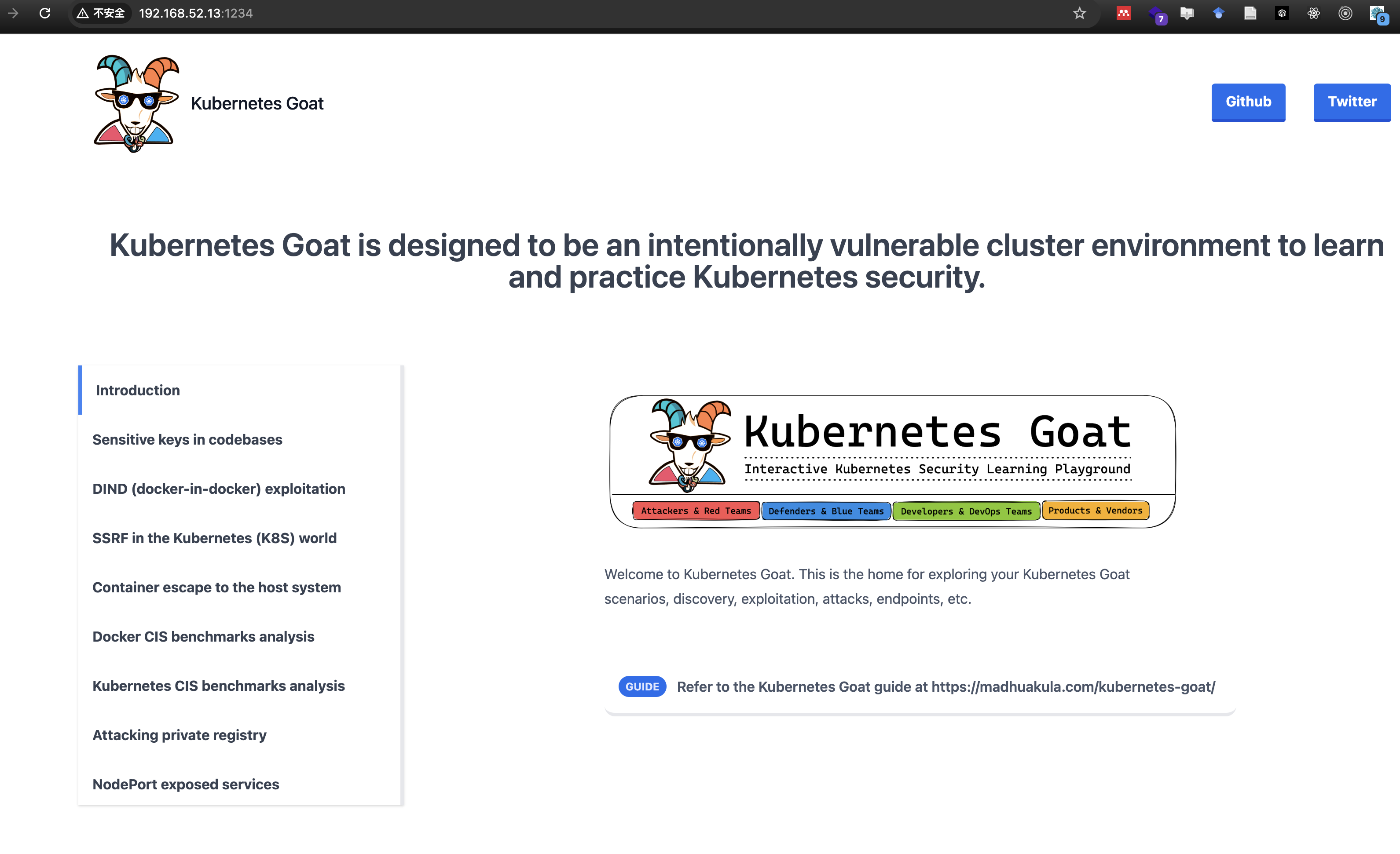

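3.0 Introduction

Kubernetes Goat is an interactive Kubernetes security learning platform. Through deliberately designed vulnerable scenarios, it demonstrates common misconfigurations, real-world vulnerabilities, and security issues in Kubernetes clusters, containers, and cloud-native environments. Learning Kubernetes security in a way that is safe, efficient, and hands-on has always been difficult. This project serves more than security researchers: its hands-on scenarios show how attackers exploit vulnerabilities, how defenders protect against them, and how developers and DevOps teams can avoid the risks, so it is valuable to anyone learning Kubernetes security.

Kubernetes Goat currently contains more than 20 attack and defense scenarios, covering attack techniques, defense strategies, best practices, and tooling.

3.1 Attempt 1 [failed ❌]

Step 1: Prepare the system environment

1. Update the system and install dependencies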

sudo apt-get update

sudo apt-get install -y apt-transport-https curl ca-certificates conntrack

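conntrack is a tool that Kubernetes depends on; if it is missing, startup fails with an error.

2. Disable swap (optional)

Some tutorials recommend disabling swap to avoid compatibility issues.

sudo swapoff -a

sudo sed -i '/ swap / s/^/#/' /etc/fstab # disable permanently

Step 2: Install the required components

1. Install kubectl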

curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl

chmod +x ./kubectl

sudo mv ./kubectl /usr/local/bin/kubectl

Verify the installation: kubectl version --client.
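The official kubectl installation guide also recommends validating the downloaded binary against its published SHA-256 checksum. A minimal helper for the comparison; verify_sha256 is a hypothetical name and it relies on GNU coreutils sha256sum.

```shell
# verify_sha256 FILE EXPECTED_HEX
# Returns 0 if the SHA-256 digest of FILE equals EXPECTED_HEX, 1 otherwise.
verify_sha256() {
  file="$1"; expected="$2"
  actual=$(sha256sum "$file" | cut -d' ' -f1)
  [ "$actual" = "$expected" ]
}
```

Following the URL scheme of the official guide, download the checksum with curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl.sha256", then run verify_sha256 kubectl "$(cat kubectl.sha256)" before moving the binary into /usr/local/bin.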

2. Install Docker

sudo apt-get install -y docker.io

sudo systemctl enable docker && sudo systemctl start docker

Configure the Aliyun registry mirror (optional but recommended).
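A registry mirror is configured through the registry-mirrors key of /etc/docker/daemon.json. A minimal sketch; the mirror URL is a placeholder (Aliyun issues a per-account accelerator address in its Container Registry console), write_registry_mirror is a hypothetical helper, and it refuses to overwrite an existing daemon.json so that other settings are not lost. Keep in mind that Docker applies registry mirrors only to Docker Hub images, so this does not help with images from k8s.gcr.io.

```shell
# write_registry_mirror MIRROR_URL [TARGET]
# Writes a daemon.json containing a single registry mirror.
# Fails instead of overwriting an existing file.
write_registry_mirror() {
  mirror_url="$1"; target="${2:-/etc/docker/daemon.json}"
  if [ -e "$target" ]; then
    echo "refusing to overwrite existing $target; merge registry-mirrors by hand" >&2
    return 1
  fi
  printf '{\n  "registry-mirrors": ["%s"]\n}\n' "$mirror_url" > "$target"
}
```

As root, run write_registry_mirror "https://<your-id>.mirror.aliyuncs.com", then systemctl daemon-reload && systemctl restart docker; docker info should then list the address under Registry Mirrors.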

3. Install Minikube

curl -Lo minikube https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64

chmod +x minikube

sudo mv minikube /usr/local/bin/

Verify the installation: minikube version.

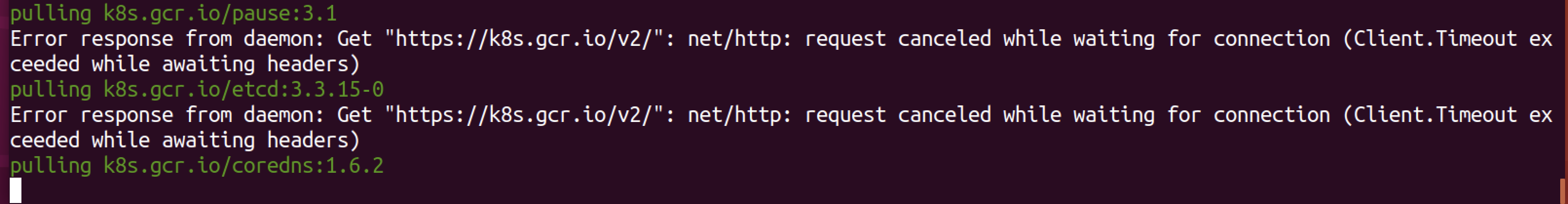

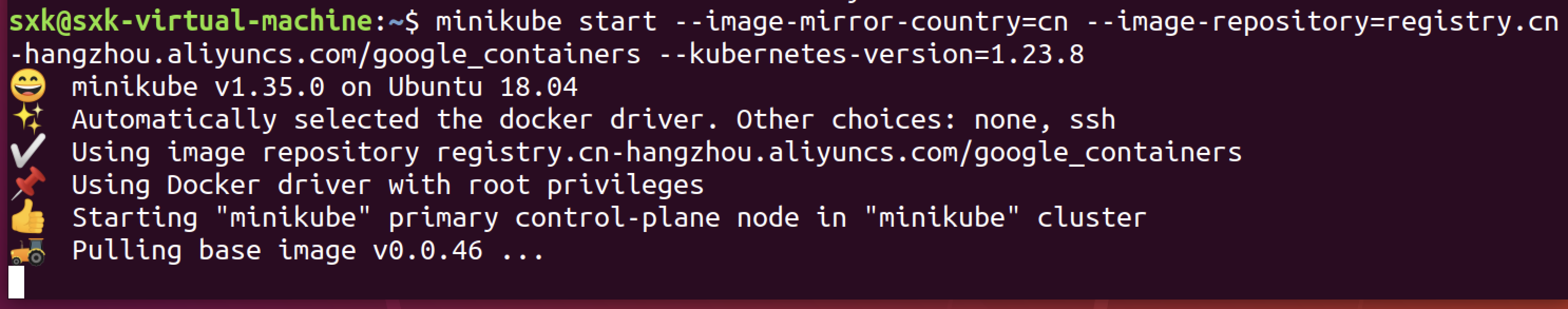

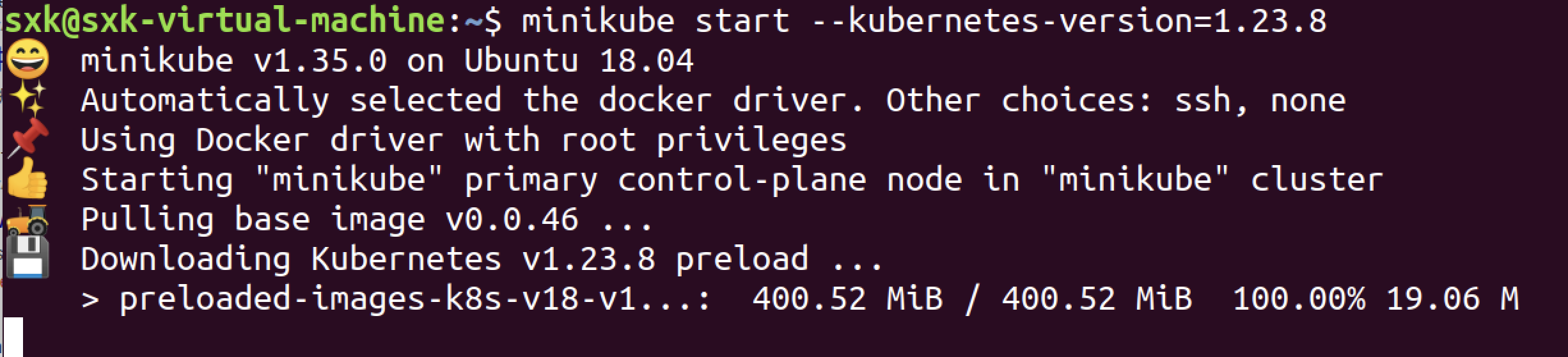

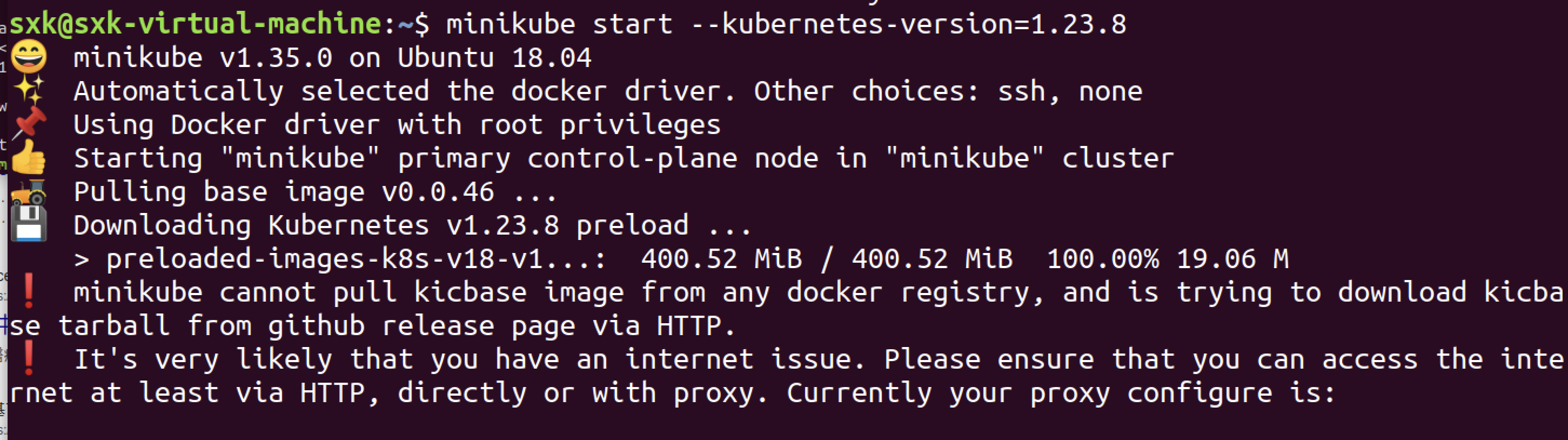

Step 3: Start minikube

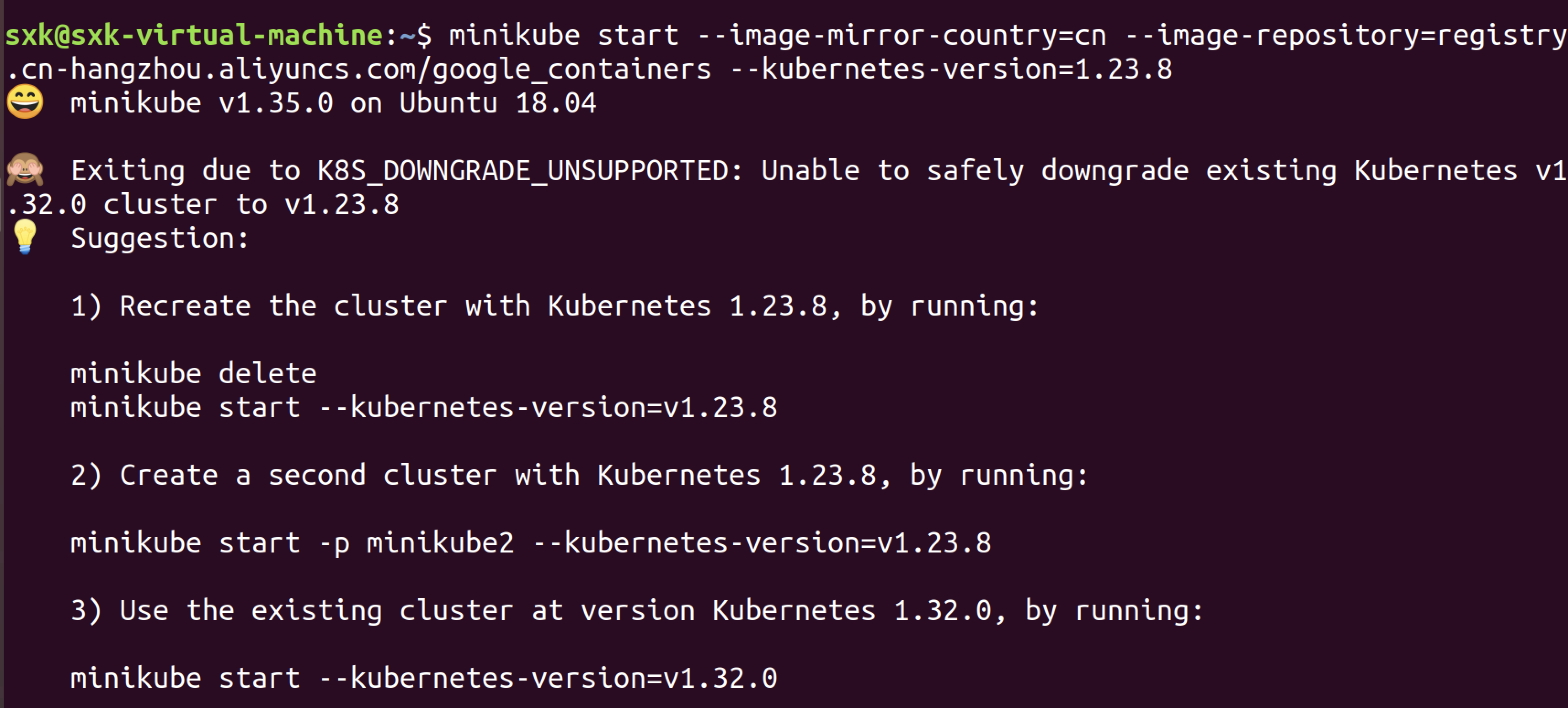

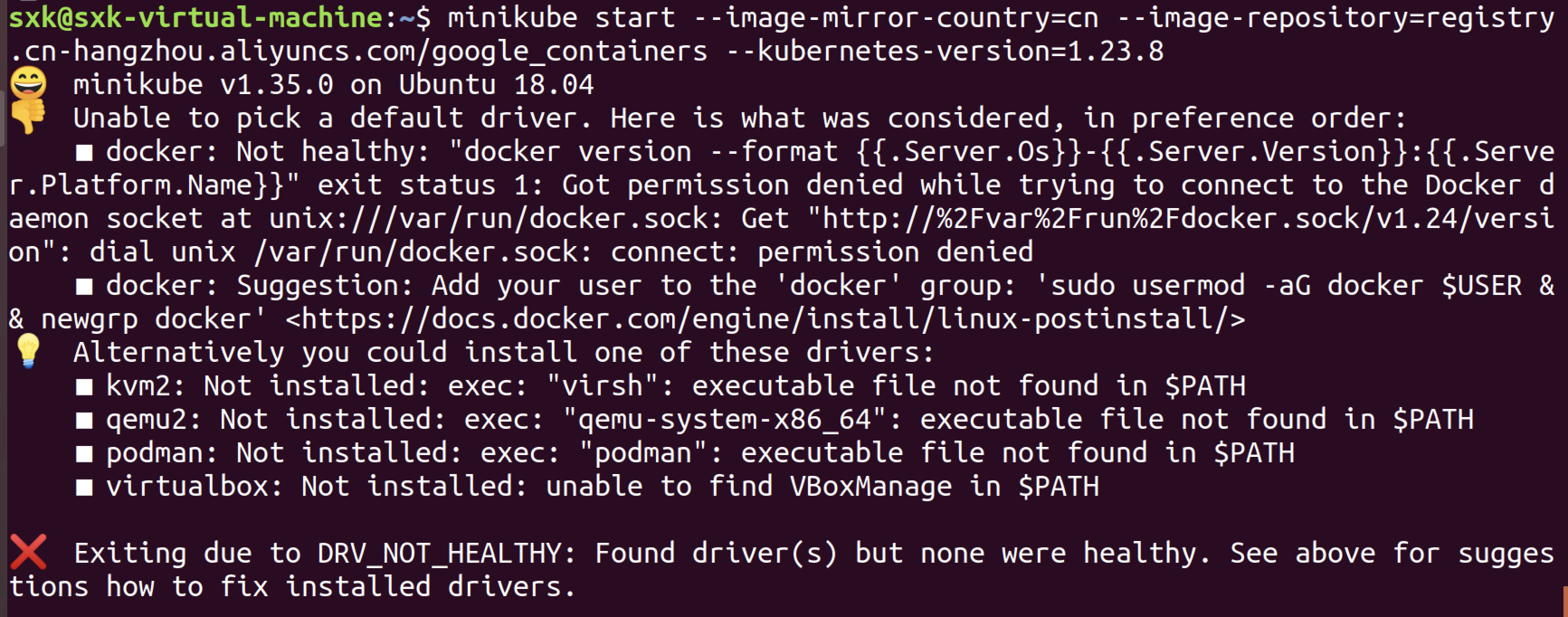

minikube start --image-mirror-country=cn --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --kubernetes-version=1.23.8

minikube failed to start...

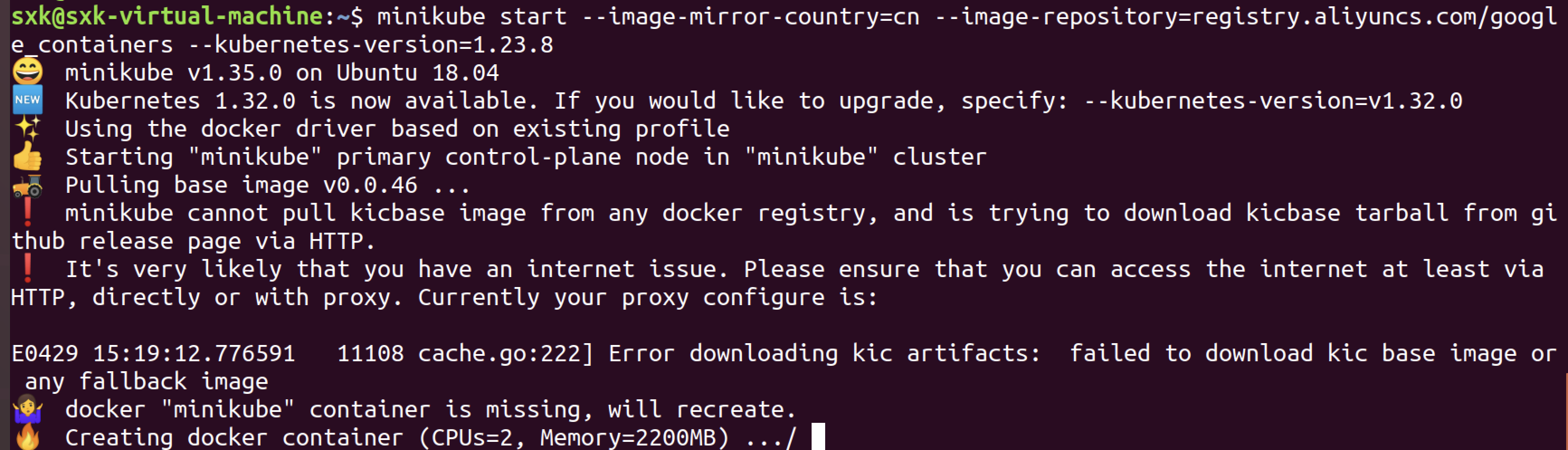

3.2 Attempt 2 [failed ❌: images could not be pulled]

3.2.1 Installing Docker and minikube

Clash on the host machine was switched to global mode.

sudo apt install docker.io

sudo systemctl status docker # check the status

sudo systemctl enable docker # start Docker at boot

sudo apt-get install -y apt-transport-https # install dependencies

sudo apt-get install -y kubectl # install kubectl (fails: package not found)

sudo apt-get install curl

sudo curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

sudo tee /etc/apt/sources.list.d/kubernetes.list <<-'EOF'

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

sudo apt-get update

sudo apt-get install -y kubectl # install kubectl again

sudo usermod -aG docker $USER && newgrp docker # add the user to the docker group

curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64 # download minikube

sudo install minikube-linux-amd64 /usr/local/bin/minikube # install minikube

minikube version

minikube start --image-mirror-country=cn --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --kubernetes-version=1.23.8 # start minikube

# Note: when using Docker, prefer a k8s version below 1.24. Starting with 1.24, k8s no longer supports Docker directly (dockershim was removed), and cri-dockerd must be installed.
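The 1.24 boundary from the note above can be checked with a version comparison. A minimal sketch using GNU sort -V; needs_cri_dockerd is a hypothetical helper.

```shell
# needs_cri_dockerd VERSION
# Returns 0 if Kubernetes VERSION (e.g. "1.23.8" or "v1.24.0") is 1.24 or
# later, i.e. dockershim is gone and Docker requires cri-dockerd.
needs_cri_dockerd() {
  v="${1#v}"
  lowest=$(printf '%s\n%s\n' "$v" "1.24.0" | sort -V | head -n 1)
  [ "$lowest" = "1.24.0" ]
}
```

For example: if needs_cri_dockerd "$K8S_VERSION"; then echo "install cri-dockerd first"; fi (K8S_VERSION is a placeholder variable).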

The error output is as follows:

sxk@sxk-virtual-machine:~$ minikube start --image-mirror-country=cn --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --kubernetes-version=1.23.8

😄 minikube v1.35.0 on Ubuntu 18.04

✨ Automatically selected the docker driver. Other choices: none, ssh

✅ Using image repository registry.cn-hangzhou.aliyuncs.com/google_containers

📌 Using Docker driver with root privileges

👍 Starting "minikube" primary control-plane node in "minikube" cluster

🚜 Pulling base image v0.0.46 ...

❗ minikube cannot pull kicbase image from any docker registry, and is trying to download kicbase tarball from github release page via HTTP.

❗ It's very likely that you have an internet issue. Please ensure that you can access the internet at least via HTTP, directly or with proxy. Currently your proxy configure is:

E0429 15:08:12.908813 10156 cache.go:222] Error downloading kic artifacts: failed to download kic base image or any fallback image

🔥 Creating docker container (CPUs=2, Memory=2200MB) ...

🤦 StartHost failed, but will try again: creating host: create: creating: setting up container node: preparing volume for minikube container: docker run --rm --name minikube-preload-sidecar --label created_by.minikube.sigs.k8s.io=true --label name.minikube.sigs.k8s.io=minikube --entrypoint /usr/bin/test -v minikube:/var registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.46@sha256:fd2d445ddcc33ebc5c6b68a17e6219ea207ce63c005095ea1525296da2d1a279 -d /var/lib: exit status 125

stdout:

stderr:

Unable to find image 'registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.46@sha256:fd2d445ddcc33ebc5c6b68a17e6219ea207ce63c005095ea1525296da2d1a279' locally

docker: Error response from daemon: manifest for registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase@sha256:fd2d445ddcc33ebc5c6b68a17e6219ea207ce63c005095ea1525296da2d1a279 not found: manifest unknown: manifest unknown.

See 'docker run --help'.

🤷 docker "minikube" container is missing, will recreate.

🔥 Creating docker container (CPUs=2, Memory=2200MB) ...

😿 Failed to start docker container. Running "minikube delete" may fix it: recreate: creating host: create: creating: setting up container node: preparing volume for minikube container: docker run --rm --name minikube-preload-sidecar --label created_by.minikube.sigs.k8s.io=true --label name.minikube.sigs.k8s.io=minikube --entrypoint /usr/bin/test -v minikube:/var registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.46@sha256:fd2d445ddcc33ebc5c6b68a17e6219ea207ce63c005095ea1525296da2d1a279 -d /var/lib: exit status 125

stdout:

stderr:

Unable to find image 'registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.46@sha256:fd2d445ddcc33ebc5c6b68a17e6219ea207ce63c005095ea1525296da2d1a279' locally

docker: Error response from daemon: manifest for registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase@sha256:fd2d445ddcc33ebc5c6b68a17e6219ea207ce63c005095ea1525296da2d1a279 not found: manifest unknown: manifest unknown.

See 'docker run --help'.

❌ Exiting due to GUEST_PROVISION: error provisioning guest: Failed to start host: recreate: creating host: create: creating: setting up container node: preparing volume for minikube container: docker run --rm --name minikube-preload-sidecar --label created_by.minikube.sigs.k8s.io=true --label name.minikube.sigs.k8s.io=minikube --entrypoint /usr/bin/test -v minikube:/var registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.46@sha256:fd2d445ddcc33ebc5c6b68a17e6219ea207ce63c005095ea1525296da2d1a279 -d /var/lib: exit status 125

stdout:

stderr:

Unable to find image 'registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.46@sha256:fd2d445ddcc33ebc5c6b68a17e6219ea207ce63c005095ea1525296da2d1a279' locally

docker: Error response from daemon: manifest for registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase@sha256:fd2d445ddcc33ebc5c6b68a17e6219ea207ce63c005095ea1525296da2d1a279 not found: manifest unknown: manifest unknown.

See 'docker run --help'.

╭───────────────────────────────────────────────────────────────────────────────────────────╮

│ │

│ 😿 If the above advice does not help, please let us know: │

│ 👉 https://github.com/kubernetes/minikube/issues/new/choose │

│ │

│ Please run `minikube logs --file=logs.txt` and attach logs.txt to the GitHub issue. │

│ │

╰───────────────────────────────────────────────────────────────────────────────────────────╯

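The core error is that the kicbase image could not be pulled (manifest unknown), so the minikube container could not be created. The message shows that the mirror has no manifest for the exact sha256 digest that minikube requests.

- 1) Try a different image repository:

registry.aliyuncs.com/google_containers

This did not help.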

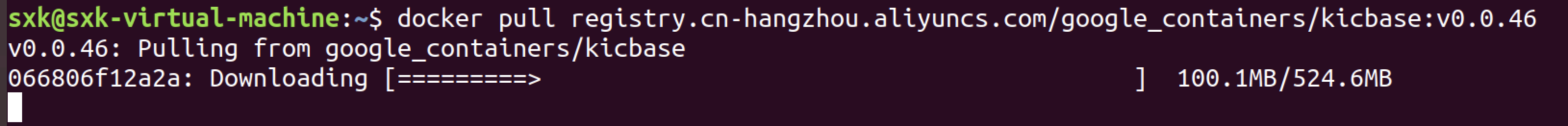

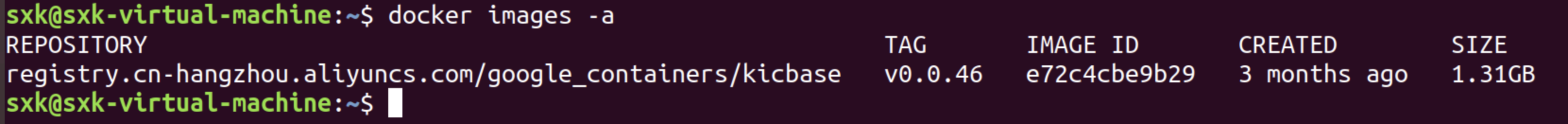

- 2) Try pulling the image first, then start minikube with that image specified

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.46

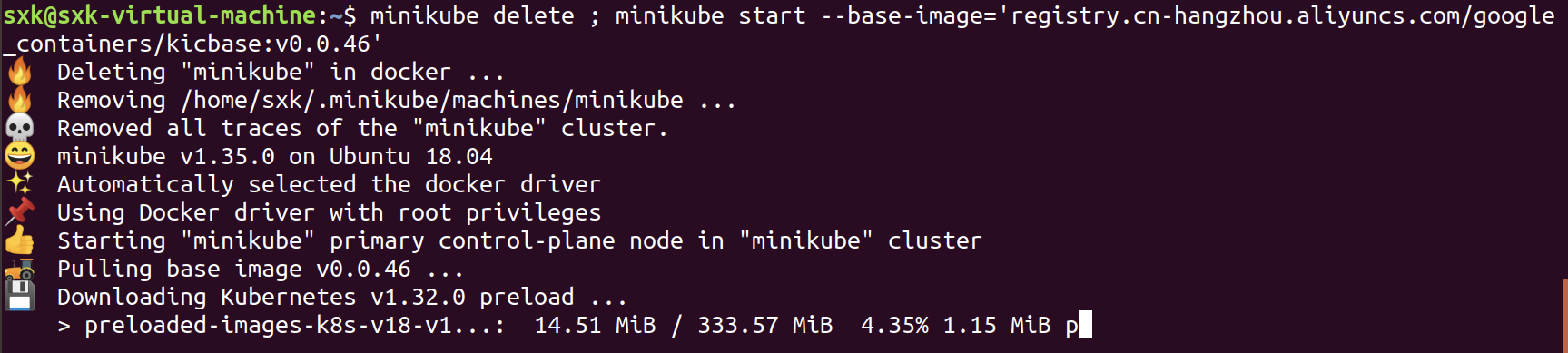

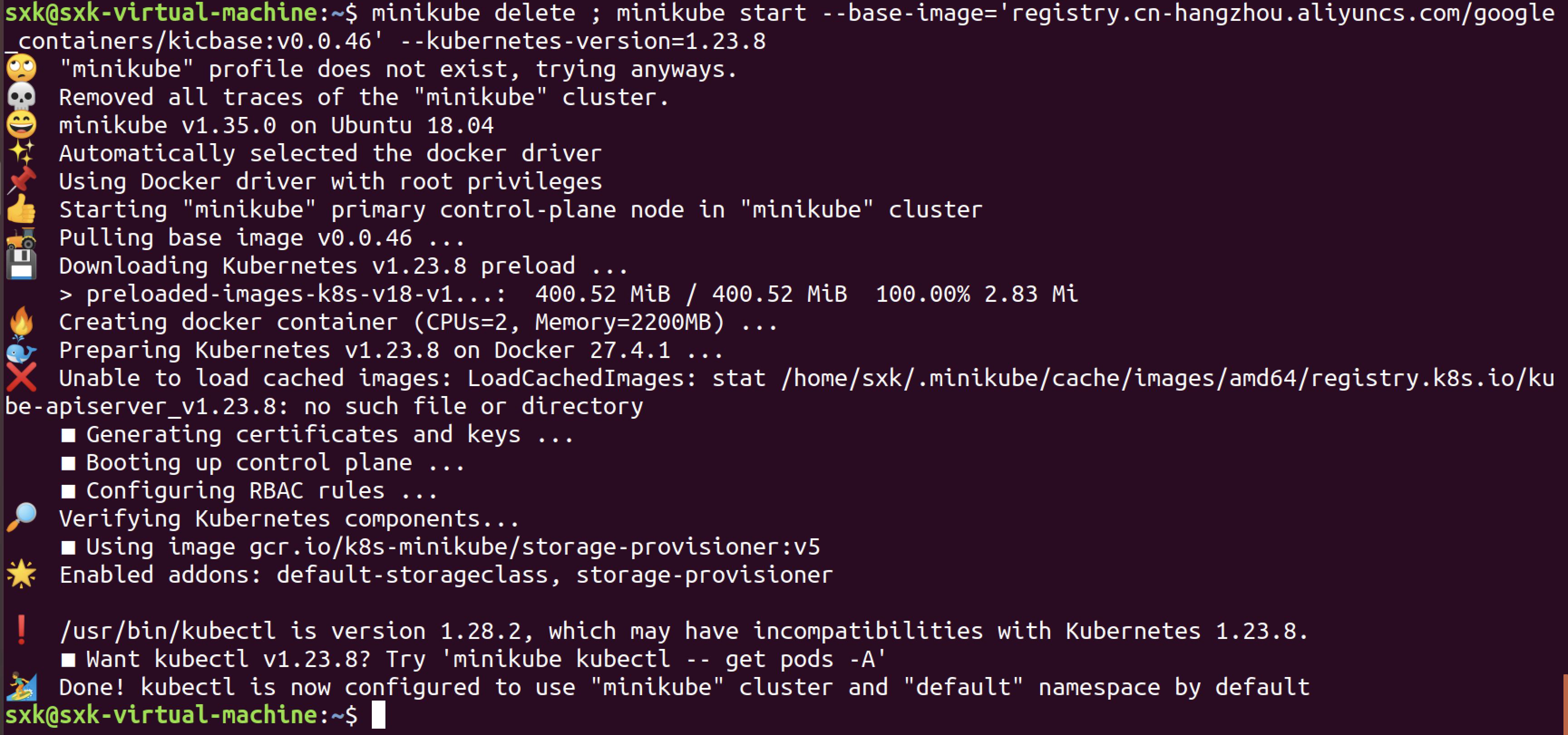

minikube delete ; minikube start --force --memory=1690mb --base-image='registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.46'

# --force is required when running the docker driver as root

# --memory=1690mb limits memory because the machine lacked resources; it can be omitted

# --base-image sets the base image used by minikube start; use the same image you pulled with docker pull above

minikube delete ; minikube start --base-image='registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.46'

The Pulling base image step now completes immediately.

Start the k8s cluster with the specified base image:

minikube delete ; minikube start --base-image='registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.46' --kubernetes-version=1.23.8

sxk@sxk-virtual-machine:~$ minikube status

minikube

type: Control Plane

host: Running

kubelet: Running

apiserver: Running

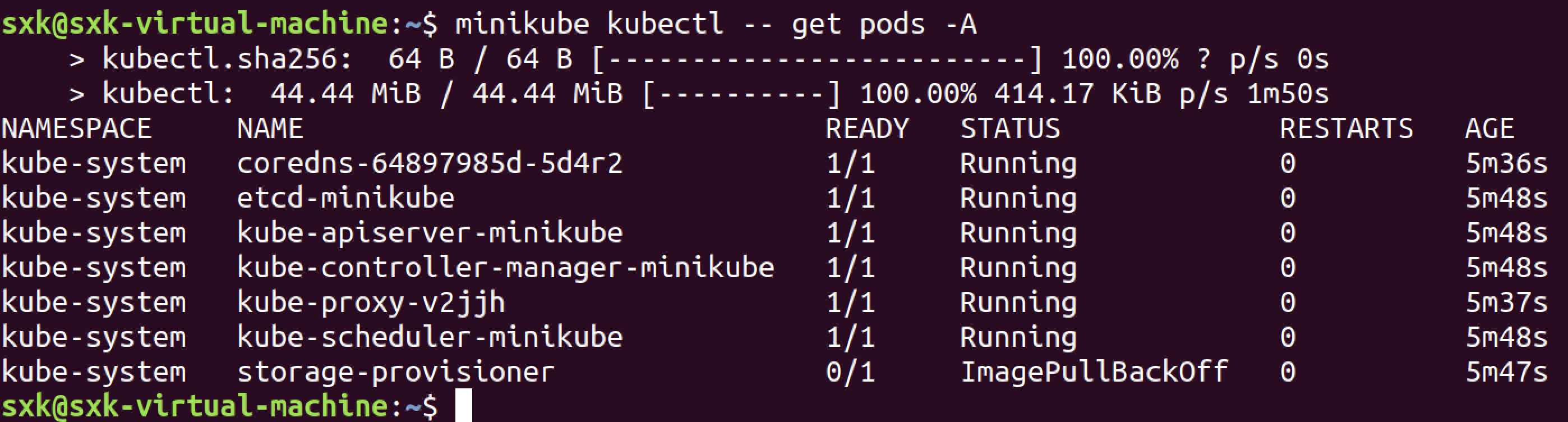

kubeconfig: Configured

minikube kubectl -- get pods -A

sxk@sxk-virtual-machine:~$ minikube kubectl get node

NAME STATUS ROLES AGE VERSION

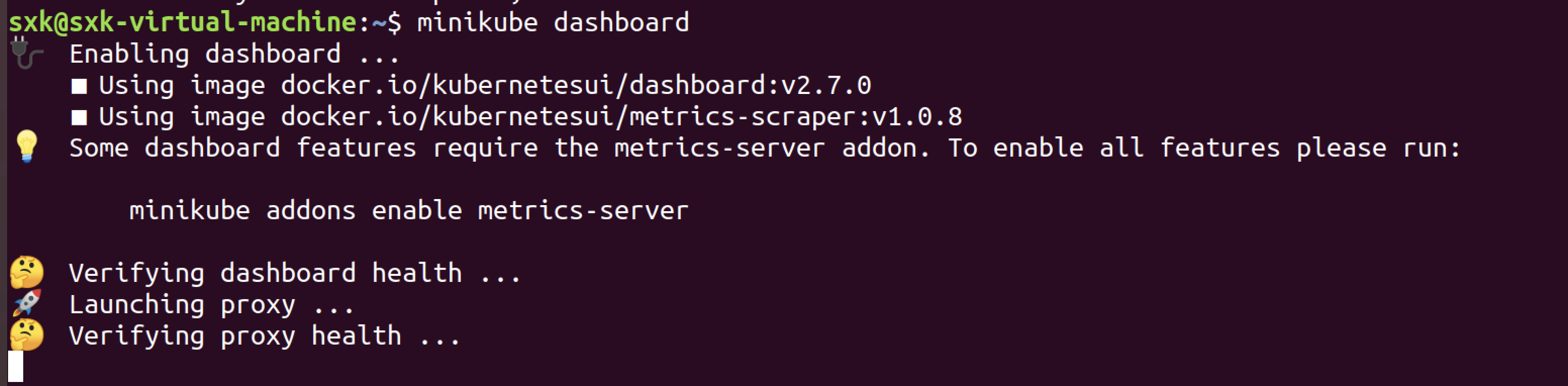

minikube Ready control-plane,master 7m50s v1.23.8

Next, try starting the dashboard.

It kept hanging at the final step, Verifying proxy health …

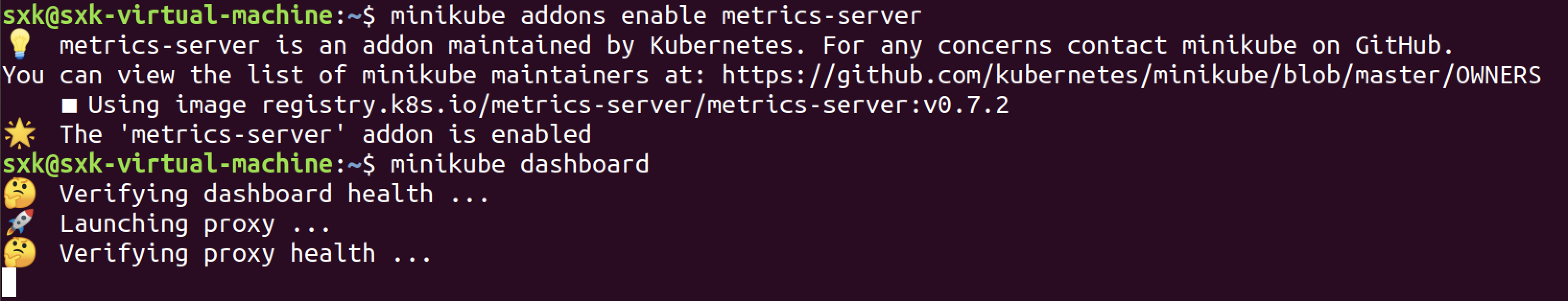

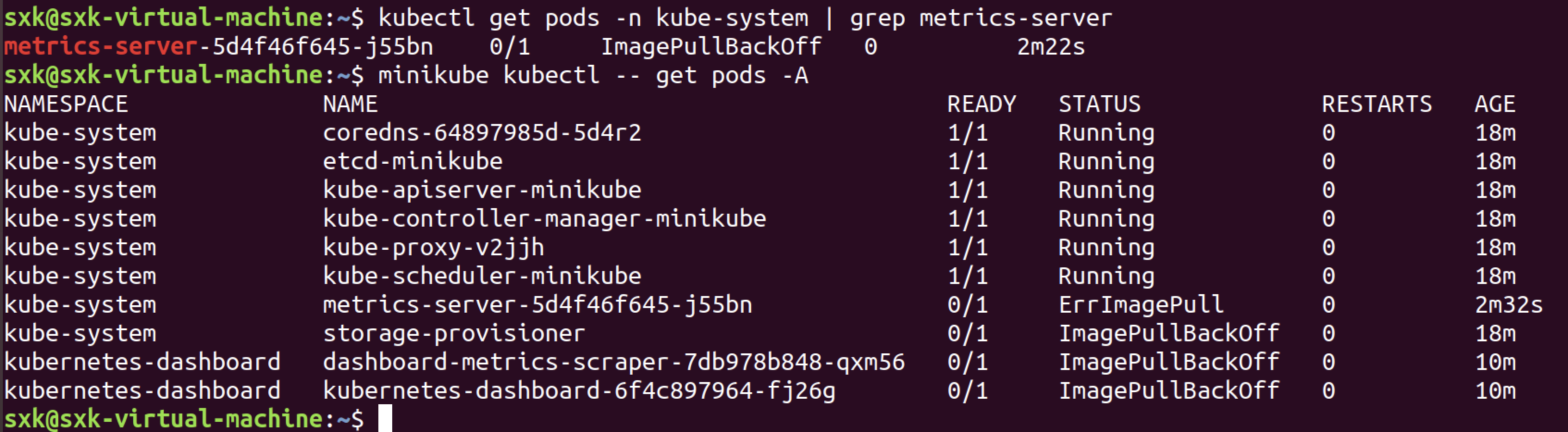

minikube addons enable metrics-server

ImagePullBackOff(镜像重试拉取仍失败)和ErrImagePull(镜像拉取失败)通常是由于镜像拉取失败导致的。

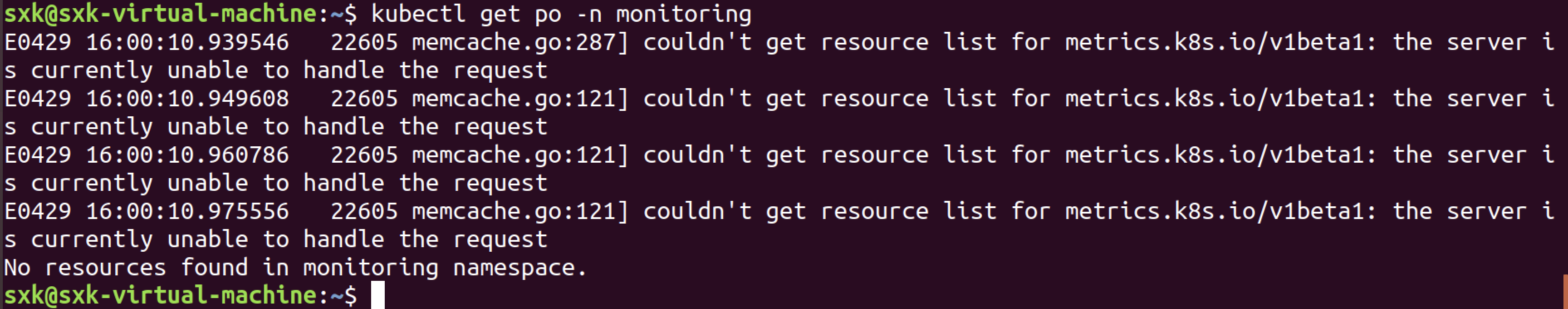

查询pod详细报错内容,kubectl get po -n monitoring。

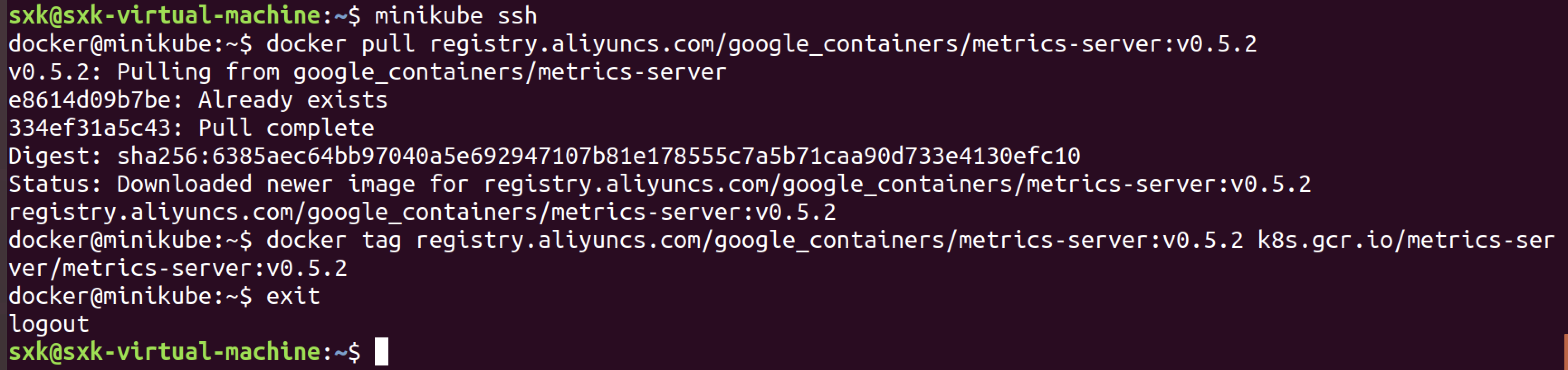

手动拉取国内镜像(替换默认镜像源):

但是仍然没解决问题。

先搁置相关的pod。
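For reference, the manual mirror pull mentioned above usually follows a pull, retag, load pattern: pull the image from the mirror, tag it with the name the Pod spec expects, and, with minikube's docker driver, load it into the minikube node, which runs its own container runtime separate from the host's Docker. This sketch assumes the mirror flattens upstream paths to name:tag under registry.cn-hangzhou.aliyuncs.com/google_containers, which holds for many but not all images; mirror_image, pull_via_mirror and MIRROR_PREFIX are hypothetical names. The Pod must also not use imagePullPolicy: Always (the default for :latest tags), or kubelet will try the original registry again.

```shell
# Hypothetical mirror prefix; override if you use a different mirror.
MIRROR_PREFIX="${MIRROR_PREFIX:-registry.cn-hangzhou.aliyuncs.com/google_containers}"

# mirror_image: map an upstream image reference to the mirror by keeping
# only its last path component (name:tag).
mirror_image() {
  echo "$MIRROR_PREFIX/${1##*/}"
}

# pull_via_mirror: pull from the mirror, retag to the upstream name, and
# load the result into the minikube node.
pull_via_mirror() {
  src=$(mirror_image "$1")
  docker pull "$src" && docker tag "$src" "$1" && minikube image load "$1"
}
```

Usage: pull_via_mirror <image-name-from-kubectl-describe>, then delete the failing Pod so that it is recreated with the local image.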

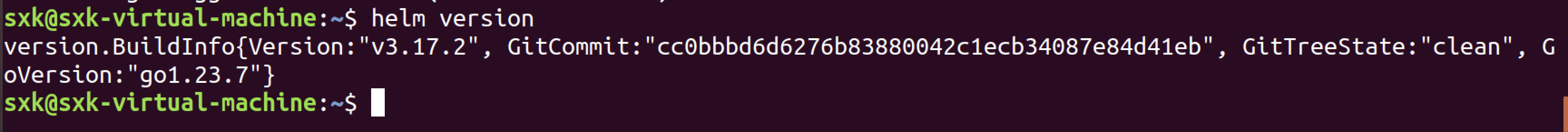

3.2.2 Installing Helm

curl https://baltocdn.com/helm/signing.asc | gpg --dearmor | sudo tee /usr/share/keyrings/helm.gpg > /dev/null

sudo apt-get install apt-transport-https --yes

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/helm.gpg] https://baltocdn.com/helm/stable/debian/ all main" | sudo tee /etc/apt/sources.list.d/helm-stable-debian.list

sudo apt-get update

sudo apt-get install helm

3.2.3 Installing the Kubernetes Goat lab

git clone https://github.com/madhuakula/kubernetes-goat.git

cd kubernetes-goat

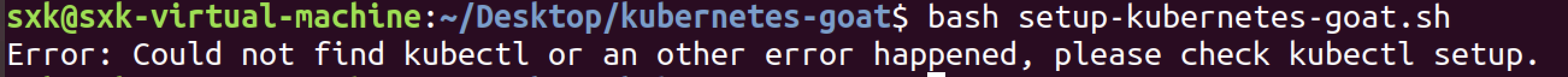

bash setup-kubernetes-goat.sh

Error output:

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat-master$ bash setup-kubernetes-goat.sh

kubectl setup looks good.

deploying insecure super admin scenario

E0429 18:11:19.085551 42958 memcache.go:287] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

E0429 18:11:19.092848 42958 memcache.go:121] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

serviceaccount/superadmin unchanged

clusterrolebinding.rbac.authorization.k8s.io/superadmin unchanged

deploying helm chart metadata-db scenario

Error: INSTALLATION FAILED: cannot re-use a name that is still in use

deploying the vulnerable scenarios manifests

E0429 18:11:19.403558 42970 memcache.go:287] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

E0429 18:11:19.421320 42970 memcache.go:121] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

job.batch/batch-check-job unchanged

E0429 18:11:19.659662 42975 memcache.go:287] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

E0429 18:11:19.681922 42975 memcache.go:121] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

deployment.apps/build-code-deployment unchanged

service/build-code-service unchanged

E0429 18:11:19.931541 42980 memcache.go:287] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

E0429 18:11:19.952041 42980 memcache.go:121] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

namespace/secure-middleware unchanged

service/cache-store-service unchanged

deployment.apps/cache-store-deployment unchanged

E0429 18:11:20.207775 42985 memcache.go:287] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

E0429 18:11:20.221211 42985 memcache.go:121] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

deployment.apps/health-check-deployment unchanged

service/health-check-service unchanged

E0429 18:11:20.457378 42990 memcache.go:287] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

E0429 18:11:20.458566 42990 memcache.go:121] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

namespace/big-monolith unchanged

role.rbac.authorization.k8s.io/secret-reader unchanged

rolebinding.rbac.authorization.k8s.io/secret-reader-binding unchanged

serviceaccount/big-monolith-sa unchanged

secret/vaultapikey unchanged

secret/webhookapikey unchanged

deployment.apps/hunger-check-deployment unchanged

service/hunger-check-service unchanged

E0429 18:11:20.717991 42995 memcache.go:287] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

E0429 18:11:20.745143 42995 memcache.go:121] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

deployment.apps/internal-proxy-deployment unchanged

service/internal-proxy-api-service unchanged

service/internal-proxy-info-app-service unchanged

E0429 18:11:20.995607 43006 memcache.go:287] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

E0429 18:11:21.011048 43006 memcache.go:121] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

deployment.apps/kubernetes-goat-home-deployment unchanged

service/kubernetes-goat-home-service unchanged

E0429 18:11:21.278312 43011 memcache.go:287] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

E0429 18:11:21.296089 43011 memcache.go:121] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

deployment.apps/poor-registry-deployment unchanged

service/poor-registry-service unchanged

E0429 18:11:21.506289 43016 memcache.go:287] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

E0429 18:11:21.529847 43016 memcache.go:121] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

secret/goatvault unchanged

deployment.apps/system-monitor-deployment unchanged

service/system-monitor-service unchanged

E0429 18:11:21.826557 43021 memcache.go:287] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

E0429 18:11:21.849136 43021 memcache.go:121] couldn't get resource list for metrics.k8s.io/v1beta1: the server is currently unable to handle the request

job.batch/hidden-in-layers unchanged

Successfully deployed Kubernetes Goat. Have fun learning Kubernetes Security!

Ensure pods are in running status before running access-kubernetes-goat.sh script

Now run the bash access-kubernetes-goat.sh to access the Kubernetes Goat environment.

但是最后的信息提示部署成功了。

Successfully deployed Kubernetes Goat. Have fun learning Kubernetes Security!

Ensure pods are in running status before running access-kubernetes-goat.sh script

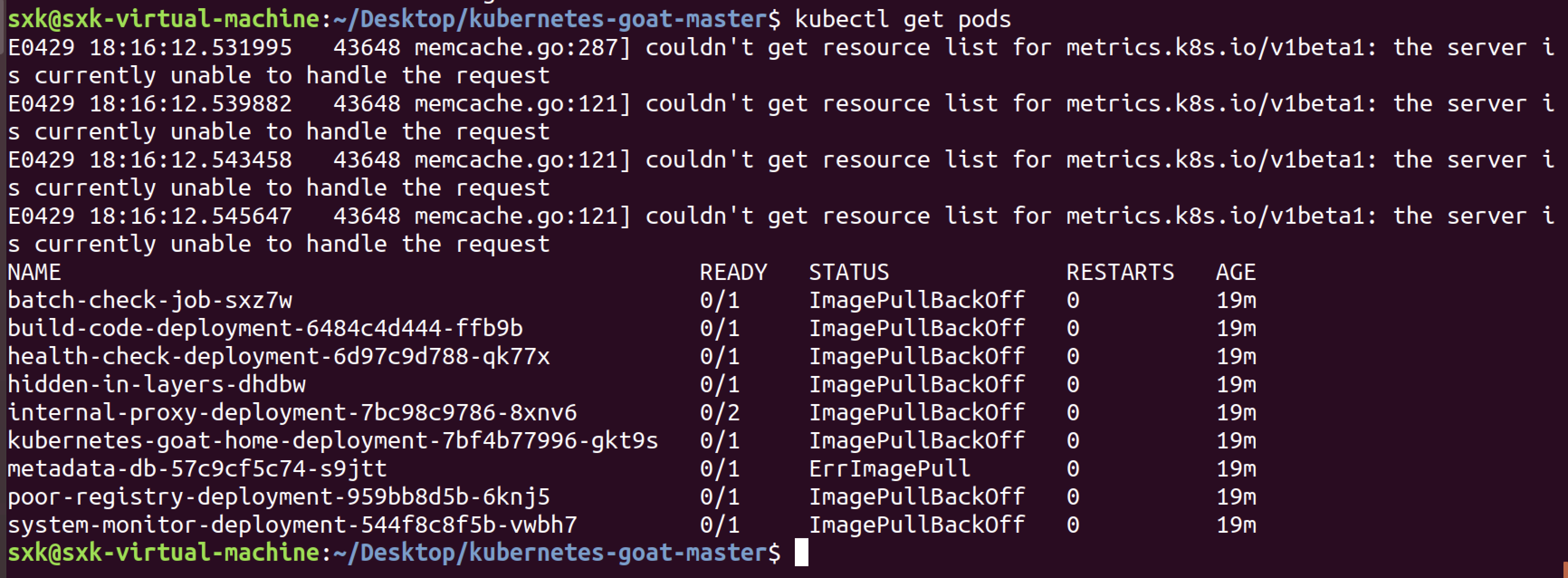

Now run the bash access-kubernetes-goat.sh to access the Kubernetes Goat environment.但是查看pods发现很多镜像都没拉取成功。

3.3 Attempt 3 [Failed ❌]

Getting around the network restrictions with a proxy client:

- clash

https://devpn.com/docs/start/ubuntu/clash/

sudo dpkg -i Clash.Verge_2.0.3_amd64.deb

This fails with:

sxk@sxk-virtual-machine:~/Desktop/fanqiang$ sudo dpkg -i Clash.Verge_2.0.3_amd64.deb
[sudo] password for sxk:
Selecting previously unselected package clash-verge.
(Reading database ... 141659 files and directories currently installed.)
Preparing to unpack Clash.Verge_2.0.3_amd64.deb ...
Unpacking clash-verge (2.0.3) ...
dpkg: dependency problems prevent configuration of clash-verge:
clash-verge depends on libayatana-appindicator3-1; however:
Package libayatana-appindicator3-1 is not installed.
clash-verge depends on libwebkit2gtk-4.1-0; however:
Package libwebkit2gtk-4.1-0 is not installed.
dpkg: error processing package clash-verge (--install):
dependency problems - leaving unconfigured
Processing triggers for gnome-menus (3.13.3-11ubuntu1.1) ...
Processing triggers for desktop-file-utils (0.23-1ubuntu3.18.04.2) ...
Processing triggers for mime-support (3.60ubuntu1) ...
Processing triggers for hicolor-icon-theme (0.17-2) ...
Errors were encountered while processing:
clash-verge

Attempted fix:

sudo apt-get install -f
sudo apt install libayatana-appindicator3-1

Running dpkg again still fails:

sxk@sxk-virtual-machine:~/Desktop/fanqiang$ sudo dpkg -i Clash.Verge_2.0.3_amd64.deb
Selecting previously unselected package clash-verge.
(Reading database ... 141669 files and directories currently installed.)
Preparing to unpack Clash.Verge_2.0.3_amd64.deb ...
Unpacking clash-verge (2.0.3) ...
dpkg: dependency problems prevent configuration of clash-verge:
clash-verge depends on libwebkit2gtk-4.1-0; however:
Package libwebkit2gtk-4.1-0 is not installed.
dpkg: error processing package clash-verge (--install):
dependency problems - leaving unconfigured
Processing triggers for gnome-menus (3.13.3-11ubuntu1.1) ...
Processing triggers for desktop-file-utils (0.23-1ubuntu3.18.04.2) ...
Processing triggers for mime-support (3.60ubuntu1) ...
Processing triggers for hicolor-icon-theme (0.17-2) ...
Errors were encountered while processing:
clash-verge

The Ubuntu 18.04 repositories that this VM uses do not provide libwebkit2gtk-4.1-0. The package is available in newer releases such as Ubuntu 22.04 "jammy", so the next attempt temporarily adds the jammy repository:

sudo tee /etc/apt/sources.list.d/ubuntu-jammy.sources <<EOF
Types: deb
URIs: http://archive.ubuntu.com/ubuntu/
Suites: jammy
Components: main restricted universe
Signed-By: /usr/share/keyrings/ubuntu-archive-keyring.gpg
EOF
sudo apt update
sudo apt install libwebkit2gtk-4.1-0 -t jammy

This fails with:

sxk@sxk-virtual-machine:~/Desktop/fanqiang$ sudo apt install libwebkit2gtk-4.1-0 -t jammy
Reading package lists... Done
Building dependency tree
Reading state information... Done
You might want to run 'apt --fix-broken install' to correct these.
The following packages have unmet dependencies:
libwebkit2gtk-4.1-0 : Depends: libjavascriptcoregtk-4.1-0 (= 2.36.0-2ubuntu1) but it is not installable
Depends: bubblewrap (>= 0.3.1)
Depends: xdg-dbus-proxy but it is not installable
Depends: libc6 (>= 2.35) but 2.27-3ubuntu1.6 is to be installed
Depends: libcairo2 (>= 1.15.12) but 1.15.10-2ubuntu0.1 is to be installed
Depends: libenchant-2-2 (>= 2.2.3) but it is not installable
Depends: libfreetype6 (>= 2.9.1) but 2.8.1-2ubuntu2.2 is to be installed
Depends: libgcc-s1 (>= 4.0) but it is not installable
Depends: libgcrypt20 (>= 1.9.0) but 1.8.1-4ubuntu1.2 is to be installed
Depends: libgdk-pixbuf-2.0-0 (>= 2.22.0) but it is not installable
Depends: libglib2.0-0 (>= 2.70.0) but 2.56.4-0ubuntu0.18.04.9 is to be installed
Depends: libgstreamer-plugins-base1.0-0 (>= 1.20.0) but 1.14.5-0ubuntu1~18.04.3 is to be installed
Depends: libgstreamer1.0-0 (>= 1.20.0) but 1.14.5-0ubuntu1~18.04.2 is to be installed
Depends: libgtk-3-0 (>= 3.23.0) but 3.22.30-1ubuntu4 is to be installed
Depends: libicu70 (>= 70.1-1~) but it is not installable
Depends: libmanette-0.2-0 (>= 0.1.2) but it is not going to be installed
Depends: libopenjp2-7 (>= 2.2.0) but it is not going to be installed
Depends: libsoup-3.0-0 (>= 3.0.3) but it is not going to be installed
Depends: libstdc++6 (>= 11) but 8.4.0-1ubuntu1~18.04 is to be installed
Depends: libtasn1-6 (>= 4.14) but 4.13-2 is to be installed
Depends: libwayland-client0 (>= 1.20.0) but 1.16.0-1ubuntu1.1~18.04.4 is to be installed
Depends: libwebp7 but it is not installable
Depends: libxcomposite1 (>= 1:0.4.5) but 1:0.4.4-2 is to be installed
E: Unmet dependencies. Try 'apt --fix-broken install' with no packages (or specify a solution).

The installation fails. The jammy package needs much newer core libraries than Ubuntu 18.04 provides (for example libc6 >= 2.35, while 2.27 is installed), so it cannot be installed on this system.

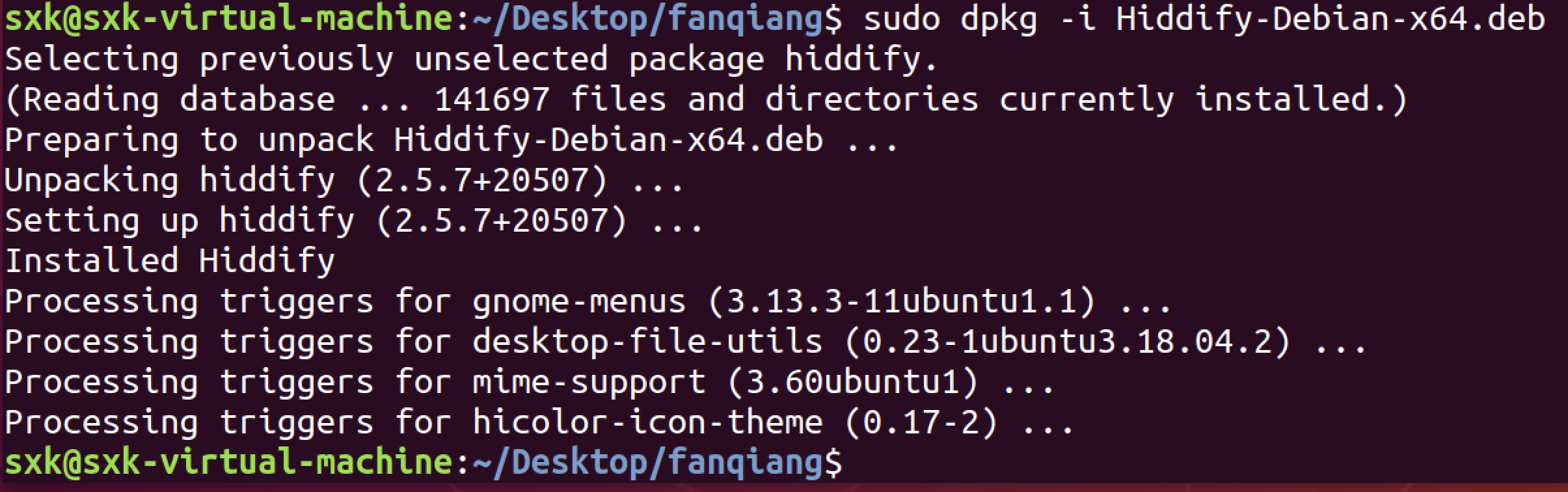

- Hiddify

https://gethiddify.com/hiddify-app/#downloads

After installation, the application would not start.

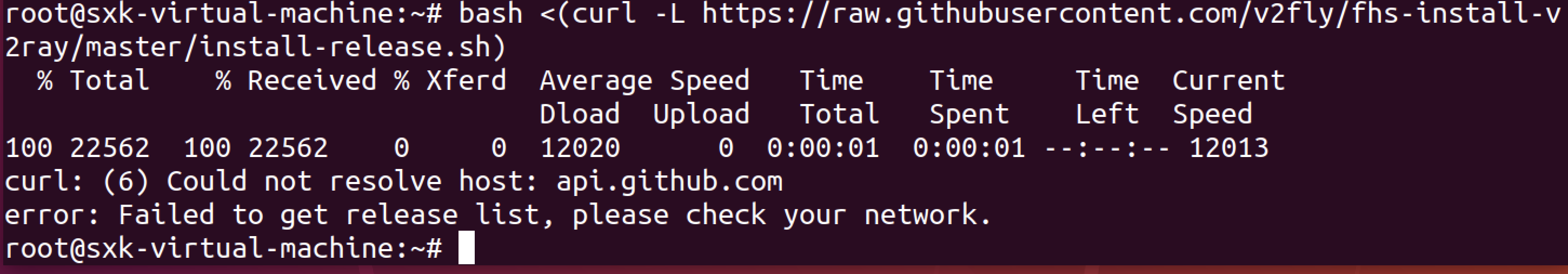

- v2ray

sxk@sxk-virtual-machine:~$ su -
Password:
root@sxk-virtual-machine:~# bash <(curl -L https://raw.githubusercontent.com/v2fly/fhs-install-v2ray/master/install-release.sh)

This failed as well.

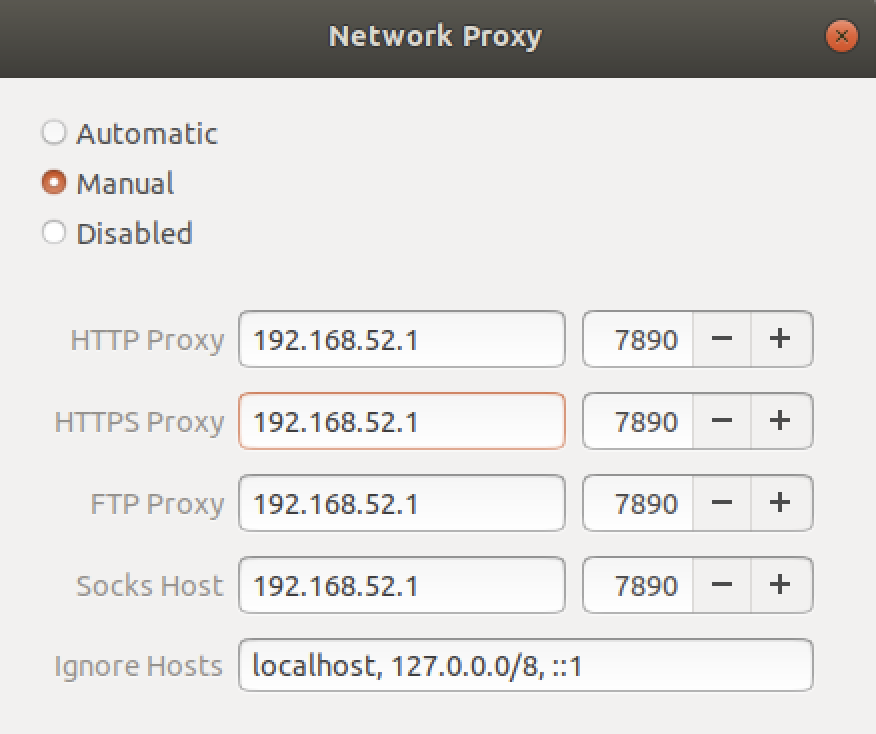

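- Sharing the host machine's VPN with the VM

Turn on the VPN client's LAN access ("allow LAN") option and note its port number.

Open the VM's network settings: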

3.3.1 Installing Docker and minikube

sudo apt install docker.io

sudo systemctl status docker # check the service status

sudo systemctl enable docker # start Docker on boot

sudo apt-get install -y apt-transport-https # install dependencies

sudo apt-get install -y kubectl # install kubectl (fails: package not found)

sudo apt-get install curl

sudo curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

sudo tee /etc/apt/sources.list.d/kubernetes.list <<-'EOF'

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

sudo apt-get update

sudo apt-get install -y kubectl # install kubectl again

sudo usermod -aG docker $USER && newgrp docker # add the user to the docker group

curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64 # download minikube

sudo install minikube-linux-amd64 /usr/local/bin/minikube # install minikube

minikube version

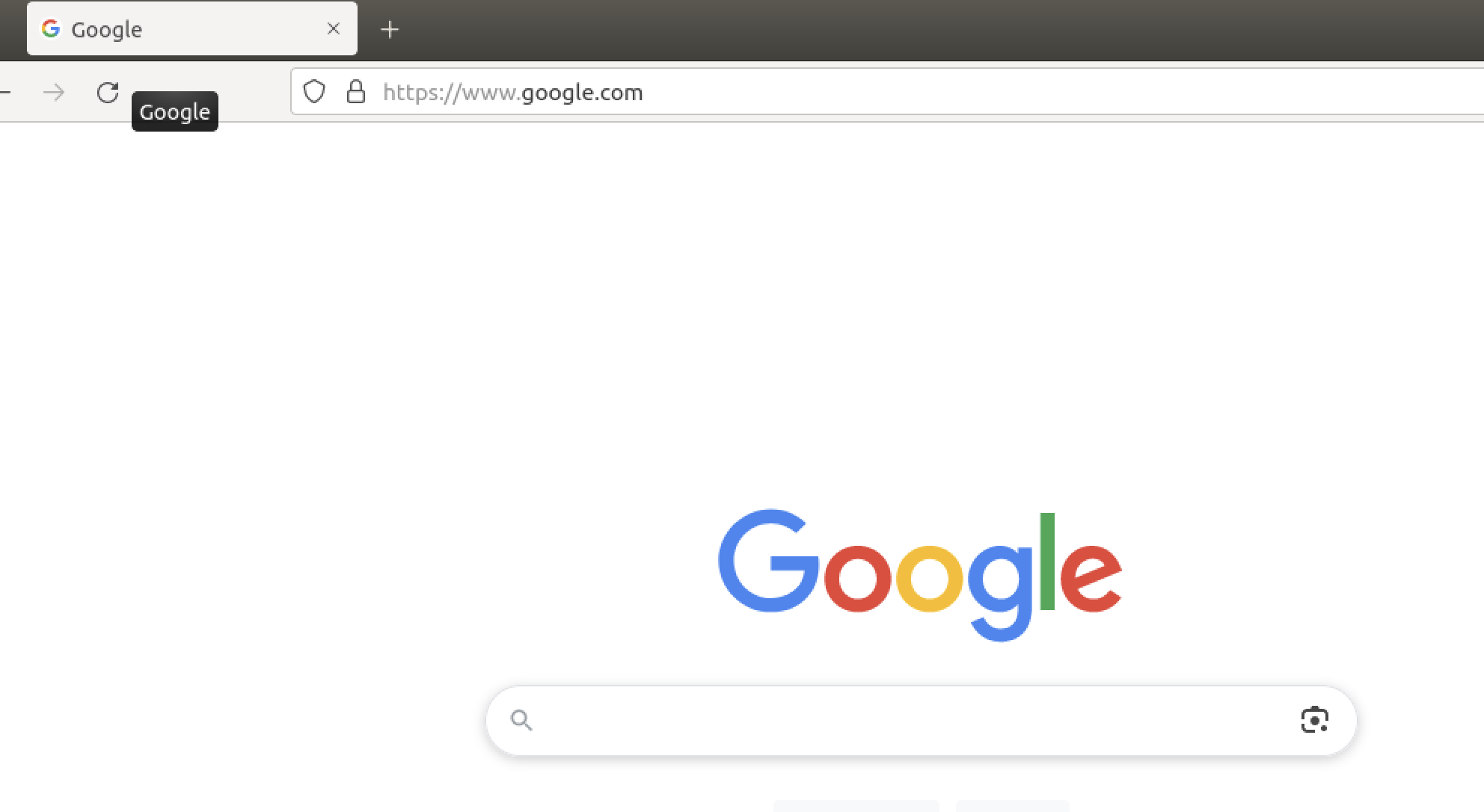

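minikube start --kubernetes-version=1.23.8 # start minikube

# Note: when using Docker as the container runtime, it is best to stay below Kubernetes 1.24. Starting with 1.24, Kubernetes no longer supports Docker directly (dockershim was removed), and you need to install cri-dockerd. Because the VM shares the host's proxy, no domestic mirror repository was specified when starting minikube.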

Even so, the base image still could not be pulled. It was the same problem as before...

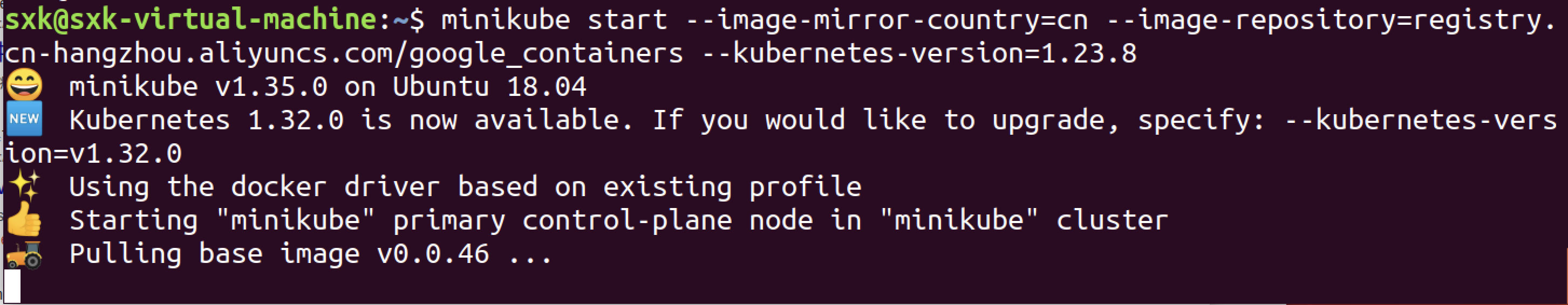

sxk@sxk-virtual-machine:~$ minikube start --image-mirror-country=cn --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --kubernetes-version=1.23.8

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.46

minikube delete ; minikube start --base-image='registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.46'

3.3.2 Installing Helm

curl https://baltocdn.com/helm/signing.asc | gpg --dearmor | sudo tee /usr/share/keyrings/helm.gpg > /dev/null

sudo apt-get install apt-transport-https --yes

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/helm.gpg] https://baltocdn.com/helm/stable/debian/ all main" | sudo tee /etc/apt/sources.list.d/helm-stable-debian.list

sudo apt-get update

sudo apt-get install helm

3.3.3 Installing the Kubernetes Goat range

git clone https://github.com/madhuakula/kubernetes-goat.git

cd kubernetes-goat

chmod +x setup-kubernetes-goat.sh

bash setup-kubernetes-goat.sh

Output:

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ bash setup-kubernetes-goat.sh

kubectl setup looks good.

deploying insecure super admin scenario

serviceaccount/superadmin created

clusterrolebinding.rbac.authorization.k8s.io/superadmin created

deploying helm chart metadata-db scenario

NAME: metadata-db

LAST DEPLOYED: Wed Apr 30 11:16:55 2025

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=metadata-db,app.kubernetes.io/instance=metadata-db" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl port-forward $POD_NAME 8080:80

deploying the vulnerable scenarios manifests

job.batch/batch-check-job created

deployment.apps/build-code-deployment created

service/build-code-service created

namespace/secure-middleware created

service/cache-store-service created

deployment.apps/cache-store-deployment created

deployment.apps/health-check-deployment created

service/health-check-service created

namespace/big-monolith created

role.rbac.authorization.k8s.io/secret-reader created

rolebinding.rbac.authorization.k8s.io/secret-reader-binding created

serviceaccount/big-monolith-sa created

secret/vaultapikey created

secret/webhookapikey created

deployment.apps/hunger-check-deployment created

service/hunger-check-service created

deployment.apps/internal-proxy-deployment created

service/internal-proxy-api-service created

service/internal-proxy-info-app-service created

deployment.apps/kubernetes-goat-home-deployment created

service/kubernetes-goat-home-service created

deployment.apps/poor-registry-deployment created

service/poor-registry-service created

secret/goatvault created

deployment.apps/system-monitor-deployment created

service/system-monitor-service created

job.batch/hidden-in-layers created

Successfully deployed Kubernetes Goat. Have fun learning Kubernetes Security!

Ensure pods are in running status before running access-kubernetes-goat.sh script

Now run the bash access-kubernetes-goat.sh to access the Kubernetes Goat environment.

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$

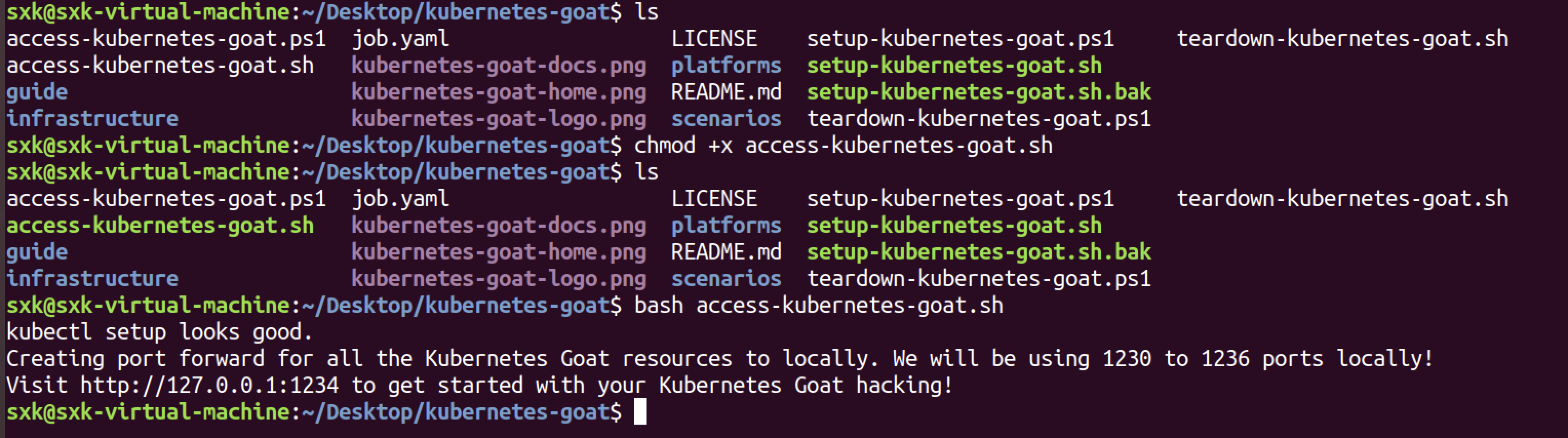

Installed successfully.

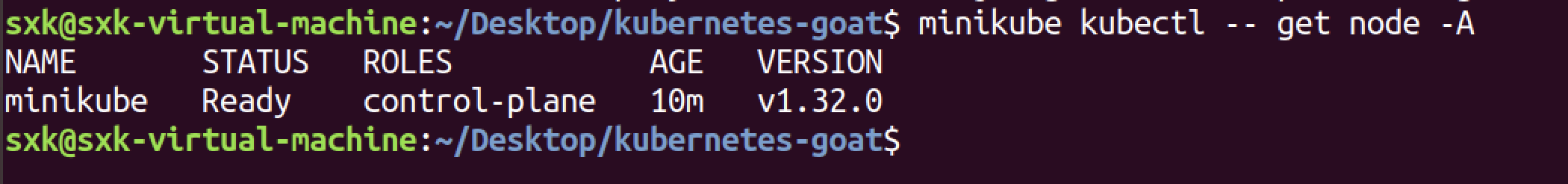

Check the nodes:

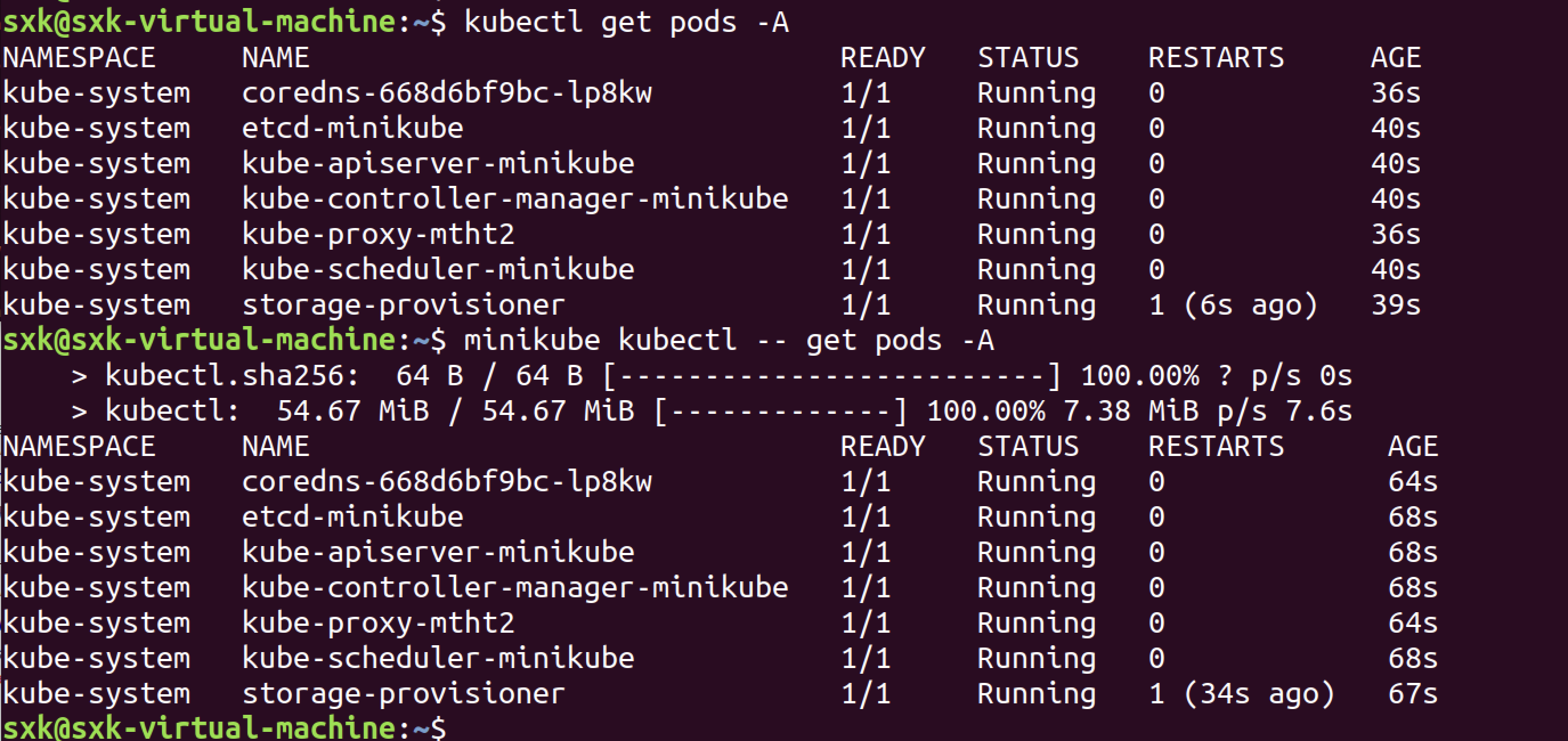

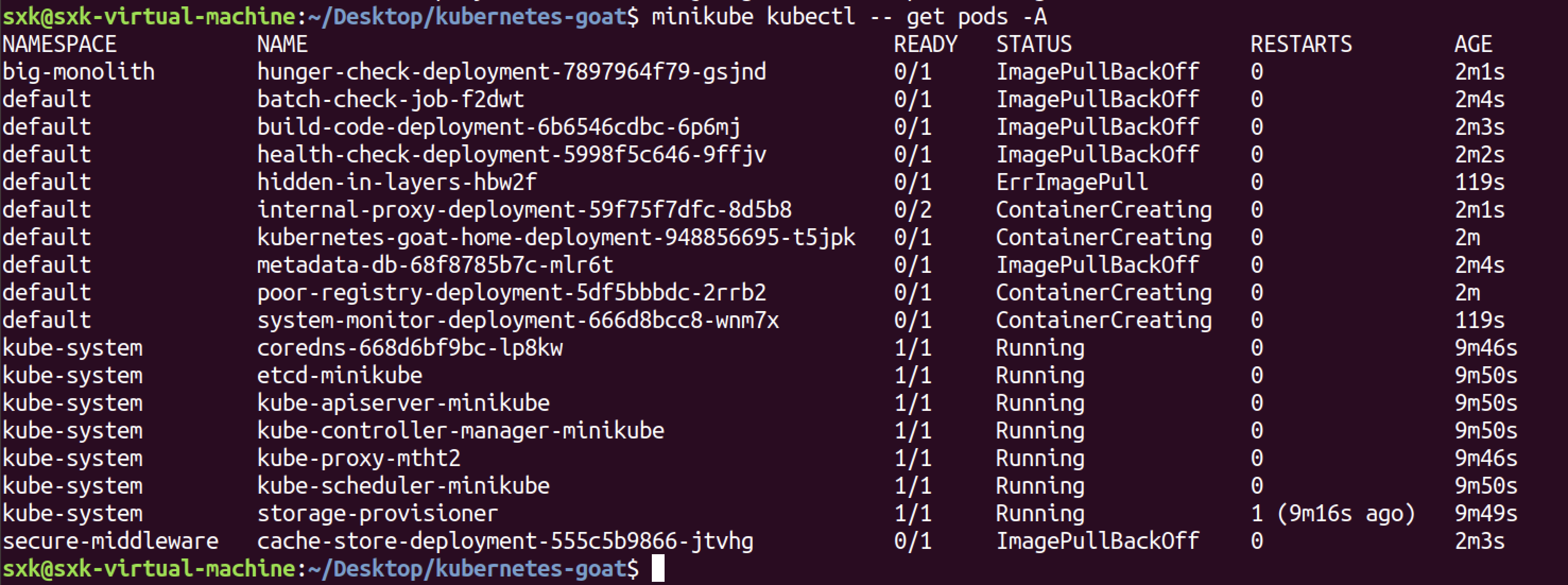

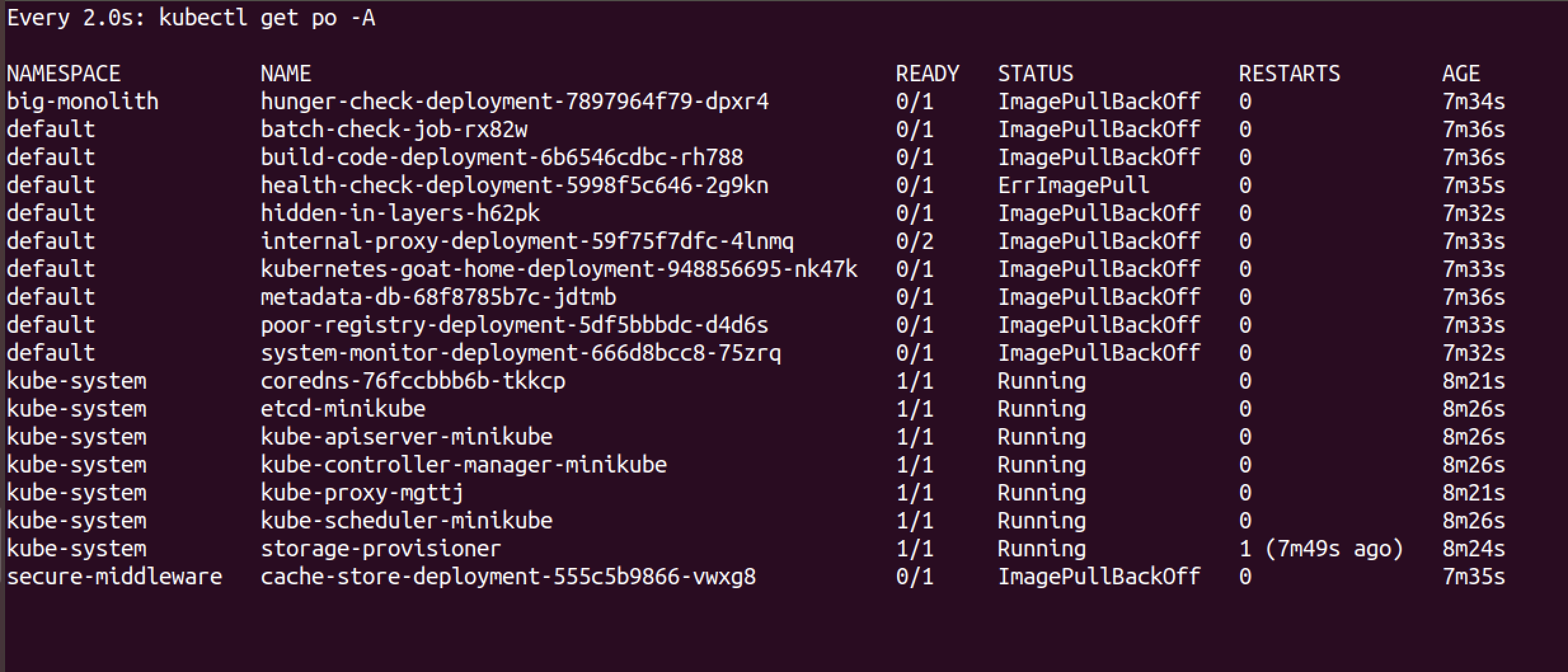

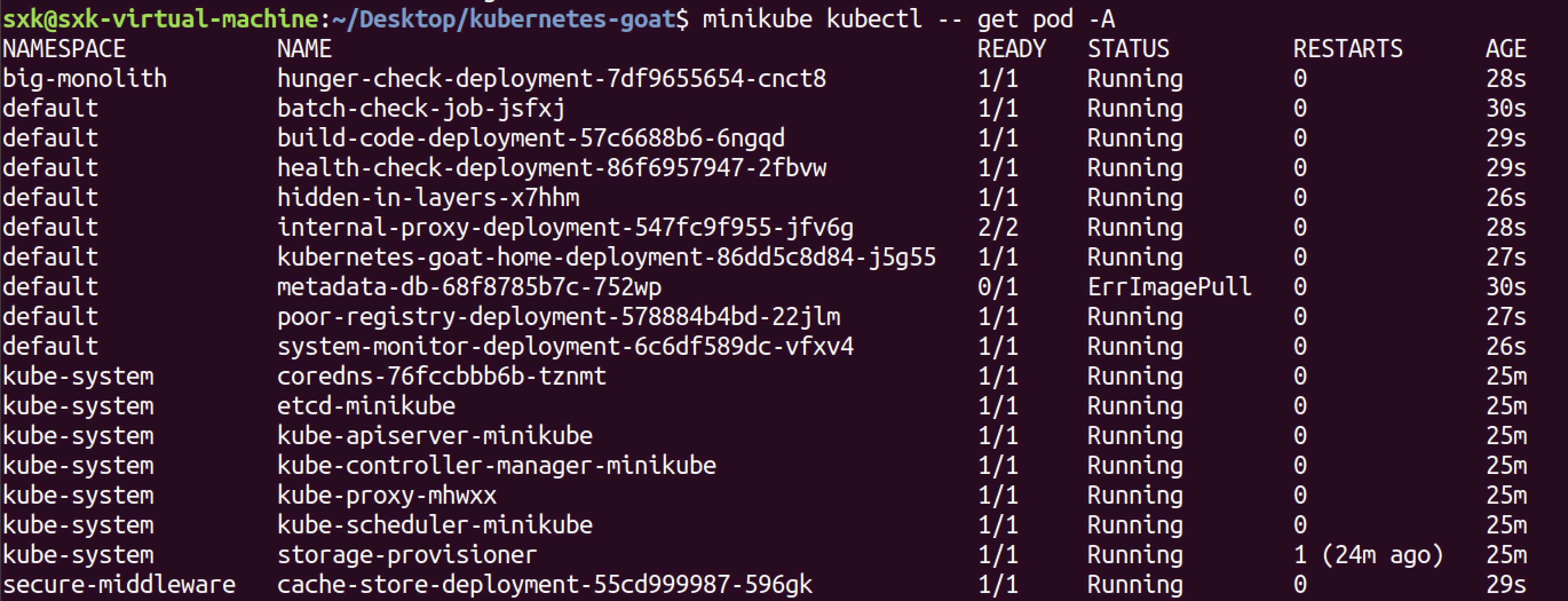

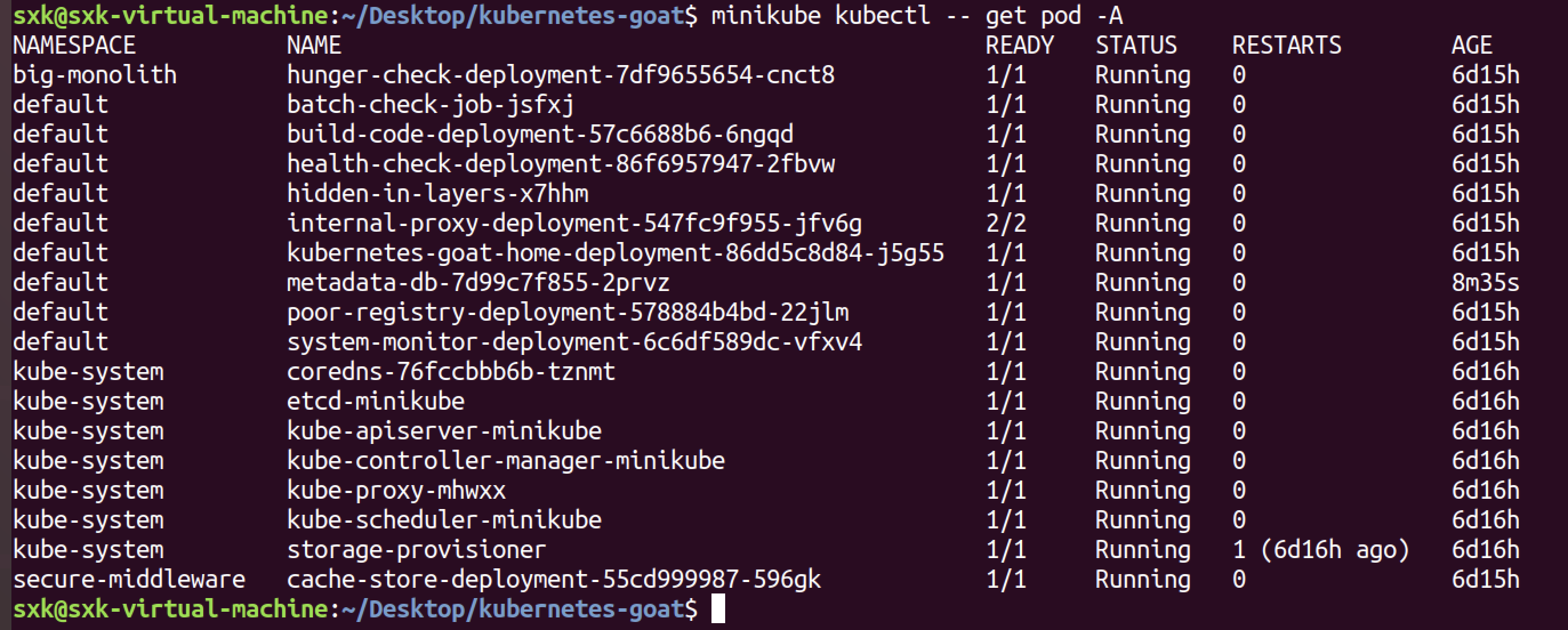

Check the pods:

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ minikube kubectl -- get node -A

NAME STATUS ROLES AGE VERSION

minikube Ready control-plane 10m v1.32.0

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ minikube kubectl -- get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

big-monolith hunger-check-deployment-7897964f79-gsjnd 0/1 ImagePullBackOff 0 3m22s

default batch-check-job-f2dwt 0/1 ErrImagePull 0 3m25s

default build-code-deployment-6b6546cdbc-6p6mj 0/1 ImagePullBackOff 0 3m24s

default health-check-deployment-5998f5c646-9ffjv 0/1 ImagePullBackOff 0 3m23s

default hidden-in-layers-hbw2f 0/1 ImagePullBackOff 0 3m20s

default internal-proxy-deployment-59f75f7dfc-8d5b8 0/2 ContainerCreating 0 3m22s

default kubernetes-goat-home-deployment-948856695-t5jpk 0/1 ImagePullBackOff 0 3m21s

default metadata-db-68f8785b7c-mlr6t 0/1 ImagePullBackOff 0 3m25s

default poor-registry-deployment-5df5bbbdc-2rrb2 0/1 ImagePullBackOff 0 3m21s

default system-monitor-deployment-666d8bcc8-wnm7x 0/1 ImagePullBackOff 0 3m20s

kube-system coredns-668d6bf9bc-lp8kw 1/1 Running 0 11m

kube-system etcd-minikube 1/1 Running 0 11m

kube-system kube-apiserver-minikube 1/1 Running 0 11m

kube-system kube-controller-manager-minikube 1/1 Running 0 11m

kube-system kube-proxy-mtht2 1/1 Running 0 11m

kube-system kube-scheduler-minikube 1/1 Running 0 11m

kube-system storage-provisioner 1/1 Running 1 (10m ago) 11m

secure-middleware cache-store-deployment-555c5b9866-jtvhg 0/1 ImagePullBackOff 0 3m24s

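Many images cannot be pulled.

In the output of minikube kubectl -- get pods -A, many pods are in ImagePullBackOff or ErrImagePull. The core components in the kube-system namespace, such as coredns and etcd, are running normally. So the minikube cluster itself is healthy, and the problem lies with the application pods.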
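A small awk filter over the kubectl get pods -A table can pick the failing pods out of a long listing. This is a minimal sketch. The function name list_pull_failures is my own. The filter assumes the default column layout that -A prints (NAMESPACE NAME READY STATUS RESTARTS AGE), which makes the status the fourth field.

```bash
#!/usr/bin/env bash
# Print "<namespace>/<pod> <status>" for every pod whose STATUS column shows an
# image-pull failure. Reads the plain-text table of `kubectl get pods -A` on stdin
# and assumes the default column order: NAMESPACE NAME READY STATUS RESTARTS AGE.
list_pull_failures() {
  awk 'NR > 1 && ($4 == "ImagePullBackOff" || $4 == "ErrImagePull") {
         print $1 "/" $2 " " $4
       }'
}

# Example:
#   minikube kubectl -- get pods -A | list_pull_failures
```

RESTARTS comes after STATUS, so values such as "1 (10m ago)" do not shift the field the filter checks.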

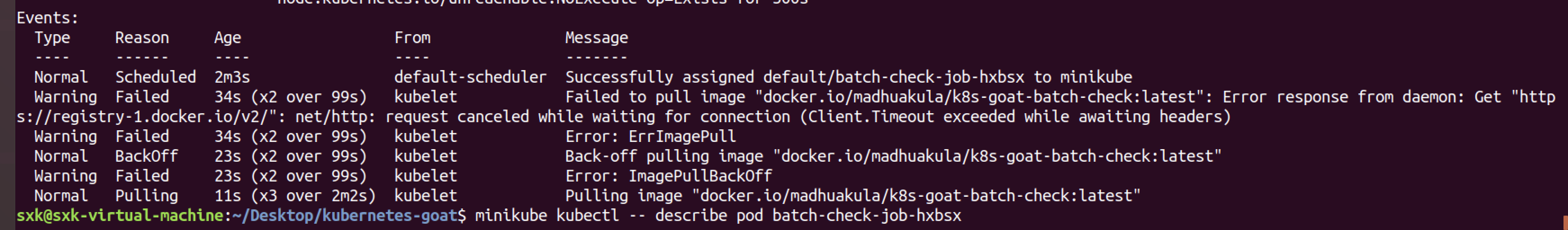

Use the following command to view a pod's detailed events and identify the type of error:

minikube kubectl -- describe pod <pod-name> -n <namespace>

minikube kubectl -- describe pod batch-check-job-f2dwt -n default

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 10m default-scheduler Successfully assigned default/batch-check-job-f2dwt to minikube

Warning Failed 4m25s kubelet Failed to pull image "madhuakula/k8s-goat-batch-check": Error response from daemon: Get "https://registry-1.docker.io/v2/": dial tcp 31.13.91.33:443: i/o timeout

Warning Failed 99s (x3 over 9m55s) kubelet Failed to pull image "madhuakula/k8s-goat-batch-check": Error response from daemon: Get "https://registry-1.docker.io/v2/": net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

Warning Failed 99s (x4 over 9m55s) kubelet Error: ErrImagePull

Normal BackOff 32s (x10 over 9m55s) kubelet Back-off pulling image "madhuakula/k8s-goat-batch-check"

Warning Failed 32s (x10 over 9m55s) kubelet Error: ImagePullBackOff

Normal Pulling 17s (x5 over 10m) kubelet Pulling image "madhuakula/k8s-goat-batch-check"

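The events show that the kubelet times out connecting to Docker Hub (registry-1.docker.io). Rebuild the cluster, this time specifying the cn image mirror: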

minikube delete ; minikube start --base-image='registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.46' --image-mirror-country=cn

Reinstall the Goat pods:

bash setup-kubernetes-goat.sh

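The images still cannot be pulled. This is probably because --image-mirror-country only changes where minikube pulls its own Kubernetes system images from. The Goat images are still pulled from Docker Hub.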

watch kubectl get po -A

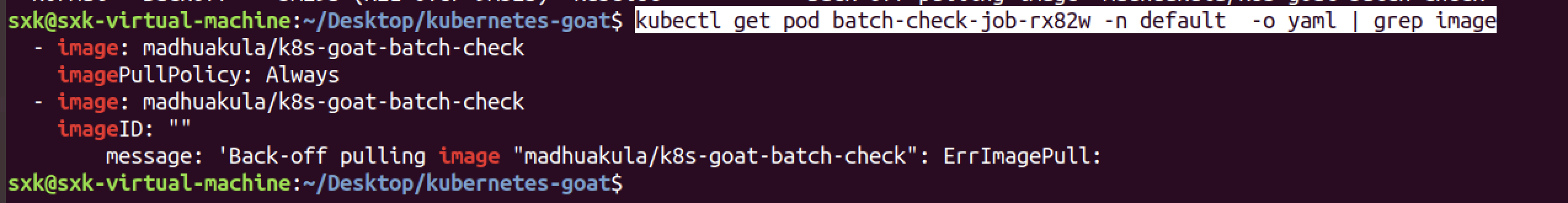

Use the following command to find the exact image name this pod uses:

kubectl get pod batch-check-job-rx82w -n default -o yaml | grep image

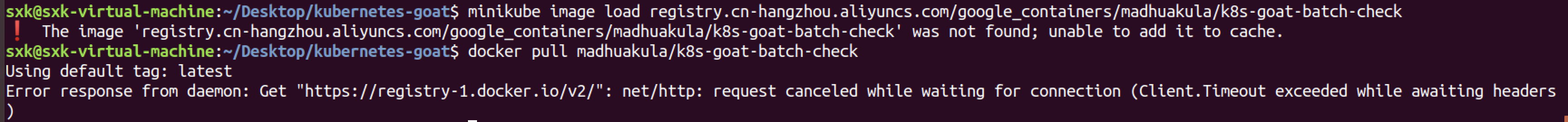

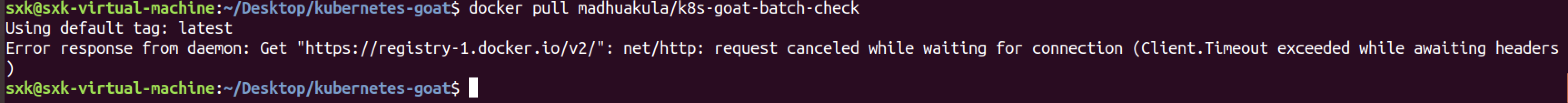

Try pulling the image directly:

The host machine can pull the image.

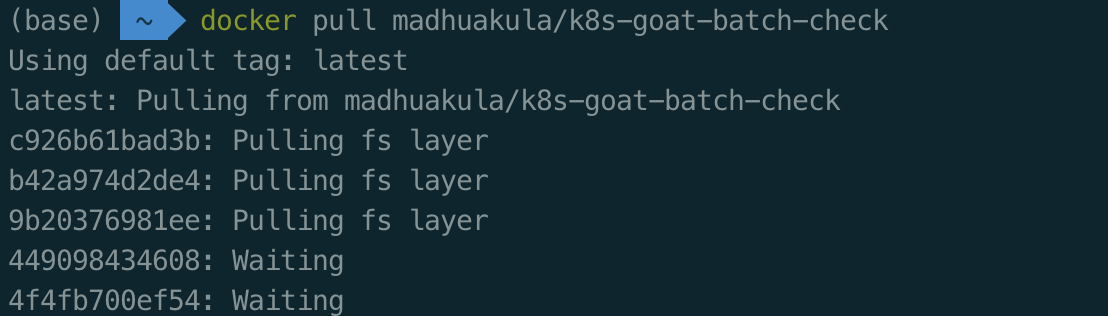

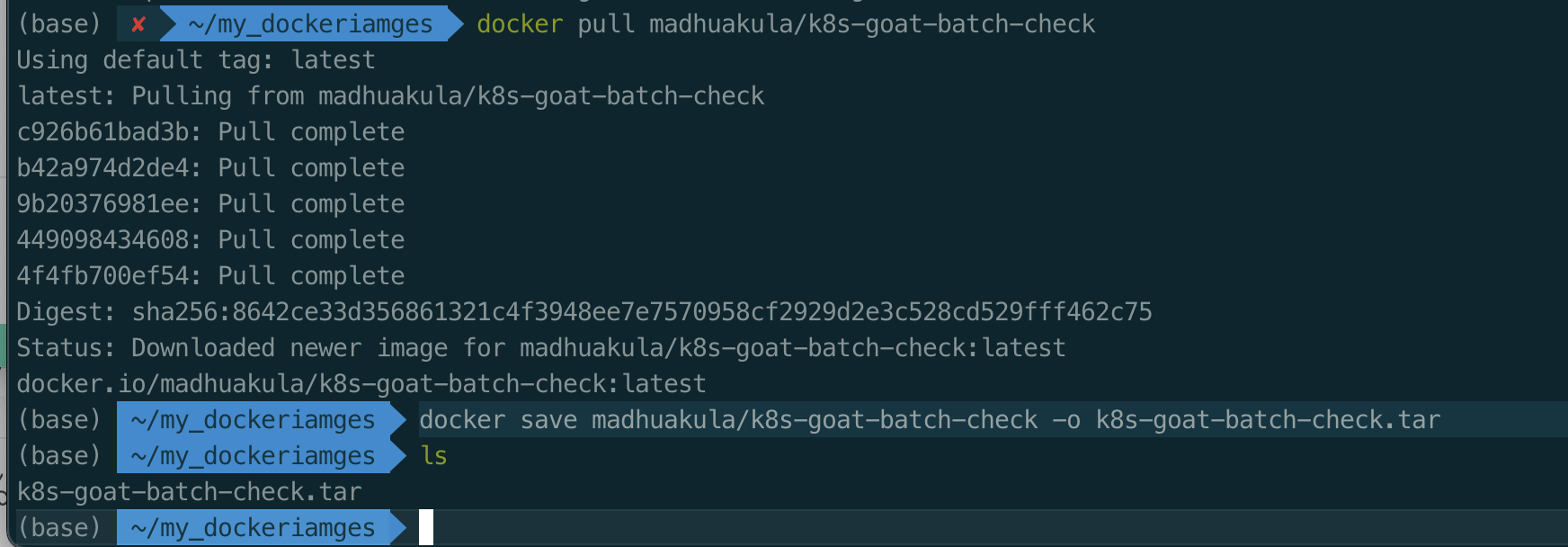

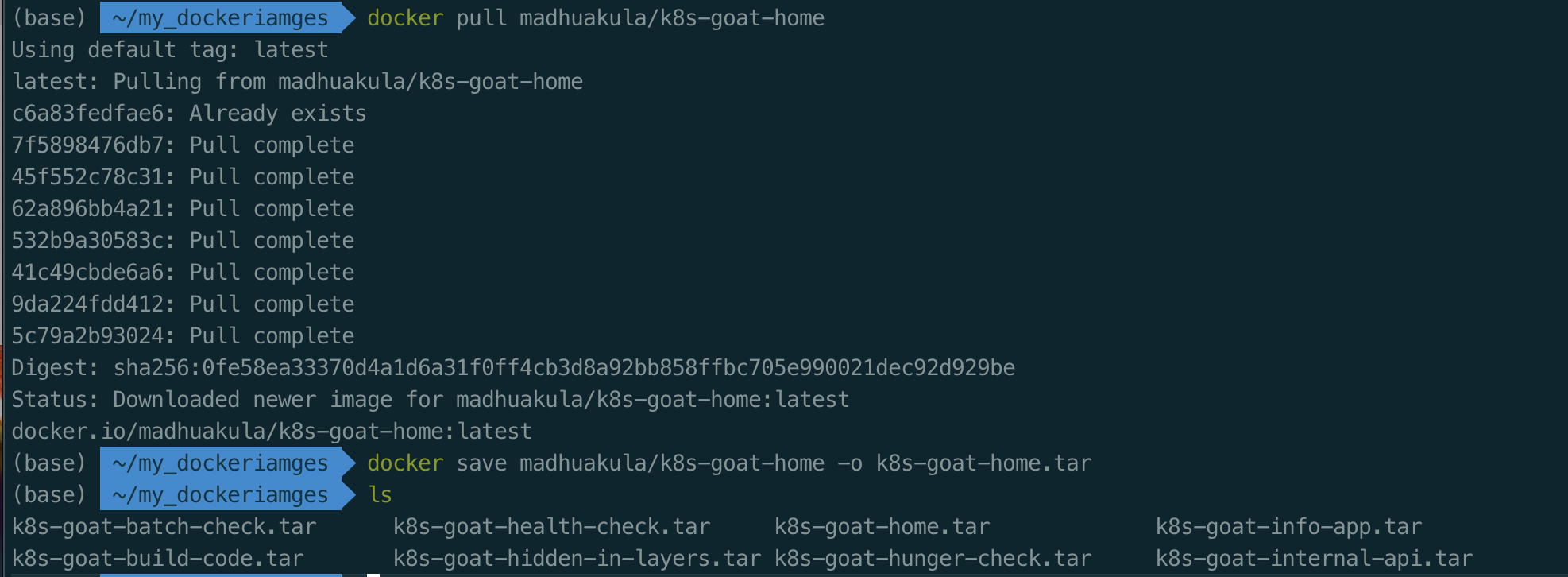

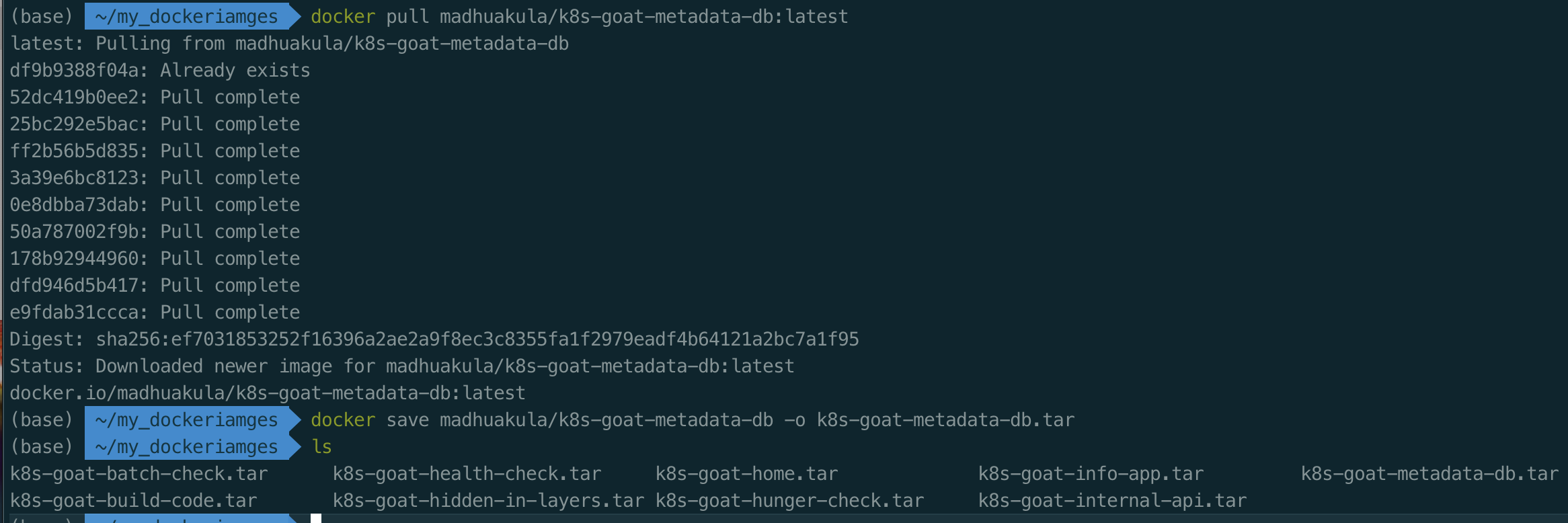

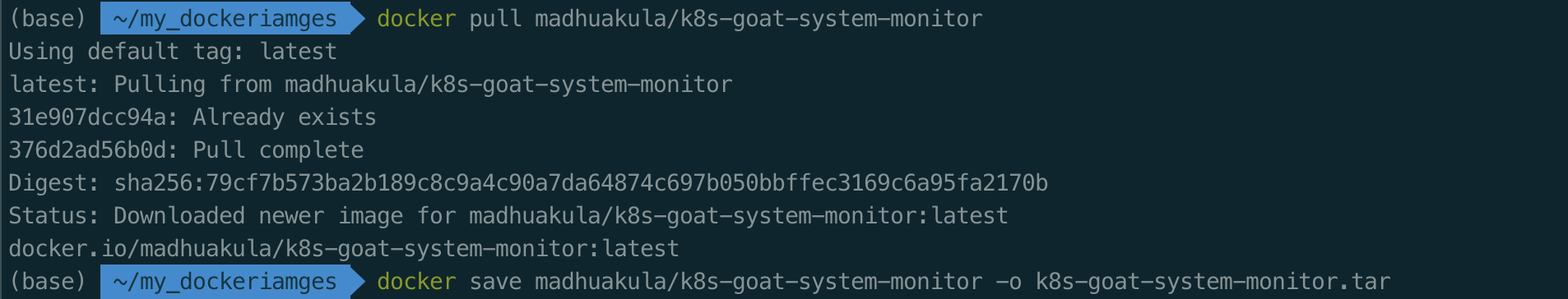

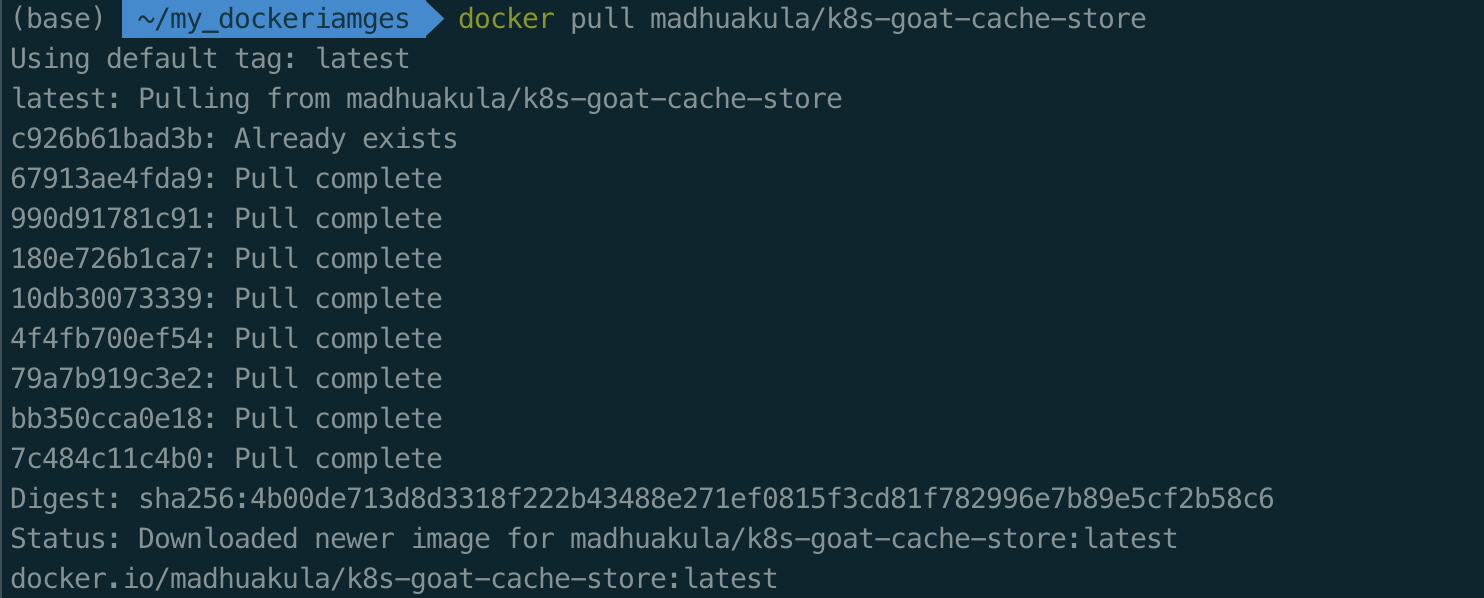

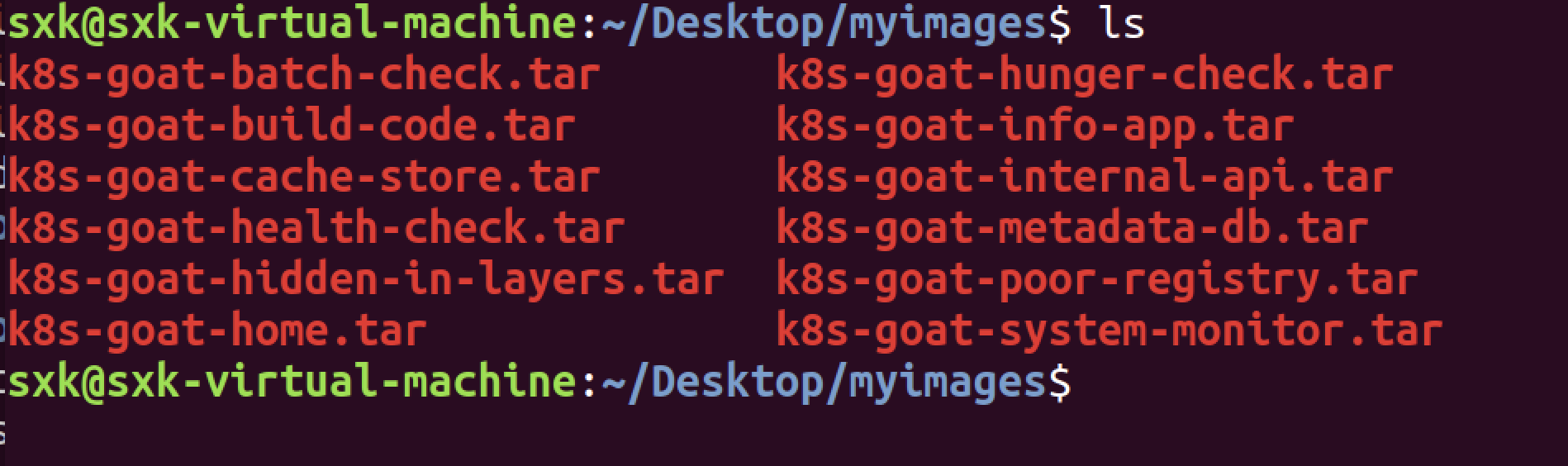

So first pull the image on the host, then transfer it to the VM and load it there:

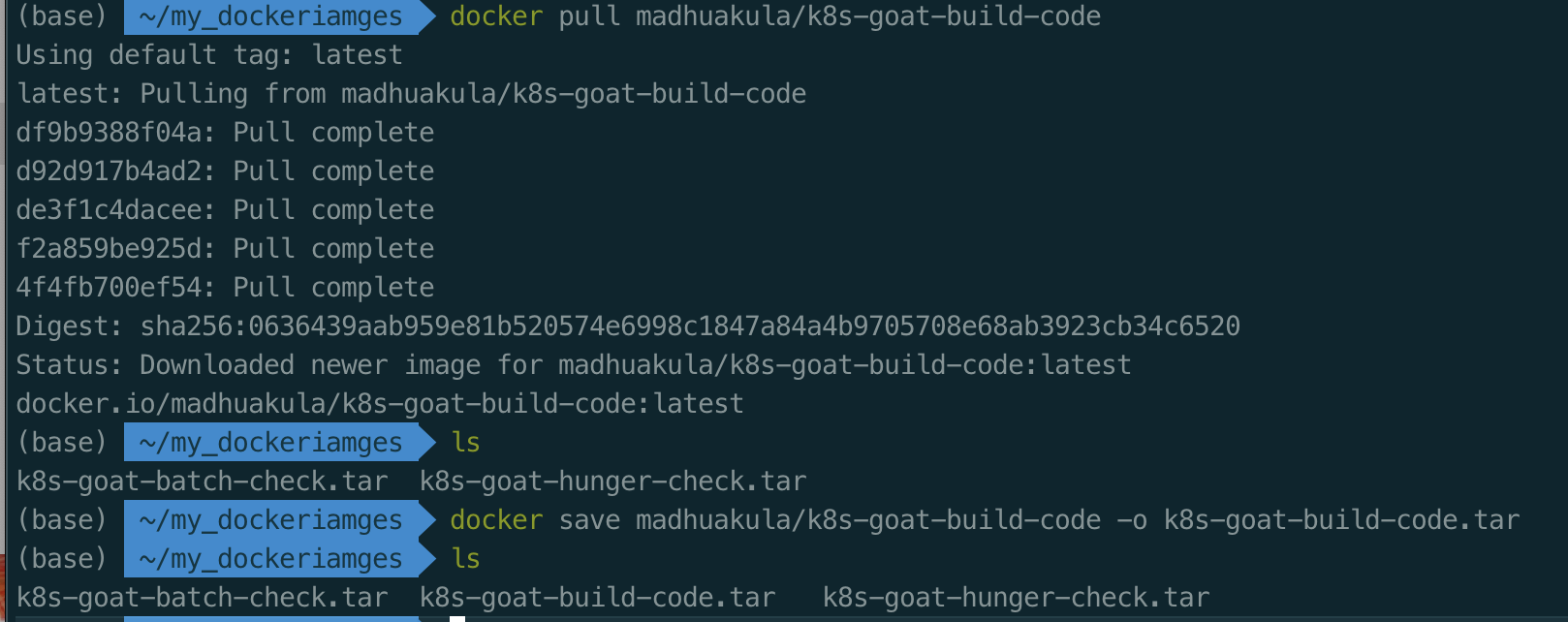

docker pull madhuakula/k8s-goat-batch-check

docker save madhuakula/k8s-goat-batch-check -o k8s-goat-batch-check.tar

ls

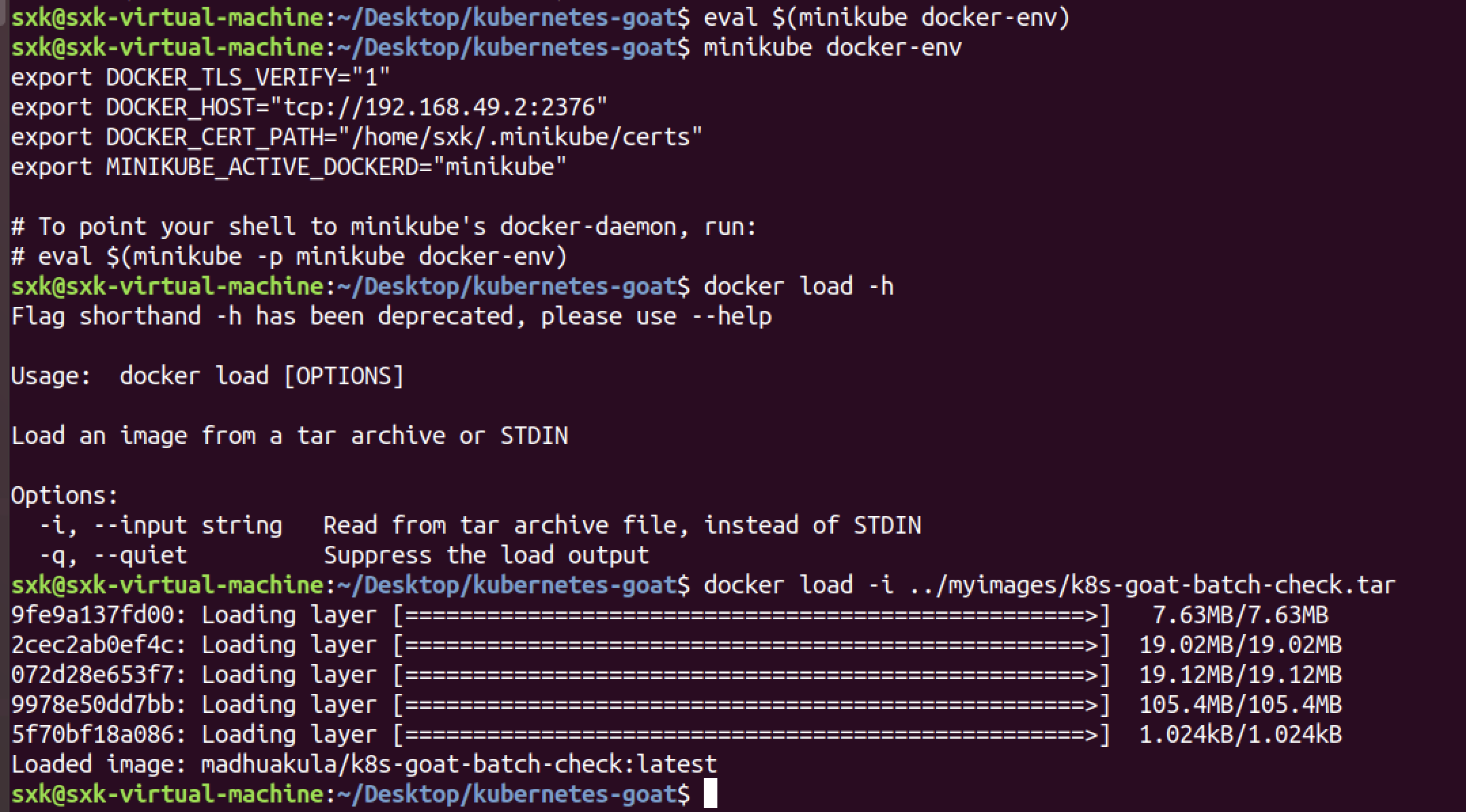
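The pull-and-save steps above have to be repeated for every missing image. Below is a sketch of a loop that does this on the host. image_tar_name and save_images are hypothetical helpers, and the output directory is an assumption. The DOCKER override exists only so the loop can be dry-run.

```bash
#!/usr/bin/env bash
# Turn an image reference into a tar file name by dropping the registry/namespace
# path and the tag, e.g. madhuakula/k8s-goat-metadata-db:latest -> k8s-goat-metadata-db.tar
# (digest references such as name@sha256:... are not handled).
image_tar_name() {
  local name="${1##*/}"
  name="${name%%:*}"
  printf '%s.tar\n' "$name"
}

# Pull each image and save it into DIR. DOCKER defaults to the docker CLI and can be
# overridden (e.g. DOCKER=echo) for a dry run.
save_images() {
  local dir="$1" img
  shift
  mkdir -p "$dir" || return 1
  for img in "$@"; do
    "${DOCKER:-docker}" pull "$img" &&
      "${DOCKER:-docker}" save "$img" -o "$dir/$(image_tar_name "$img")" || return 1
  done
}

# Example (on the host, which can reach Docker Hub):
#   save_images ./myimages madhuakula/k8s-goat-batch-check madhuakula/k8s-goat-home
```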

eval $(minikube docker-env)

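What does this command do? It points the Docker client in the current terminal at the Docker daemon inside the minikube node. After that, every docker command (for example docker build or docker load) operates on minikube's internal Docker environment rather than on the host's.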

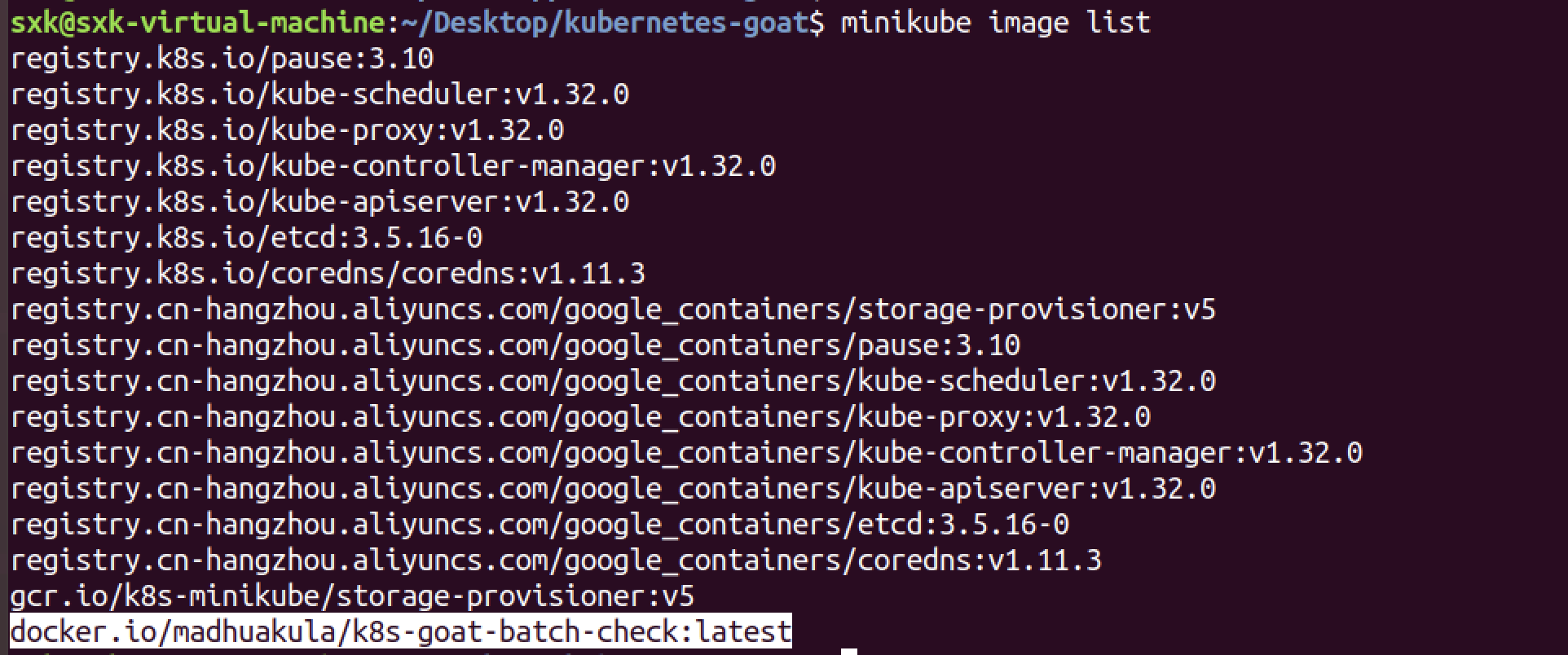

minikube image list

kubectl get deployments -A

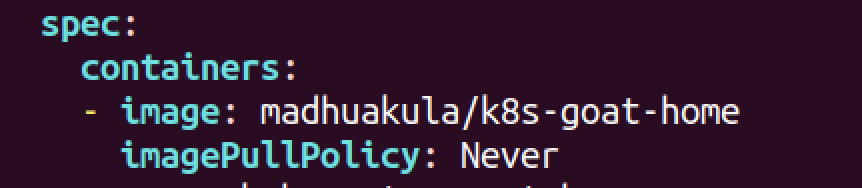

# Change the Deployment's pull policy (to avoid pulling from the remote registry)

kubectl edit deployment <deployment-name>

# Add the following configuration:

spec:
  template:
    spec:
      containers:
      - name: ...
        imagePullPolicy: Never # force use of the local image

kubectl edit deployment kubernetes-goat-home-deployment
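kubectl edit opens an editor for every Deployment. As a non-interactive alternative, kubectl patch --type=json accepts a JSON Patch. The sketch below only builds that patch string, and the helper name pull_never_patch is hypothetical. It uses the "add" operation, which overwrites imagePullPolicy if the field is already set. A Job's pod template generally cannot be changed after creation, so for batch-check-job you have to delete the Job and apply the edited manifest again instead.

```bash
#!/usr/bin/env bash
# Build a JSON Patch (RFC 6902) that sets imagePullPolicy to Never for containers
# 0..N-1 of a pod template. "add" on an existing field replaces its value.
pull_never_patch() {
  local n="$1" i=0 sep=""
  printf '['
  while [ "$i" -lt "$n" ]; do
    printf '%s{"op":"add","path":"/spec/template/spec/containers/%d/imagePullPolicy","value":"Never"}' "$sep" "$i"
    sep=","
    i=$((i + 1))
  done
  printf ']\n'
}

# Example (hypothetical session against the minikube cluster):
#   d=kubernetes-goat-home-deployment
#   n=$(kubectl get deployment "$d" -o jsonpath='{.spec.template.spec.containers[*].name}' | wc -w)
#   kubectl patch deployment "$d" --type=json -p "$(pull_never_patch "$n")"
```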

How do you find the Deployment that a pod belongs to?

kubectl get pod <pod-name> -o jsonpath='{.metadata.ownerReferences[0].name}' | cut -d'-' -f1-$(kubectl get pod <pod-name> -o jsonpath='{.metadata.ownerReferences[0].name}' | tr -cd '-' | wc -c | awk '{print $1+1}')

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ kubectl get pod batch-check-job-rx82w -o jsonpath='{.metadata.ownerReferences[0].name}' | cut -d'-' -f1-$(kubectl get pod batch-check-job-rx82w -o jsonpath='{.metadata.ownerReferences[0].name}' | tr -cd '-' | wc -c | awk '{print $1+1}')

batch-check-job

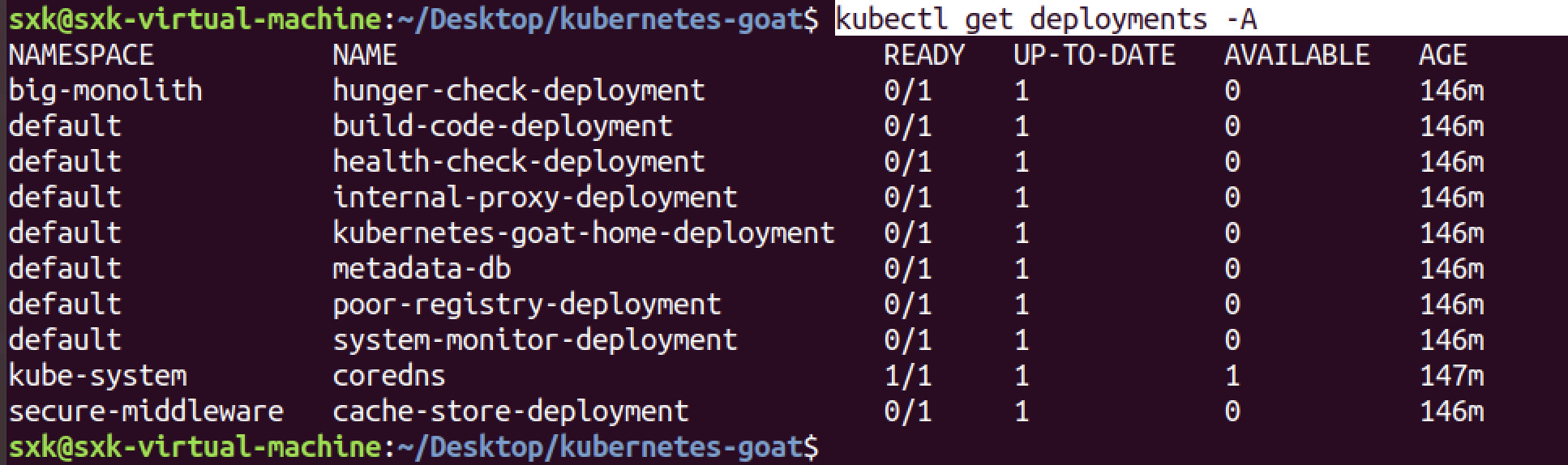
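The cut expression above keeps fields 1 through (number of hyphens + 1), which is every field, so it simply prints the owner's name unchanged. That is correct for a Job-owned pod such as batch-check-job-rx82w. For a Deployment's pod, however, the owner is a ReplicaSet named <deployment>-<pod-template-hash>. Below is a kind-aware sketch. The function name is hypothetical, and it assumes that any ReplicaSet owner was created by a Deployment.

```bash
#!/usr/bin/env bash
# Map a pod's first ownerReference ("<kind> <name>") to the workload to edit.
# A Deployment's pods are owned by a ReplicaSet named <deployment>-<pod-template-hash>,
# so the last hyphen-separated segment is stripped; other owners (e.g. Job) pass through.
# Assumption: the ReplicaSet was created by a Deployment, not on its own.
owner_to_workload() {
  local kind="$1" name="$2"
  if [ "$kind" = "ReplicaSet" ]; then
    printf 'Deployment/%s\n' "${name%-*}"
  else
    printf '%s/%s\n' "$kind" "$name"
  fi
}

# Example:
#   read -r kind name < <(kubectl get pod batch-check-job-rx82w \
#     -o jsonpath='{.metadata.ownerReferences[0].kind} {.metadata.ownerReferences[0].name}')
#   owner_to_workload "$kind" "$name"
```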

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ kubectl get deployments -A

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

big-monolith hunger-check-deployment 0/1 1 0 157m

default build-code-deployment 0/1 1 0 157m

default health-check-deployment 0/1 1 0 157m

default internal-proxy-deployment 0/1 1 0 157m

default kubernetes-goat-home-deployment 0/1 1 0 157m

default metadata-db 0/1 1 0 157m

default poor-registry-deployment 0/1 1 0 157m

default system-monitor-deployment 0/1 1 0 157m

kube-system coredns 1/1 1 1 157m

secure-middleware cache-store-deployment 0/1 1 0 157m

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$

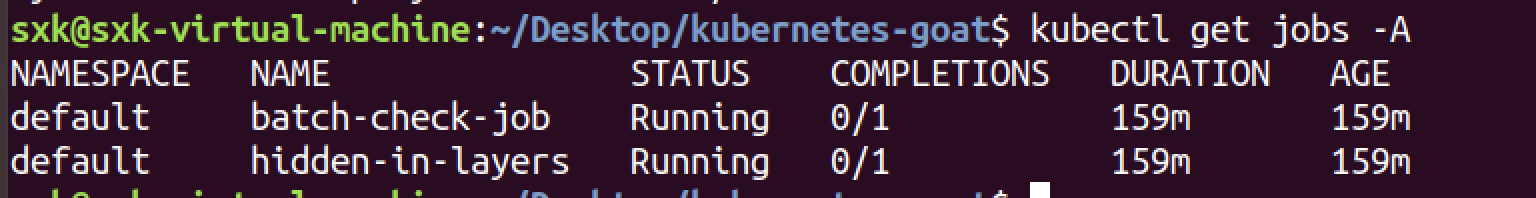

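But batch-check-job does not appear in the kubectl get deployments -A output, because it is a Job, not a Deployment. Kubernetes workload resources come in different kinds:

- Deployment: manages long-running pods (such as web services).

- Job: runs one-off tasks (such as data processing or batch jobs).

- CronJob: runs Jobs on a schedule (similar to Linux crontab).

kubectl get jobs -A # list Jobs in all namespaces

After a long struggle, the image problem was still unsolved.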

3.4 Attempt 4 [Succeeded ✅]

3.4.1 Digging deeper into the problem

Examine the setup-kubernetes-goat.sh file:

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ cat setup-kubernetes-goat.sh

#!/bin/bash

# Author: Madhu Akula

# This program has been created as part of Kubernetes Goat

# Kubernetes Goat setup and manage vulnerable infrastructure

# Checking kubectl setup

kubectl version > /dev/null 2>&1

if [ $? -eq 0 ];

then

echo "kubectl setup looks good."

else

echo "Error: Could not find kubectl or an other error happened, please check kubectl setup."

exit;

fi

# Deprecated helm2 scenario

# Checking helm2 setup

#helm2 --help > /dev/null 2>&1

#if [ $? -eq 0 ];

#then

# echo "helm2 setup looks good."

#else

# echo "Error: Could not find helm2, please check helm2 setup."

# exit;

#fi

#

## helm2 setup

#echo "setting up helm2 rbac account and initialise tiller"

# kubectl apply -f scenarios/helm2-rbac/setup.yaml

#helm2 init --service-account tiller

# wait for tiller service to ready

# echo "waiting for helm2 tiller service to be active."

# sleep 50

# deploying insecure-rbac scenario

echo "deploying insecure super admin scenario"

kubectl apply -f scenarios/insecure-rbac/setup.yaml

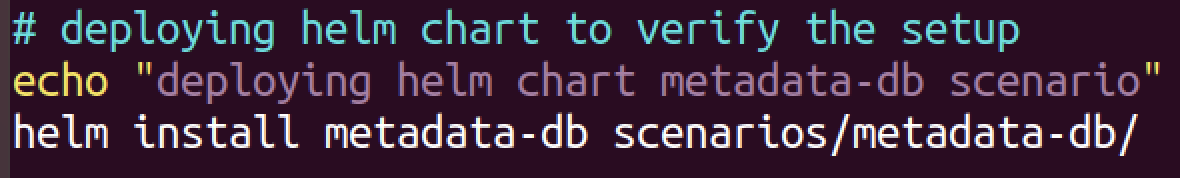

# deploying helm chart to verify the setup

echo "deploying helm chart metadata-db scenario"

helm install metadata-db scenarios/metadata-db/

# setup the scenarios/configurations

echo 'deploying the vulnerable scenarios manifests'

kubectl apply -f scenarios/batch-check/job.yaml

kubectl apply -f scenarios/build-code/deployment.yaml

kubectl apply -f scenarios/cache-store/deployment.yaml

kubectl apply -f scenarios/health-check/deployment.yaml

kubectl apply -f scenarios/hunger-check/deployment.yaml

kubectl apply -f scenarios/internal-proxy/deployment.yaml

kubectl apply -f scenarios/kubernetes-goat-home/deployment.yaml

kubectl apply -f scenarios/poor-registry/deployment.yaml

kubectl apply -f scenarios/system-monitor/deployment.yaml

kubectl apply -f scenarios/hidden-in-layers/deployment.yaml

echo 'Successfully deployed Kubernetes Goat. Have fun learning Kubernetes Security!'

echo 'Ensure pods are in running status before running access-kubernetes-goat.sh script'

echo 'Now run the bash access-kubernetes-goat.sh to access the Kubernetes Goat environment.'

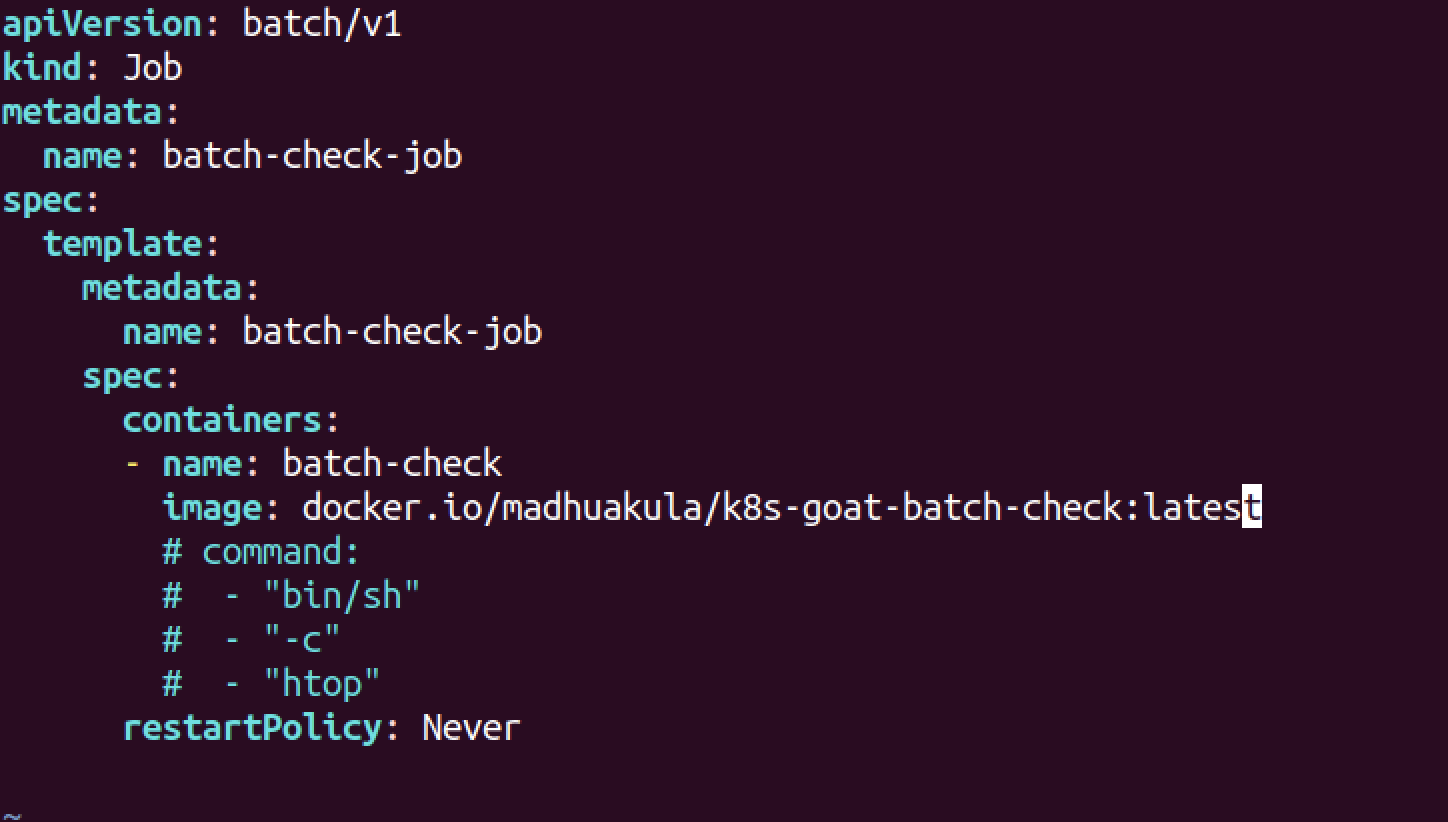

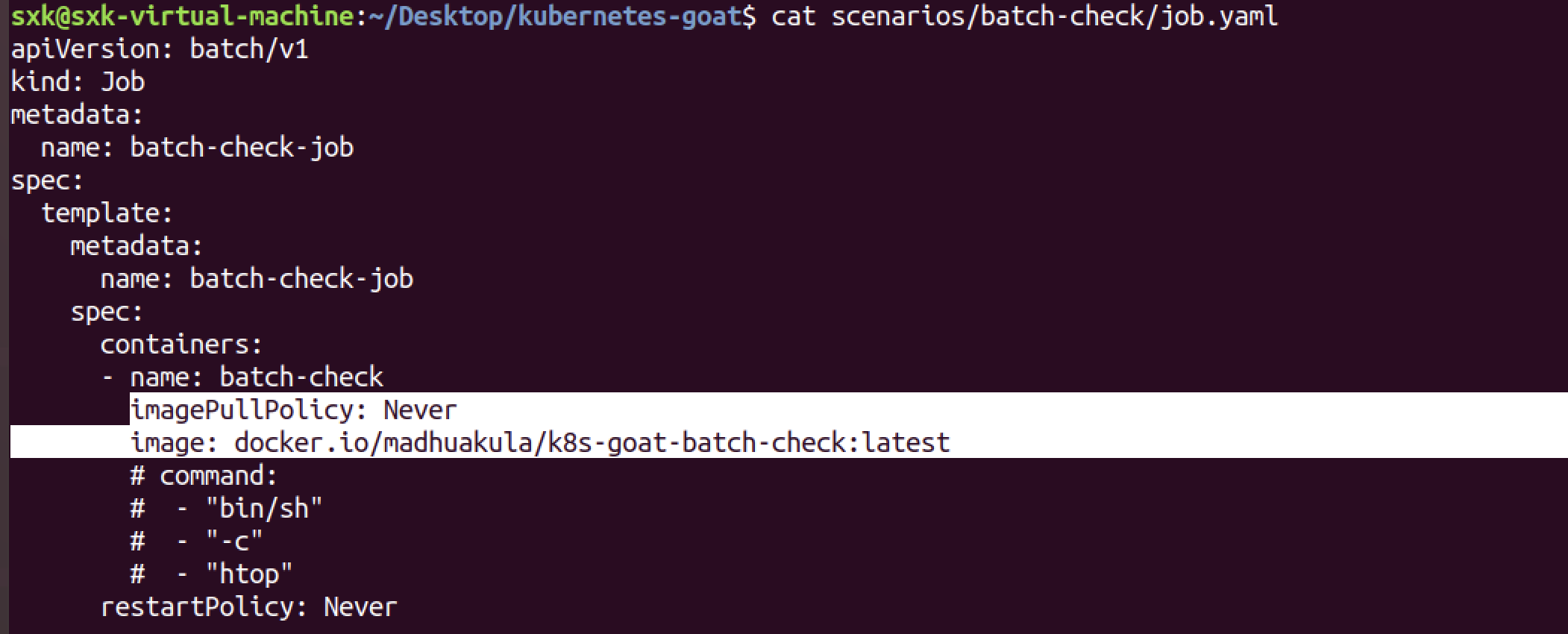

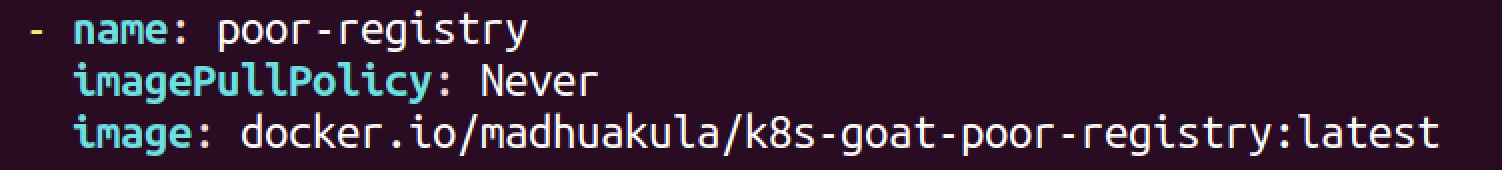

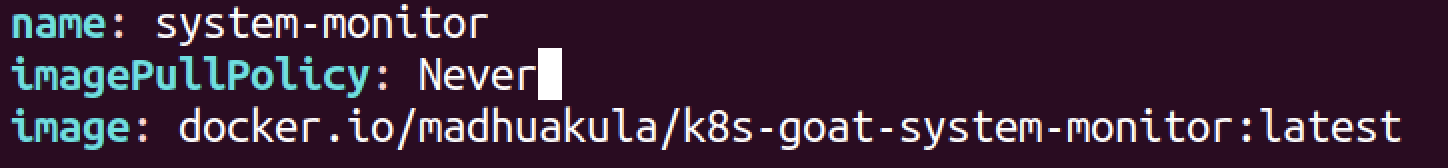

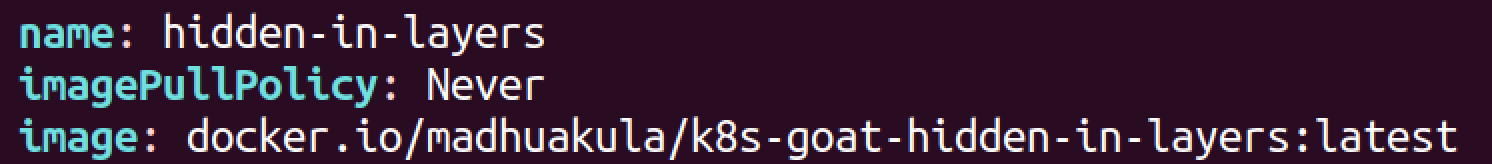

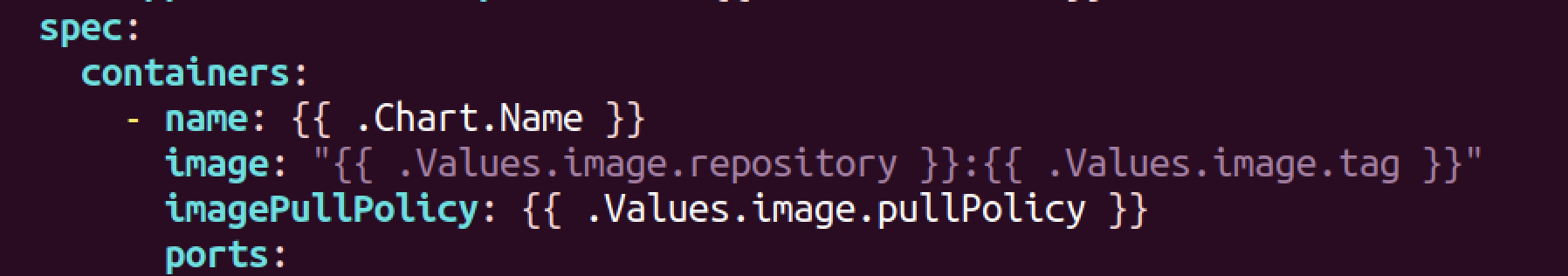

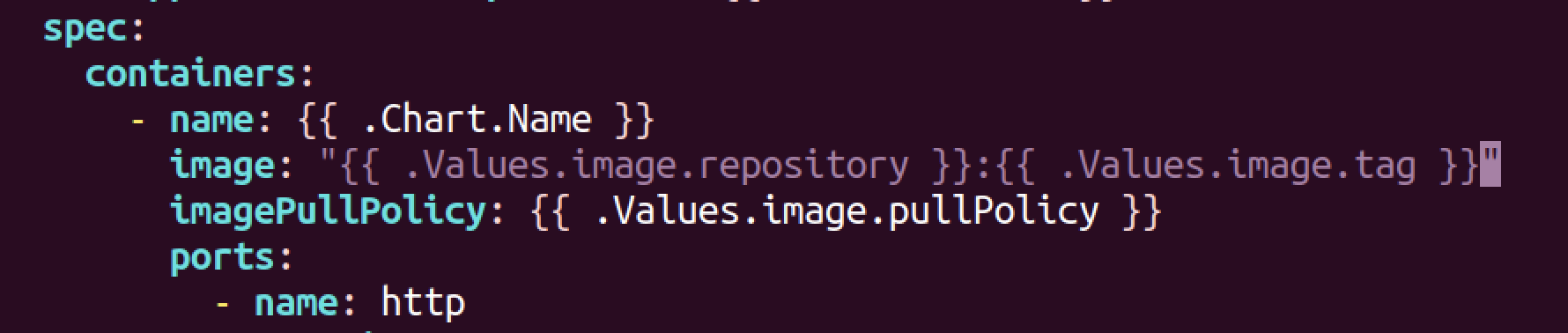

发现它是通过一些yaml配置文件来创建Job和Deployment资源的,我只需要修改其中image字段是否就可以?

但是还是发现去pull镜像去了。

再添加一个字段:imagePullPolicy: Never

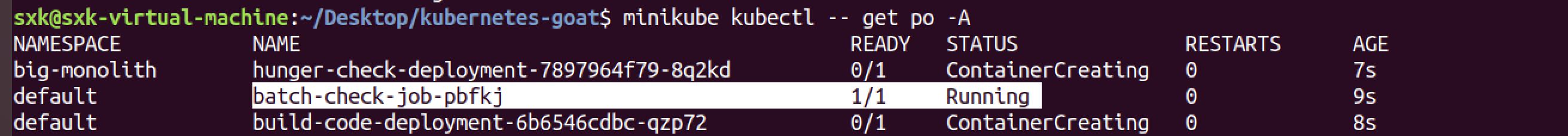

但是重新执行bash setup-kubernetes-goat.sh时会报错,重新执行minikube启动命令之后,记载镜像再执行安装命令,最后相关的pod成功运行起来了。

所以其他pod镜像拉取失败的情况也可以这么操作。

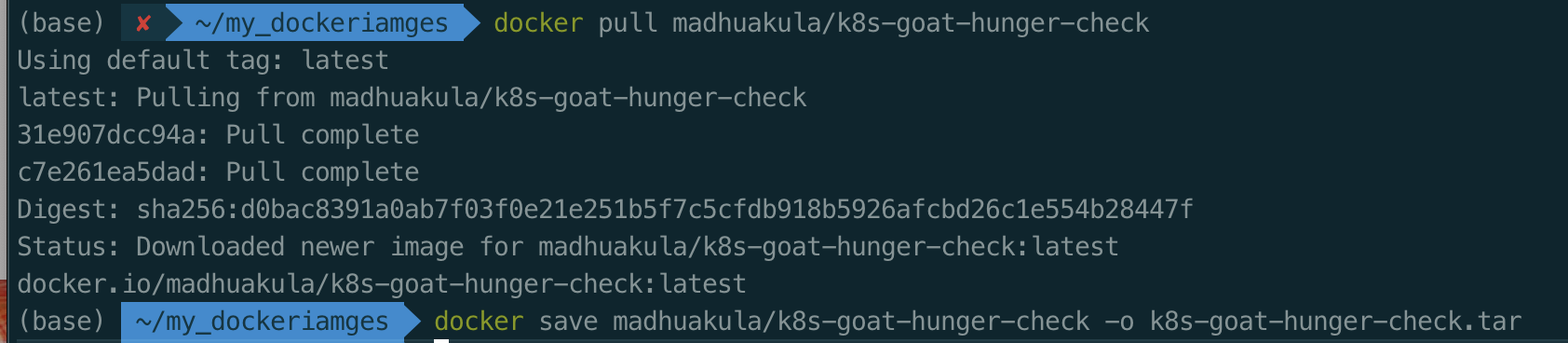

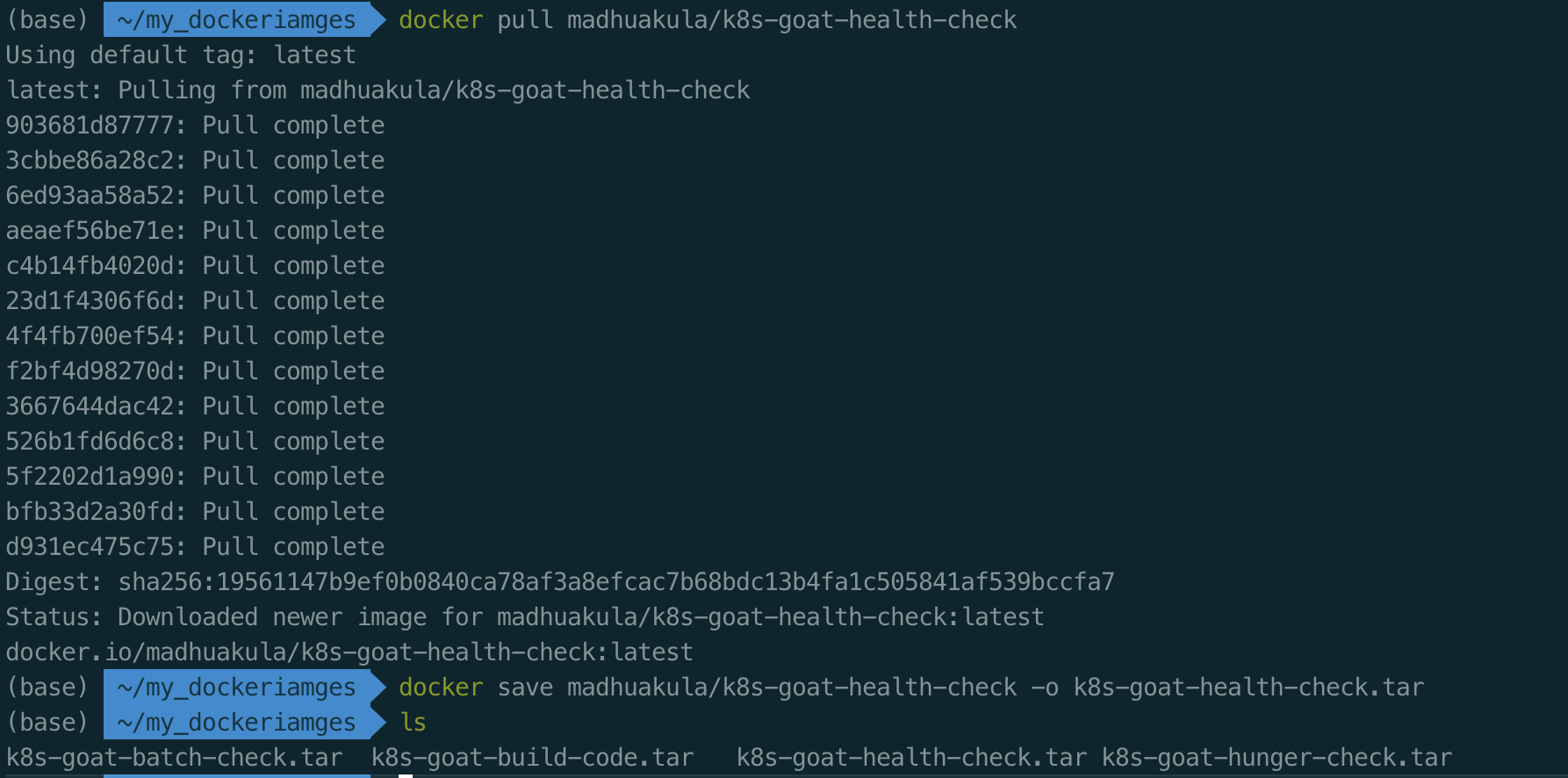

3.4.2 获取所有拉取失败的pod的镜像

提前在宿主机拉取所有的镜像:

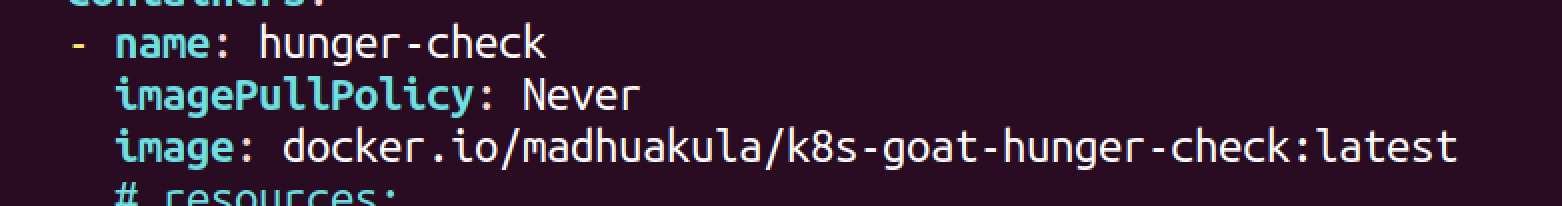

kubectl get pod <pod名称> -n <namespace> -o yaml | grep image #查看pod拉取失败的镜像是哪个- hunger-check-deployment

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ kubectl get pod hunger-check-deployment-7897964f79-8q2kd -n big-monolith -o yaml | grep image

- image: madhuakula/k8s-goat-hunger-check

imagePullPolicy: Always

- image: madhuakula/k8s-goat-hunger-check

imageID: ""

message: 'Back-off pulling image "madhuakula/k8s-goat-hunger-check": ErrImagePull:

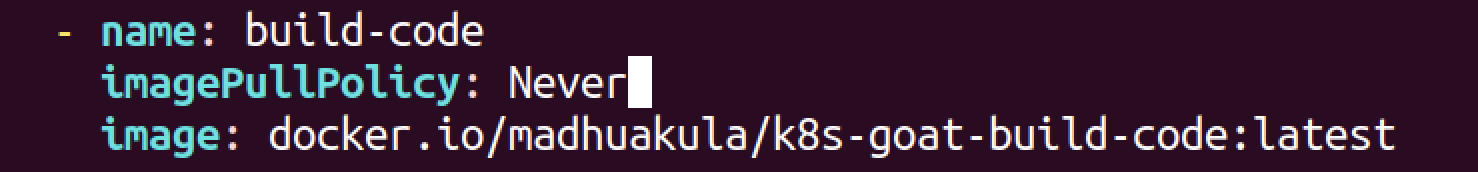

- build-code-deployment

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ kubectl get pod build-code-deployment-6b6546cdbc-qzp72 -o yaml | grep image

- image: madhuakula/k8s-goat-build-code

imagePullPolicy: Always

- image: madhuakula/k8s-goat-build-code

imageID: ""

message: 'Back-off pulling image "madhuakula/k8s-goat-build-code": ErrImagePull:

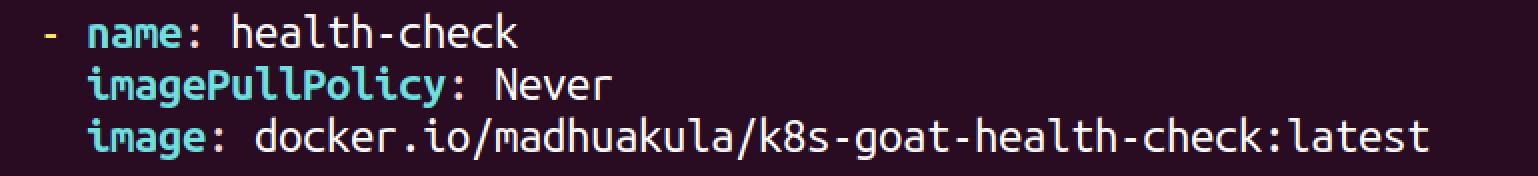

- health-check-deployment

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ kubectl get pod health-check-deployment-5998f5c646-ncb6b -o yaml | grep image

- image: madhuakula/k8s-goat-health-check

imagePullPolicy: Always

- image: madhuakula/k8s-goat-health-check

imageID: ""

message: 'Back-off pulling image "madhuakula/k8s-goat-health-check": ErrImagePull:

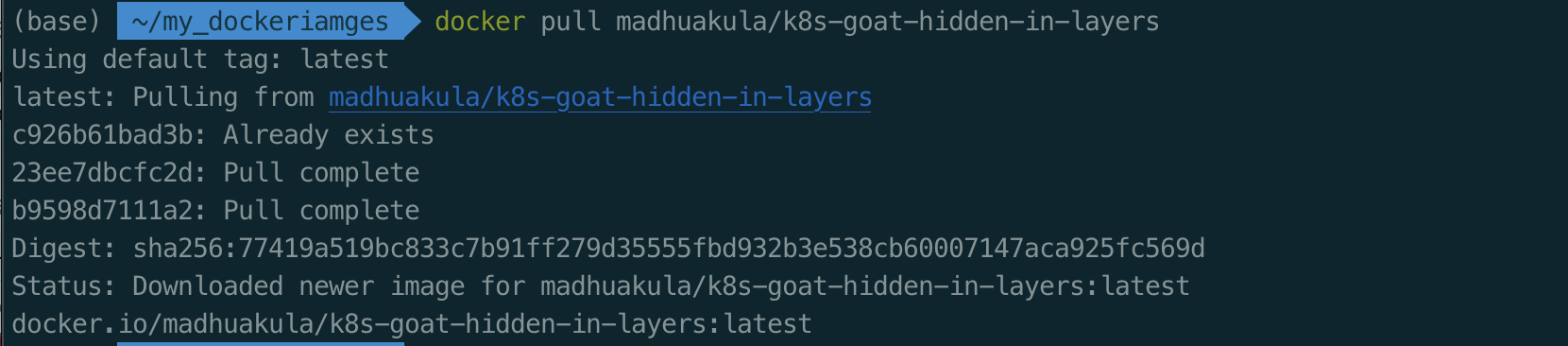

- hidden-in-layers

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ kubectl get pod hidden-in-layers-xxwrj -o yaml | grep image

- image: madhuakula/k8s-goat-hidden-in-layers

imagePullPolicy: Always

- image: madhuakula/k8s-goat-hidden-in-layers

imageID: ""

message: 'Back-off pulling image "madhuakula/k8s-goat-hidden-in-layers": ErrImagePull:

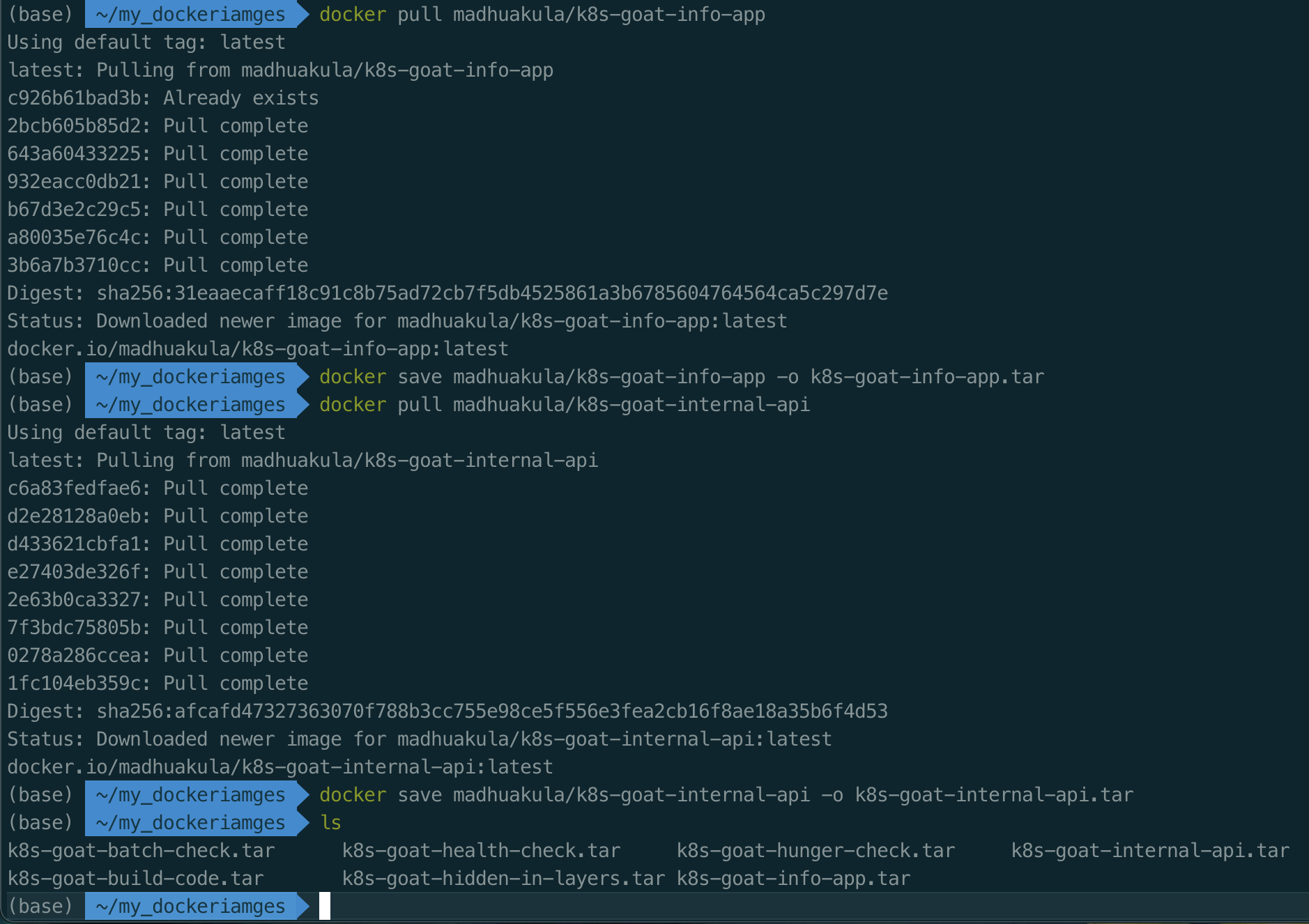

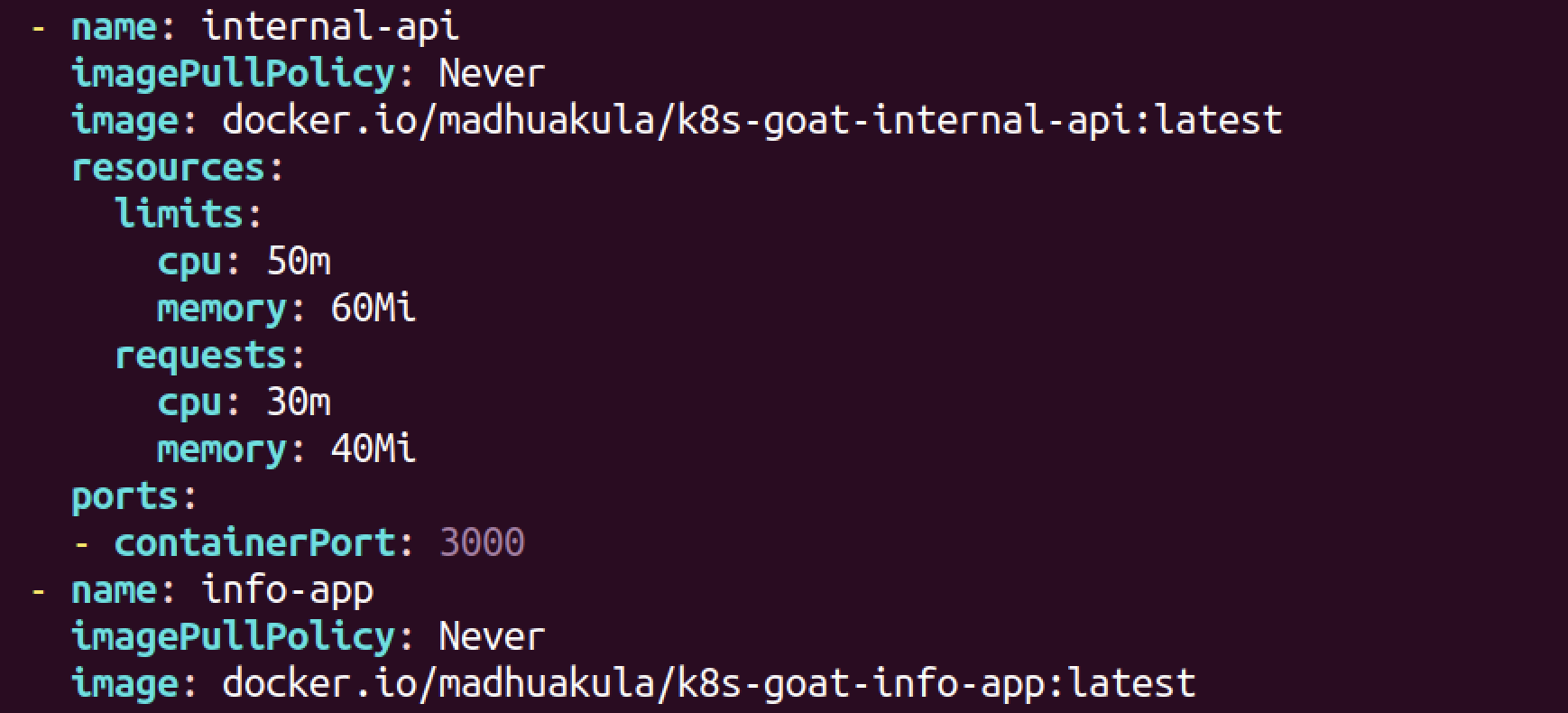

- internal-proxy

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ kubectl get pod internal-proxy-deployment-59f75f7dfc-lw65q -o yaml | grep image

- image: madhuakula/k8s-goat-internal-api

imagePullPolicy: Always

- image: madhuakula/k8s-goat-info-app

imagePullPolicy: Always

- image: madhuakula/k8s-goat-info-app

imageID: ""

message: 'Back-off pulling image "madhuakula/k8s-goat-info-app": ErrImagePull:

- image: madhuakula/k8s-goat-internal-api

imageID: ""

message: 'Back-off pulling image "madhuakula/k8s-goat-internal-api": ErrImagePull:

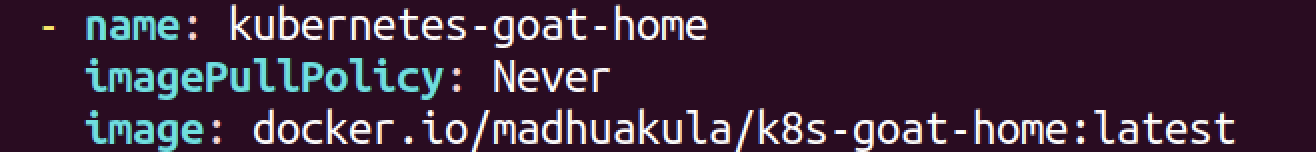

- kubernetes-goat-home-deployment

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ kubectl get pod kubernetes-goat-home-deployment-948856695-qm7lc -o yaml | grep image

- image: madhuakula/k8s-goat-home

imagePullPolicy: Always

- image: madhuakula/k8s-goat-home

imageID: ""

message: 'Back-off pulling image "madhuakula/k8s-goat-home": ErrImagePull:

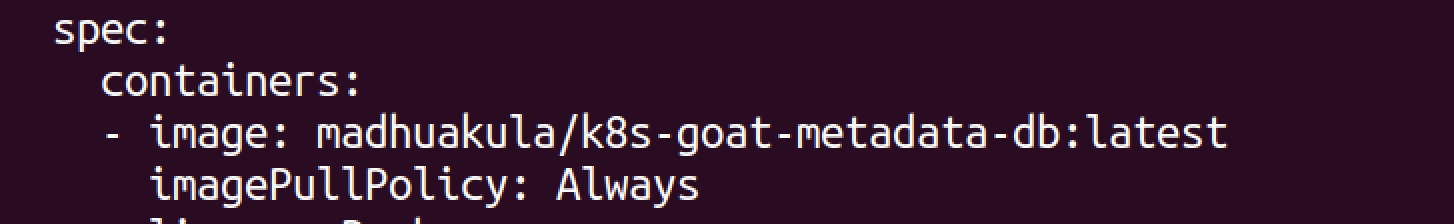

- metadata-db

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ kubectl get pod metadata-db-68f8785b7c-szw9t -o yaml | grep image

- image: madhuakula/k8s-goat-metadata-db:latest

imagePullPolicy: Always

- image: madhuakula/k8s-goat-metadata-db:latest

imageID: ""

message: 'Back-off pulling image "madhuakula/k8s-goat-metadata-db:latest":

- poor-registry-deployment

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ kubectl get pod poor-registry-deployment-5df5bbbdc-676nn -o yaml | grep image

- image: madhuakula/k8s-goat-poor-registry

imagePullPolicy: Always

- image: madhuakula/k8s-goat-poor-registry

imageID: ""

- system-monitor-deployment

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ kubectl get pod system-monitor-deployment-666d8bcc8-fkw9b -o yaml | grep image

image: madhuakula/k8s-goat-system-monitor

imagePullPolicy: Always

- image: madhuakula/k8s-goat-system-monitor

imageID: ""

message: 'Back-off pulling image "madhuakula/k8s-goat-system-monitor": ErrImagePull:

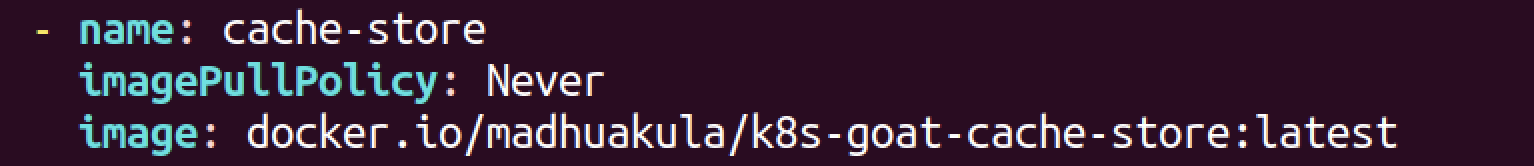

- cache-store-deployment

sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ kubectl get pod cache-store-deployment-555c5b9866-9fjvn -n secure-middleware -o yaml | grep image

- image: madhuakula/k8s-goat-cache-store

imagePullPolicy: Always

- image: madhuakula/k8s-goat-cache-store

imageID: ""

message: 'Back-off pulling image "madhuakula/k8s-goat-cache-store": ErrImagePull:

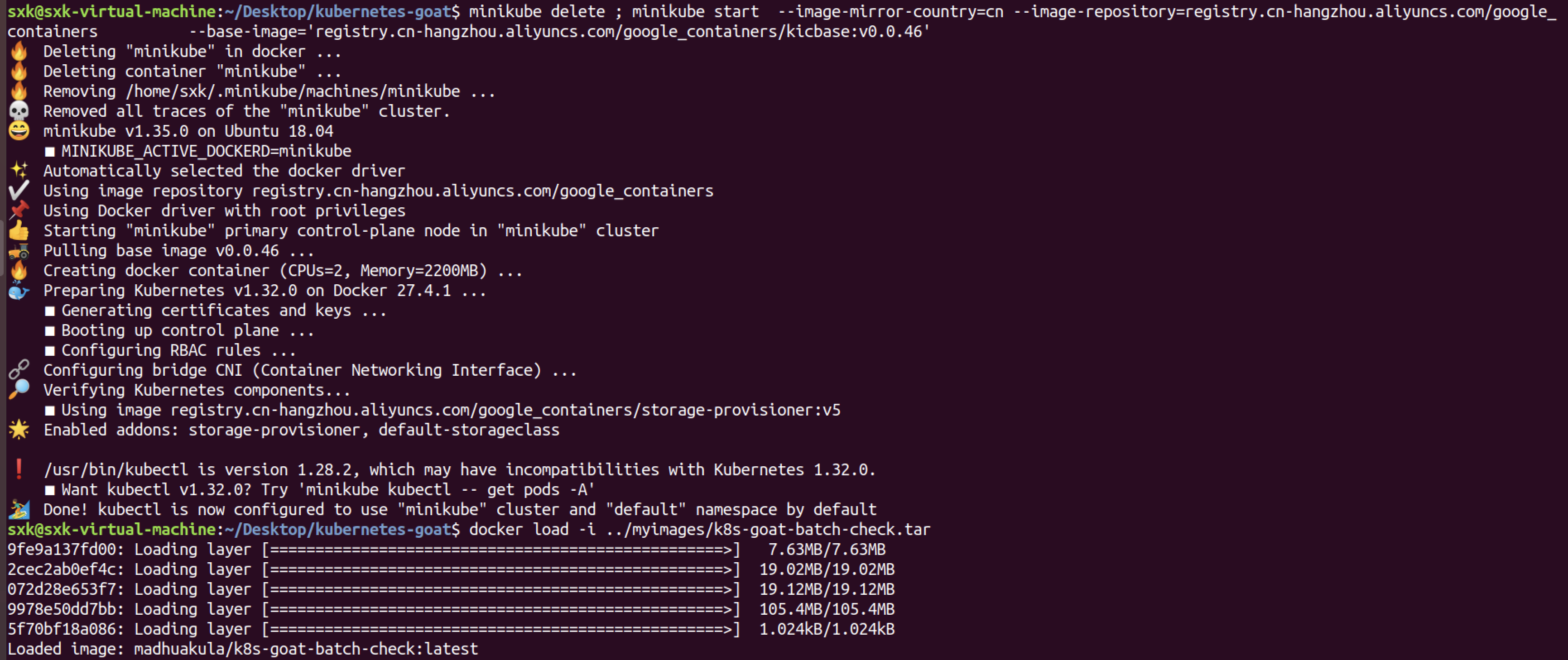

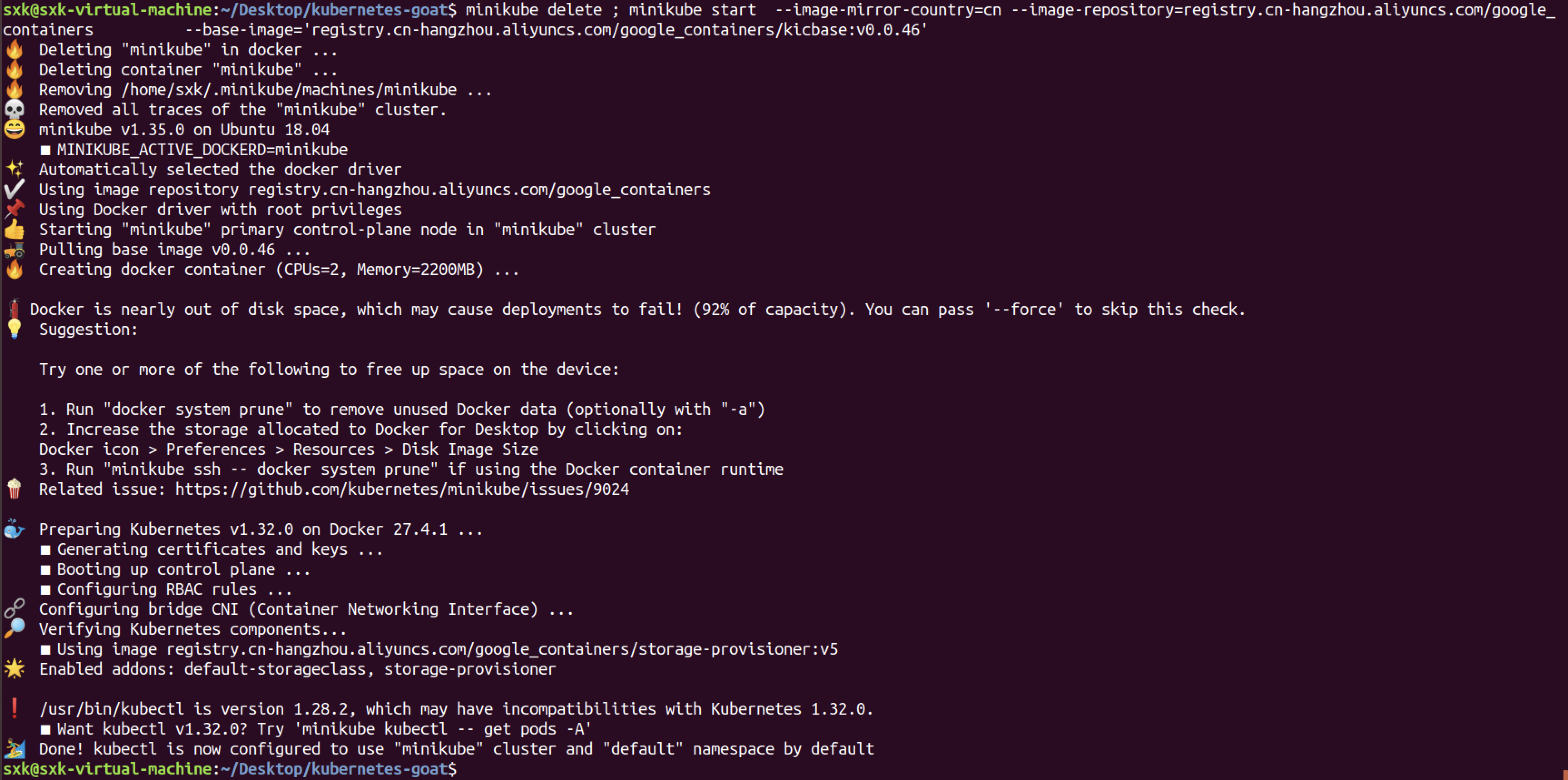
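Instead of running the grep command pod by pod, you can list the container images of every pod in a single query. The jsonpath expression in the usage comment is similar to the one the Kubernetes documentation uses to list all container images in a cluster. The unique_images helper is a hypothetical sketch that splits, filters and de-duplicates the names. It covers only regular containers, not init containers.

```bash
#!/usr/bin/env bash
# Print each distinct image name once, sorted, from whitespace-separated input
# (e.g. kubectl jsonpath output). An optional argument keeps only names containing it.
unique_images() {
  local filter="${1:-}"
  tr -s '[:space:]' '\n' |
    awk -v f="$filter" 'NF && (f == "" || index($0, f) > 0)' |
    LC_ALL=C sort -u
}

# Example: every Kubernetes Goat image referenced by pods in any namespace
#   kubectl get pods -A -o jsonpath='{.items[*].spec.containers[*].image}' |
#     unique_images madhuakula/
```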

3.4.3 Rebuilding the minikube cluster

minikube delete; minikube start --image-mirror-country=cn --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --base-image='registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.46'

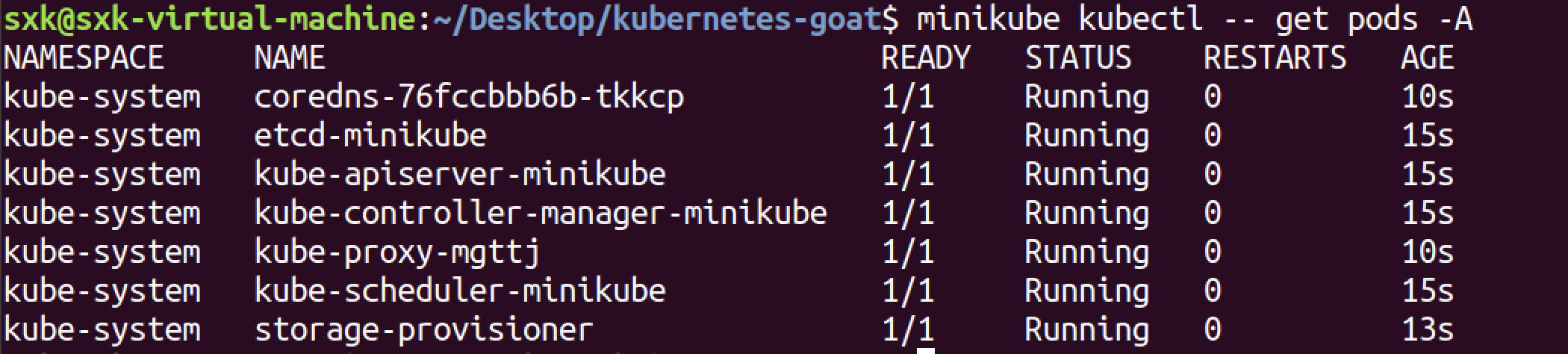

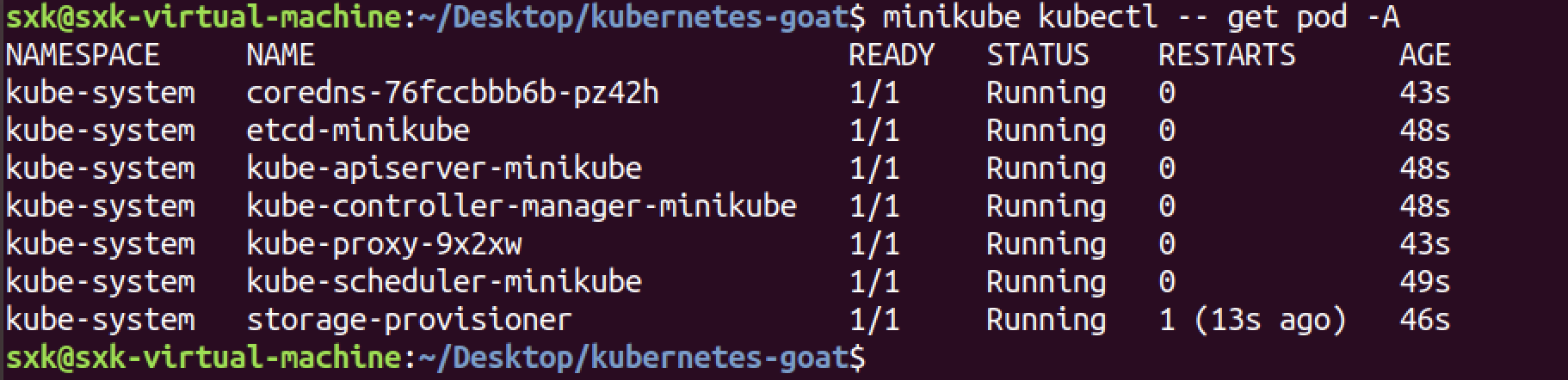

minikube kubectl -- get pod -A

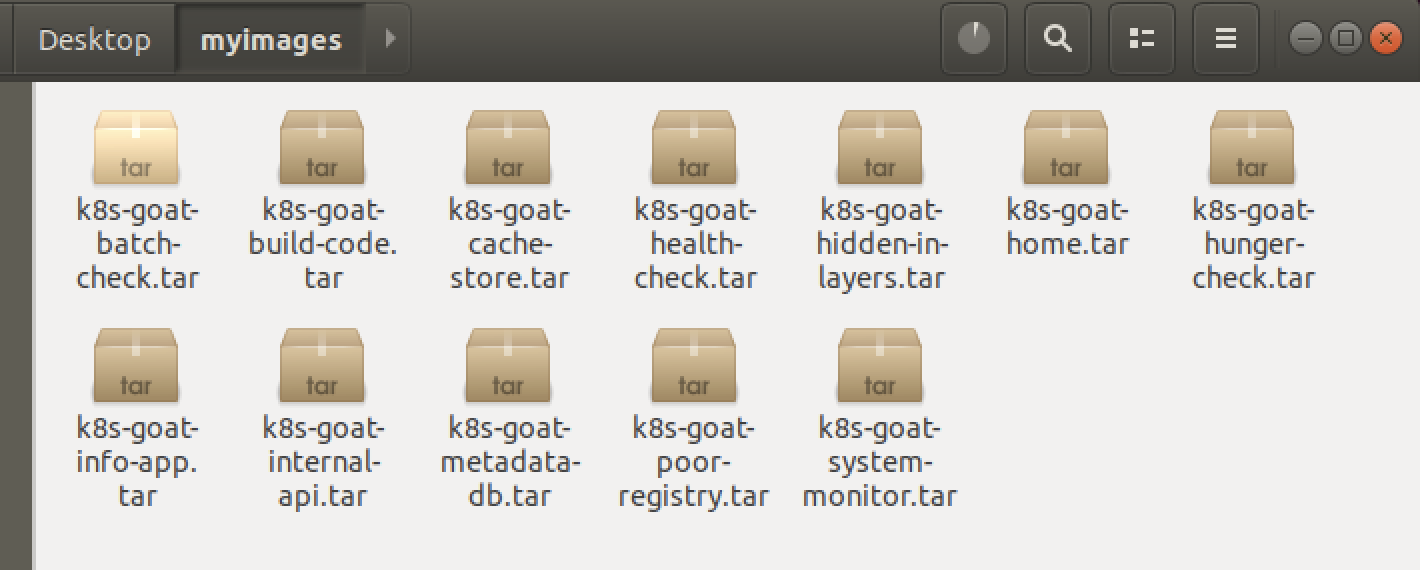

3.4.4 Loading the image files into minikube

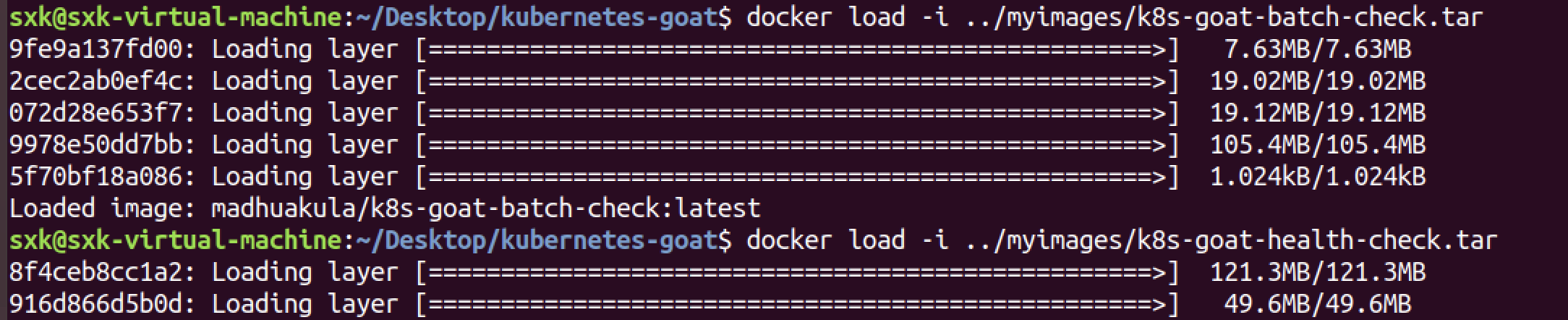

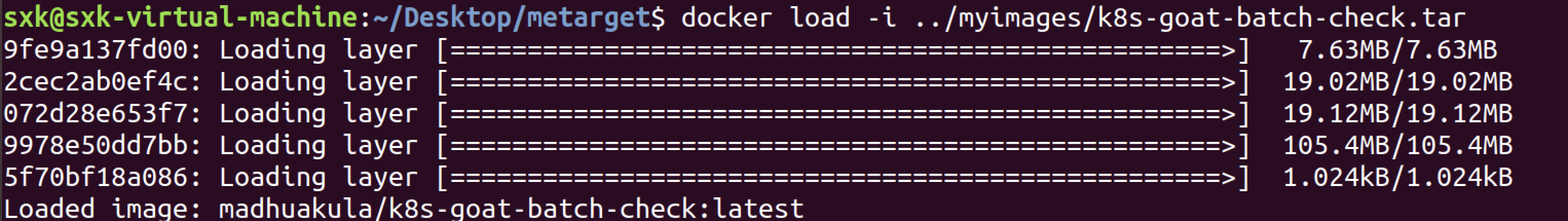

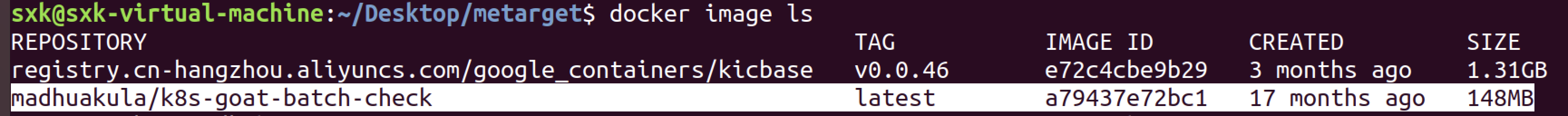

eval $(minikube docker-env)

docker load -i ../myimages/k8s-goat-batch-check.tar

Load every tar image archive in the same way.
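With many archives, a loop saves typing. Below is a minimal sketch. It assumes the tar files sit together in one directory (../myimages above) and that eval $(minikube docker-env) has already been run in the same terminal. load_all_tars and the DOCKER override are hypothetical.

```bash
#!/usr/bin/env bash
# Load every *.tar archive in DIR into the Docker daemon that the client points at.
# Run `eval $(minikube docker-env)` first so that this is minikube's daemon.
# DOCKER defaults to the docker CLI and can be overridden (e.g. DOCKER=echo) for a dry run.
load_all_tars() {
  local dir="$1" f
  for f in "$dir"/*.tar; do
    [ -e "$f" ] || continue   # no match: the glob pattern is left unexpanded
    "${DOCKER:-docker}" load -i "$f" || return 1
  done
}

# Example:
#   eval $(minikube docker-env)
#   load_all_tars ../myimages
```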

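An aside:

The VM's 20 GB disk filled up. I deleted the earlier snapshots, shut the VM down, expanded the virtual disk to 100 GB, and then grew the partition to 100 GB.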

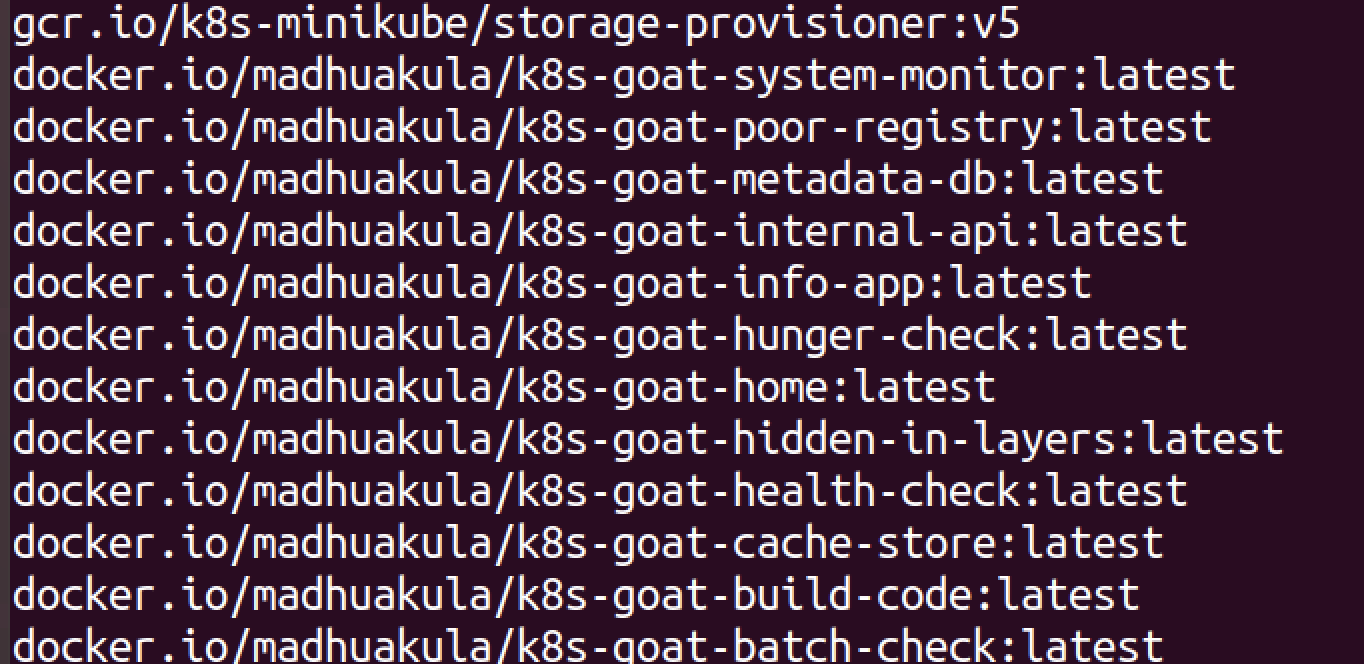

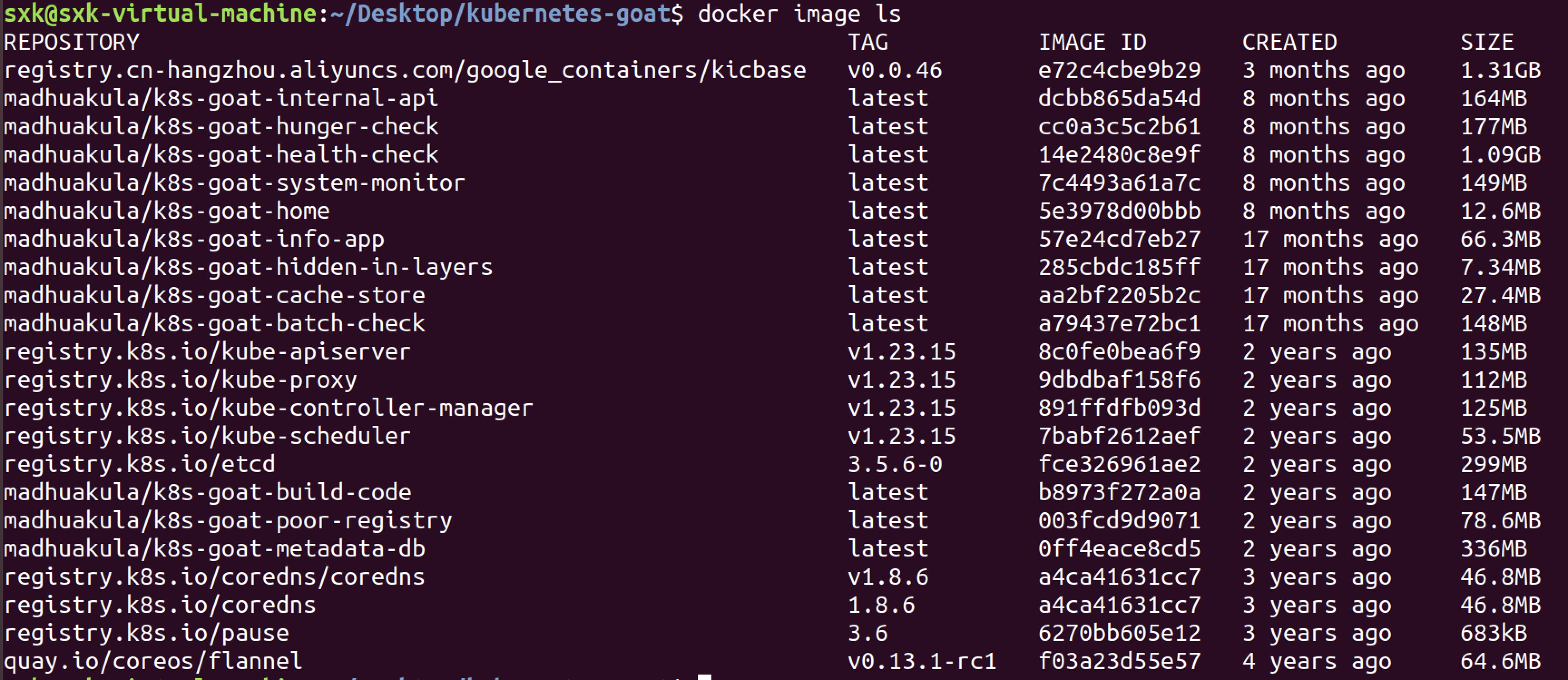

docker.io/madhuakula/k8s-goat-system-monitor:latest

docker.io/madhuakula/k8s-goat-poor-registry:latest

docker.io/madhuakula/k8s-goat-metadata-db:latest

docker.io/madhuakula/k8s-goat-internal-api:latest

docker.io/madhuakula/k8s-goat-info-app:latest

docker.io/madhuakula/k8s-goat-hunger-check:latest

docker.io/madhuakula/k8s-goat-home:latest

docker.io/madhuakula/k8s-goat-hidden-in-layers:latest

docker.io/madhuakula/k8s-goat-health-check:latest

docker.io/madhuakula/k8s-goat-cache-store:latest

docker.io/madhuakula/k8s-goat-build-code:latest

docker.io/madhuakula/k8s-goat-batch-check:latest

3.4.5 Modifying the Goat configuration files

The key part of the Goat setup script is:

kubectl apply -f scenarios/batch-check/job.yaml

kubectl apply -f scenarios/build-code/deployment.yaml

kubectl apply -f scenarios/cache-store/deployment.yaml

kubectl apply -f scenarios/health-check/deployment.yaml

kubectl apply -f scenarios/hunger-check/deployment.yaml

kubectl apply -f scenarios/internal-proxy/deployment.yaml

kubectl apply -f scenarios/kubernetes-goat-home/deployment.yaml

kubectl apply -f scenarios/poor-registry/deployment.yaml

kubectl apply -f scenarios/system-monitor/deployment.yaml

kubectl apply -f scenarios/hidden-in-layers/deployment.yaml

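Following the approach in 3.4.1, back up each Job or Deployment manifest first, then add the following field to its containers:

imagePullPolicy: Never

Files to modify:

- scenarios/build-code/deployment.yaml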

- scenarios/cache-store/deployment.yaml

- scenarios/health-check/deployment.yaml

- scenarios/hunger-check/deployment.yaml

- scenarios/internal-proxy/deployment.yaml

- scenarios/kubernetes-goat-home/deployment.yaml

- scenarios/poor-registry/deployment.yaml

- scenarios/system-monitor/deployment.yaml

- scenarios/hidden-in-layers/deployment.yaml
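Adding the field by hand to nine files is easy to get wrong. The sketch below is an awk filter that inserts imagePullPolicy: Never after every image: line and drops any existing imagePullPolicy line. force_local_images is a hypothetical name. The filter assumes space-indented manifests with one image: key per line, so check it against the actual files before relying on it.

```bash
#!/usr/bin/env bash
# Read a Kubernetes manifest on stdin and write it to stdout with
# "imagePullPolicy: Never" placed right after every "image:" line.
# Existing imagePullPolicy lines are dropped so the field is not duplicated.
# Assumes space-indented YAML with one "image:" key per line (plain or "- image:").
force_local_images() {
  awk '
    /^ *imagePullPolicy:/ { next }
    { print }
    /^ *(- )?image:/ {
      indent = $0
      sub(/[^ ].*$/, "", indent)                   # keep only the leading spaces
      if ($0 ~ /^ *- image:/) indent = indent "  " # "- image:" opens a list item
      print indent "imagePullPolicy: Never"
    }
  '
}

# Example (keeps a .bak copy of each file, as done by hand above):
#   for s in build-code cache-store health-check hunger-check internal-proxy \
#            kubernetes-goat-home poor-registry system-monitor hidden-in-layers; do
#     f="scenarios/$s/deployment.yaml"
#     cp "$f" "$f.bak" && force_local_images < "$f.bak" > "$f"
#   done
```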

3.4.6 Installing Goat

bash setup-kubernetes-goat.sh

3.4.7 Fixing the metadata-db image pull failure

Only the metadata-db pod still fails to run.

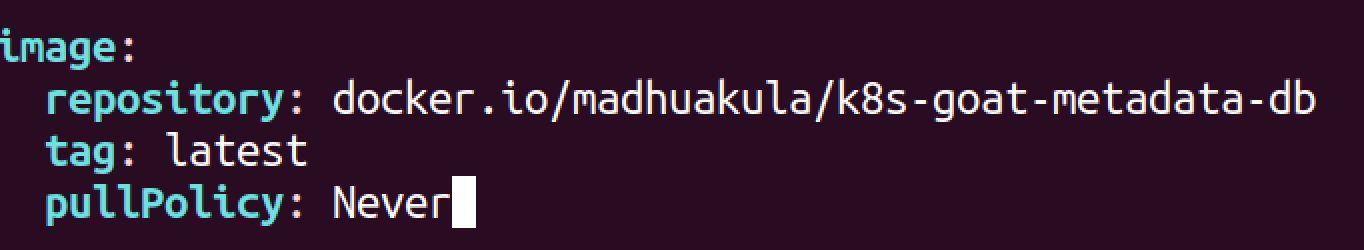

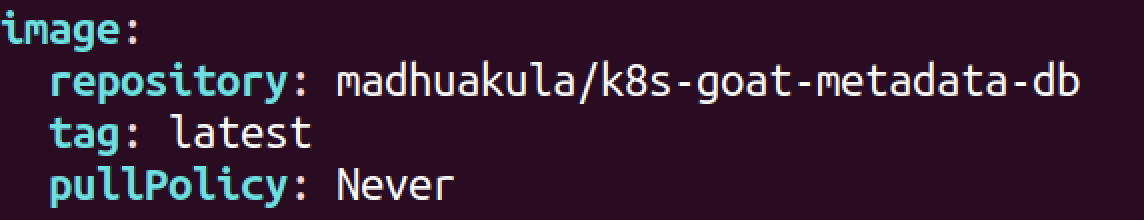

vim scenarios/metadata-db/values.yaml

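kubectl delete pod metadata-db-68f8785b7c-752wp

After the pod was recreated, it still reported the same error.

Some other configuration probably needs to change as well. The relevant part of the setup script is: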

# deploying insecure-rbac scenario

echo "deploying insecure super admin scenario"

kubectl apply -f scenarios/insecure-rbac/setup.yaml

# deploying helm chart to verify the setup

echo "deploying helm chart metadata-db scenario"

helm install metadata-db scenarios/metadata-db/

cp scenarios/metadata-db/templates/deployment.yaml scenarios/metadata-db/templates/deployment.yaml.bak

vim scenarios/metadata-db/templates/deployment.yaml

I decided not to change this file for now.

Check whether the image name is complete (registry address, namespace and tag):

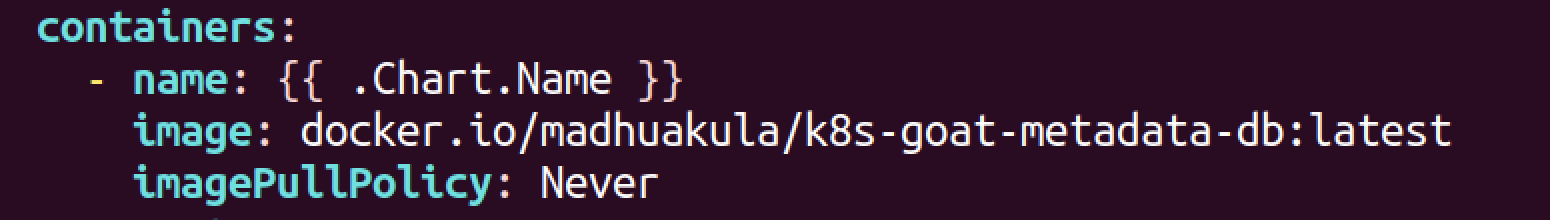

kubectl get pod metadata-db-68f8785b7c-4lm86 -o jsonpath='{.spec.containers[0].image}'

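madhuakula/k8s-goat-metadata-db:latest # this is not the value I edited

Before the change.

After the change.

Deleting the pod again to trigger a rebuild did not help.

kubectl get deployment metadata-db -o yaml

Helm probably has to be told explicitly, in some other way, to use the image that has already been loaded.

See you after the May Day holiday!

Edit the values.yaml file:

The pod still tries to pull the image after kubectl delete pod. This is expected, because editing values.yaml does not change a release that is already installed. Helm renders the new values only on the next helm install or helm upgrade. Until then, the Deployment and every pod it recreates keep the old image settings.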

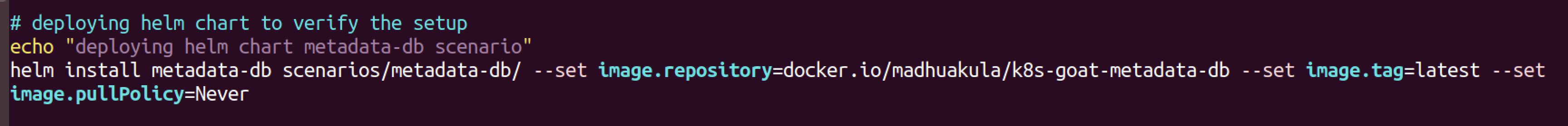

Next, modify the setup-kubernetes-goat.sh install script so that the image values are passed at install time:

helm install metadata-db scenarios/metadata-db/ \
  --set image.repository=docker.io/madhuakula/k8s-goat-metadata-db \
  --set image.tag=latest \
  --set image.pullPolicy=IfNotPresent # or Never

Before the change:

After the change:

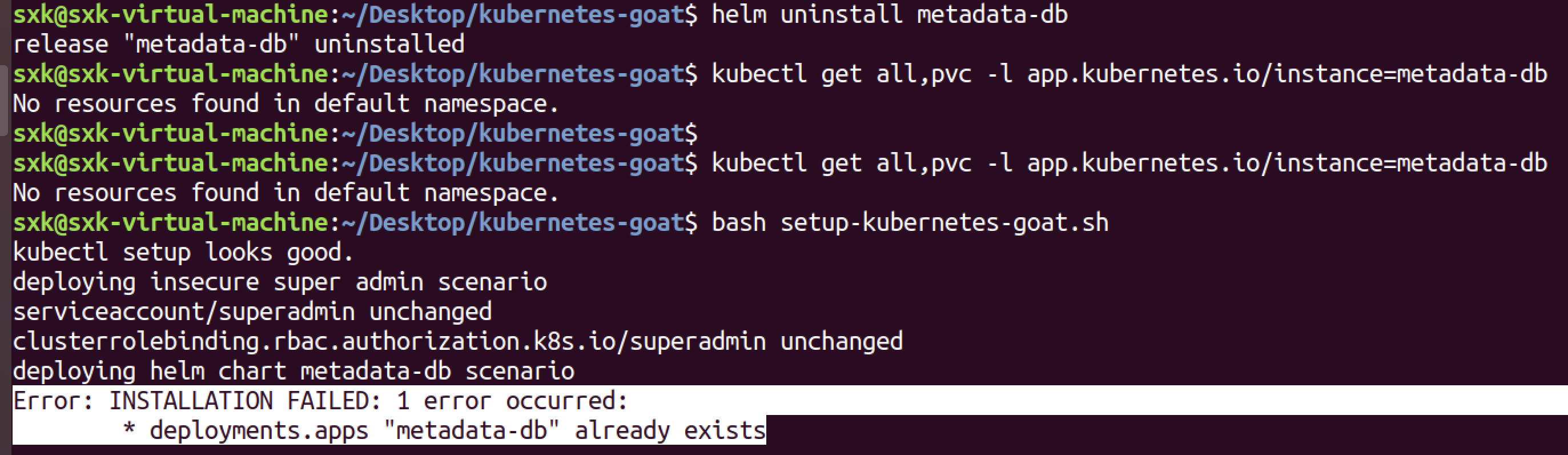

添加了相应的set配置之后重新执行安装脚本,helm install命令时报错,Error: INSTALLATION FAILED: cannot re-use a name that is still in use

# 先卸载旧的 Release

helm uninstall metadata-db

# 确认卸载是否成功(如果存在残留资源,可能需要手动清理)

kubectl get all,pvc -l app.kubernetes.io/instance=metadata-db

# 重新安装

helm install metadata-db scenarios/metadata-db/ \

--set image.repository=docker.io/madhuakula/k8s-goat-metadata-db \

--set image.tag=latest \

--set image.pullPolicy=IfNotPresent

# 删除残留的 Deployment

kubectl delete deployment metadata-db

# 检查并删除其他相关资源(Service、PVC 等)

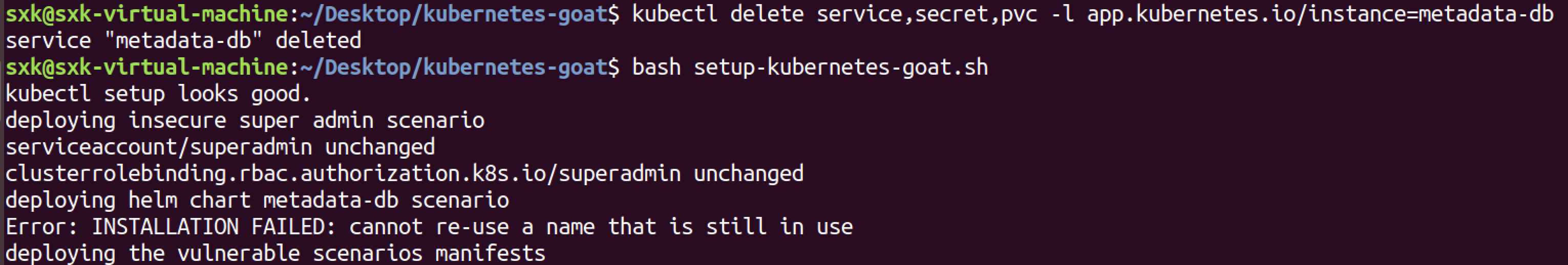

kubectl delete service,secret,pvc -l app.kubernetes.io/instance=metadata-db

# 强制检查所有资源(包括可能遗漏的)

kubectl get all,pvc,secret -l app.kubernetes.io/instance=metadata-db -o name | xargs kubectl delete

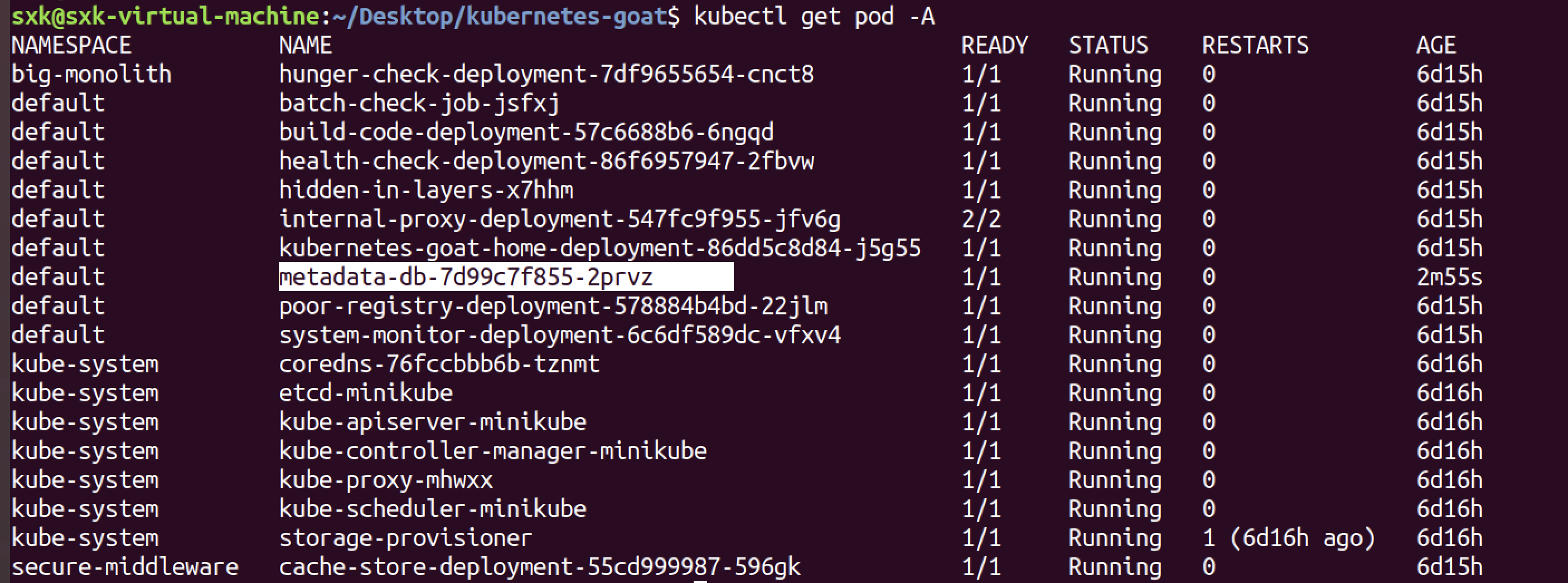

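After running the delete commands, the pod is running.

I am not sure exactly which step did the trick. The new pod name (metadata-db-7d99c7f855-... instead of metadata-db-68f8785b7c-...) does show that the Deployment's pod template changed, which points to the reinstall with the --set values.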

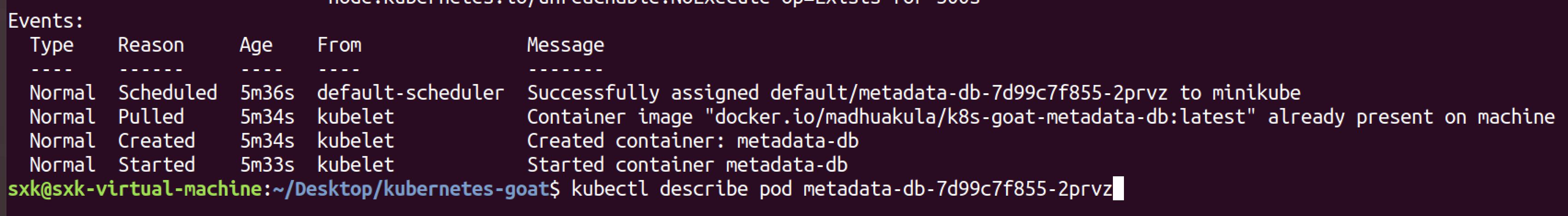

kubectl describe pod metadata-db-7d99c7f855-2prvz # the pod details confirm that the local image is being used
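New chart values take effect only when Helm installs or upgrades a release. A single helm upgrade --install therefore installs metadata-db on a fresh cluster and re-applies the --set values to an existing release. This avoids the "cannot re-use a name" error when the setup script is re-run. Below is a sketch of that call as a function. The name deploy_metadata_db and the HELM dry-run override are hypothetical, and the --set keys are the ones used above.

```bash
#!/usr/bin/env bash
# Install the metadata-db chart, or upgrade it in place if the release already
# exists, so that re-running the setup script does not hit "cannot re-use a name".
# The --set keys mirror the ones used above and assume the chart reads
# image.repository / image.tag / image.pullPolicy from its values.
# HELM defaults to the helm CLI and can be overridden (e.g. HELM=echo) for a dry run.
deploy_metadata_db() {
  local policy="${1:-IfNotPresent}"
  "${HELM:-helm}" upgrade --install metadata-db scenarios/metadata-db/ \
    --set image.repository=docker.io/madhuakula/k8s-goat-metadata-db \
    --set image.tag=latest \
    --set image.pullPolicy="$policy"
}

# Example: deploy_metadata_db Never
```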

3.4.8 Exposing the services

Access test:

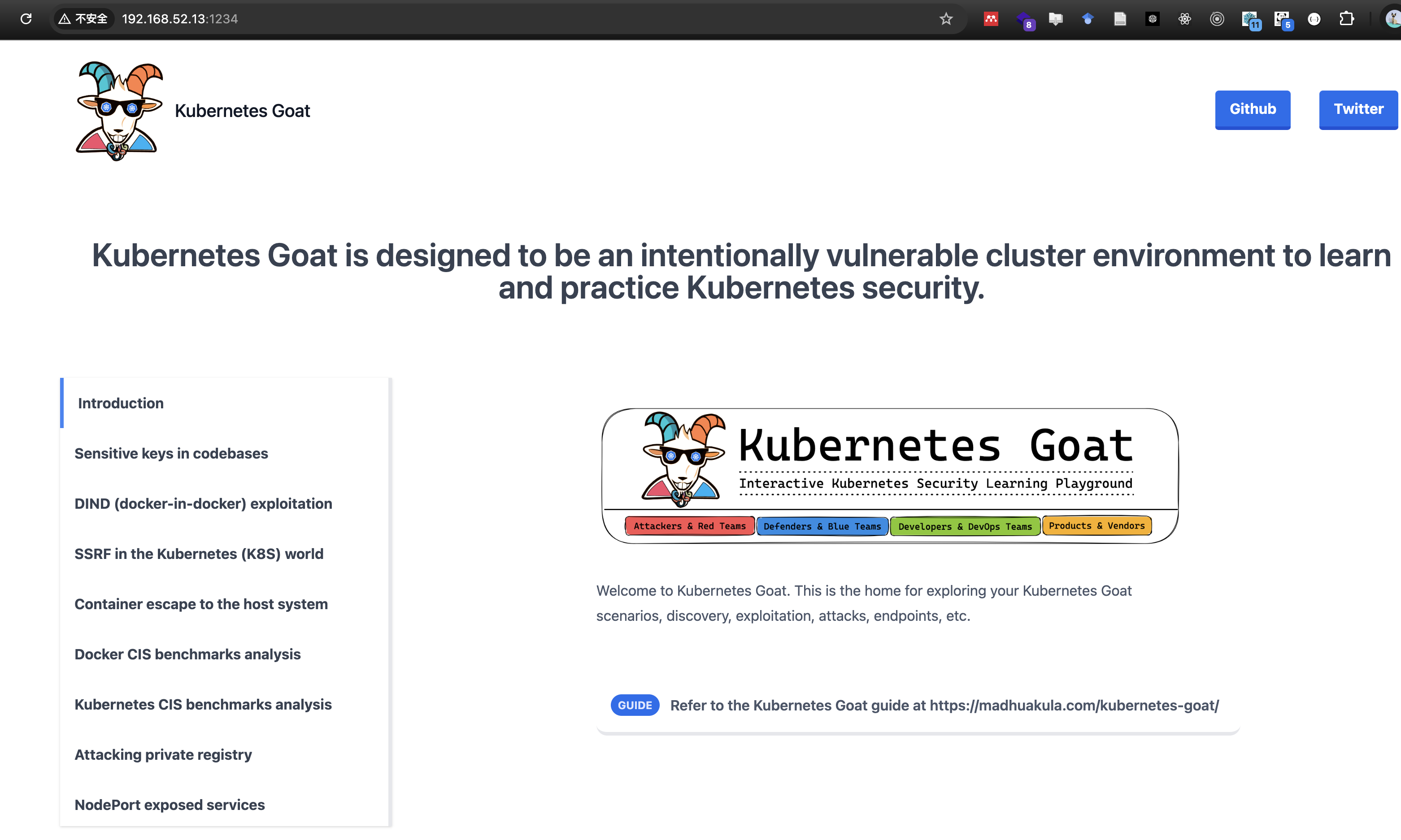

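The Kubernetes Goat range is up and running!

4. Building the KubeGoat range with metarget [✅]

The range built with minikube often failed when reproducing individual scenarios, such as DIND (docker-in-docker) exploitation and SSRF in the Kubernetes (K8S) world. Following the official documentation, two of the first three scenarios failed to reproduce. minikube is also not the installation method that Goat officially recommends, so it seemed worth exploring an installation closer to the underlying system. Before this step, the minikube-based range was saved as a VM snapshot.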

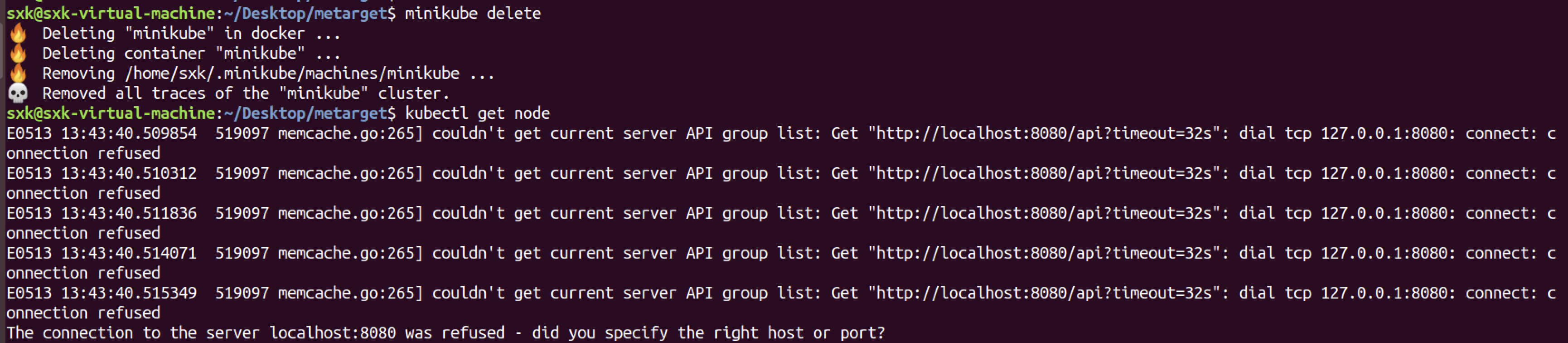

4.1 Deleting minikube

minikube delete

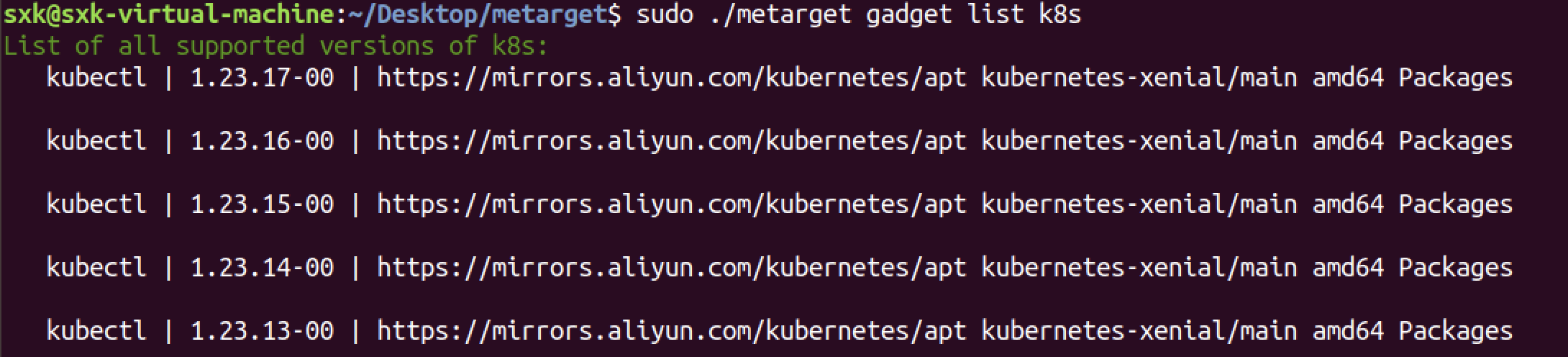

4.2 查看metarget支持的k8s版本

sudo ./metarget gadget list k8s

这里选择安装1.23.15。

4.3 安装指定版本的k8s

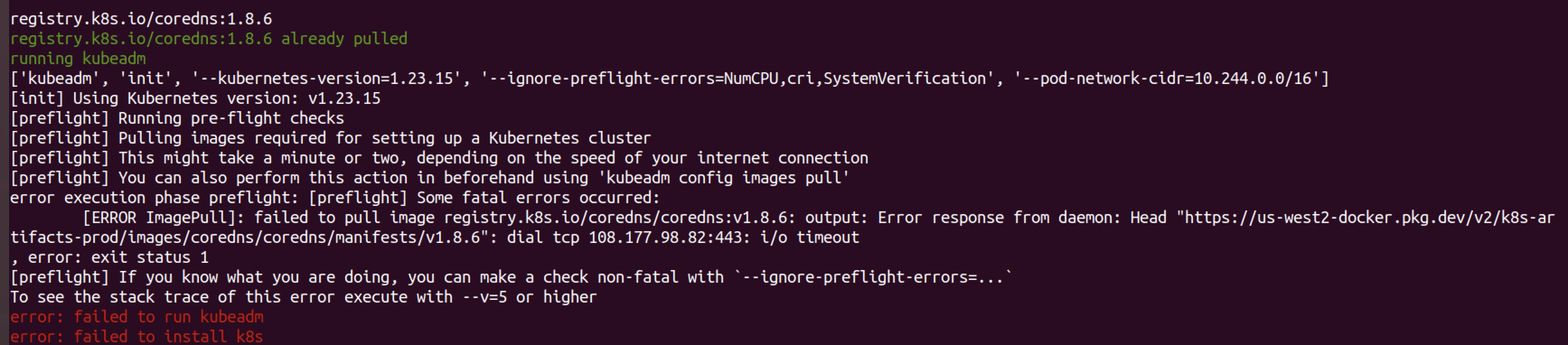

sudo ./metarget gadget install k8s --version=1.23.15 --verbose --domestic报错

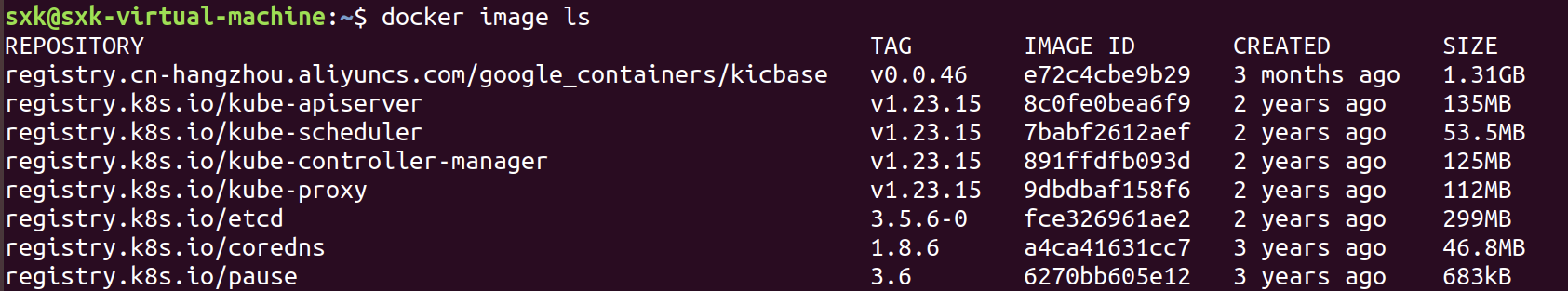

['kubeadm', 'init', '--kubernetes-version=1.23.15', '--ignore-preflight-errors=NumCPU,cri,SystemVerification', '--pod-network-cidr=10.244.0.0/16'] [init] Using Kubernetes version: v1.23.15 [preflight] Running pre-flight checks [preflight] Pulling images required for setting up a Kubernetes cluster [preflight] This might take a minute or two, depending on the speed of your internet connection [preflight] You can also perform this action in beforehand using 'kubeadm config images pull' error execution phase preflight: [preflight] Some fatal errors occurred: [ERROR ImagePull]: failed to pull image registry.k8s.io/coredns/coredns:v1.8.6: output: Error response from daemon: Head "https://us-west2-docker.pkg.dev/v2/k8s-artifacts-prod/images/coredns/coredns/manifests/v1.8.6": dial tcp 108.177.98.82:443: i/o timeout , error: exit status 1 [preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...` To see the stack trace of this error execute with --v=5 or higher error: failed to run kubeadm error: failed to install k8s

但是docker image ls看到coredns的镜像存在。

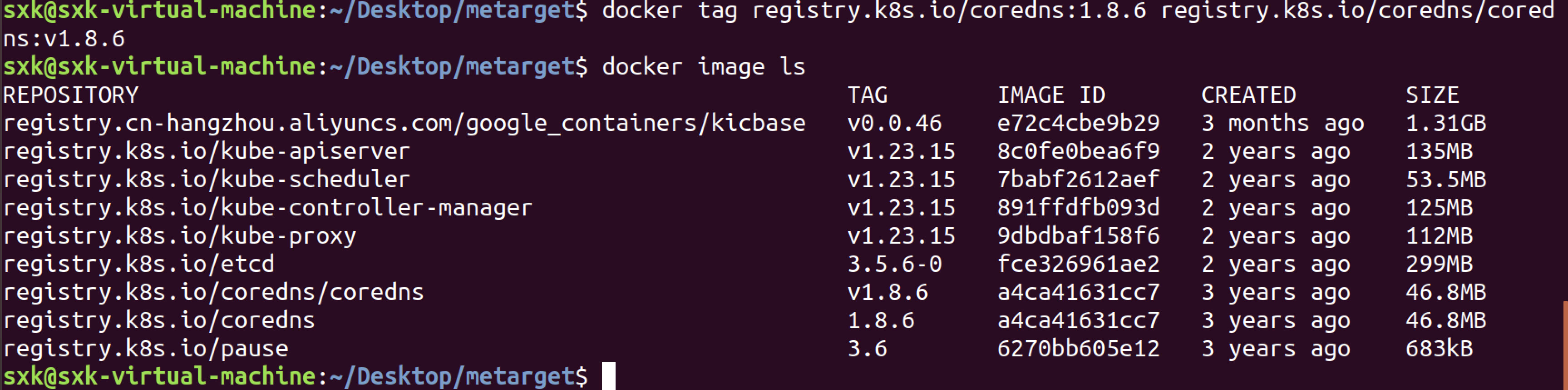

CoreDNS 镜像的路径是 registry.k8s.io/coredns:1.8.6,而 kubeadm 默认要求的是 registry.k8s.io/coredns/coredns:v1.8.6,重新打标签:

docker tag registry.k8s.io/coredns:1.8.6 registry.k8s.io/coredns/coredns:v1.8.6
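kubeadm config images list --kubernetes-version v1.23.15 prints the exact image names that kubeadm expects for that version, which makes a naming mismatch like this one easy to spot. Below is a sketch of the rename as a function. coredns_kubeadm_ref is a hypothetical helper that assumes the <registry>/coredns:<version> form seen here.

```bash
#!/usr/bin/env bash
# Rewrite a CoreDNS reference of the form <registry>/coredns:<version> into the
# <registry>/coredns/coredns:v<version> form that kubeadm 1.23 asks for.
# References already in the nested form are returned unchanged.
coredns_kubeadm_ref() {
  local repo="${1%:*}" tag="${1##*:}"
  case "$tag" in v*) ;; *) tag="v$tag" ;; esac
  case "$repo" in */coredns/coredns) ;; *) repo="$repo/coredns" ;; esac
  printf '%s:%s\n' "$repo" "$tag"
}

# Example:
#   kubeadm config images list --kubernetes-version v1.23.15   # names kubeadm expects
#   src=registry.k8s.io/coredns:1.8.6
#   docker tag "$src" "$(coredns_kubeadm_ref "$src")"
```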

Then run the install command again.

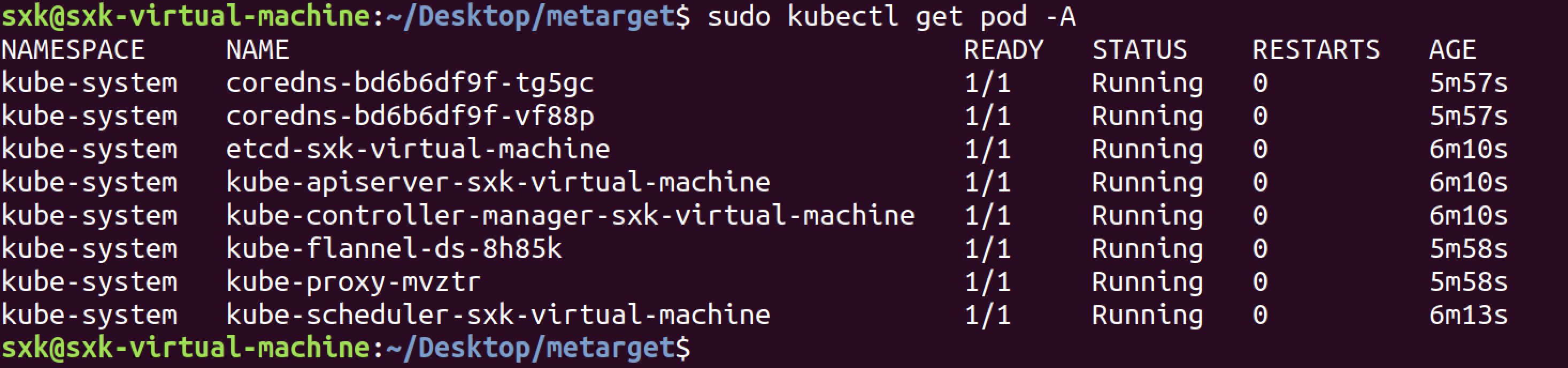

Check the current pods:

sxk@sxk-virtual-machine:~/Desktop/metarget$ sudo kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-bd6b6df9f-tg5gc 0/1 Pending 0 107s

kube-system coredns-bd6b6df9f-vf88p 0/1 Pending 0 107s

kube-system etcd-sxk-virtual-machine 1/1 Running 0 2m

kube-system kube-apiserver-sxk-virtual-machine 1/1 Running 0 2m

kube-system kube-controller-manager-sxk-virtual-machine 1/1 Running 0 2m

kube-system kube-flannel-ds-8h85k 0/1 Init:0/1 0 108s

kube-system kube-proxy-mvztr 1/1 Running 0 108s

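kube-system kube-scheduler-sxk-virtual-machine 1/1 Running 0 2m3s

According to this output, the cluster's core components have started, but the CoreDNS and Flannel network plugin pods are not running properly.

Problem analysis

- CoreDNS is stuck in Pending
  - Root cause: the pod network is not ready because Flannel has not been deployed successfully. Until a CNI plugin is running, the node stays NotReady, so CoreDNS cannot be scheduled or given a pod IP.
  - Symptom: the components that depend on the pod network (CoreDNS, Flannel) are stuck initializing.
- Flannel is stuck in Init:0/1
  - Possible causes:
    - The Flannel image failed to pull (network problem or wrong image path).
    - The node firewall blocks ports that Flannel needs (such as UDP 8472 for VXLAN).
    - The CNI configuration file has not been generated (the /etc/cni/net.d/ directory is empty).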
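You can check the third cause directly on the node. The sketch below assumes the default CNI configuration directory /etc/cni/net.d. cni_config_present is a hypothetical helper. The commented checks assume Ubuntu's ufw firewall and use the Flannel pod name from the output above.

```bash
#!/usr/bin/env bash
# Succeed if DIR (default /etc/cni/net.d) holds at least one CNI config file
# (*.conf or *.conflist). An empty directory means the CNI plugin, here Flannel,
# has not written its configuration yet.
cni_config_present() {
  local dir="${1:-/etc/cni/net.d}" f
  for f in "$dir"/*.conf "$dir"/*.conflist; do
    [ -e "$f" ] && return 0
  done
  return 1
}

# Example checks on the node:
#   cni_config_present || echo "no CNI config in /etc/cni/net.d yet"
#   kubectl -n kube-system describe pod kube-flannel-ds-8h85k   # init container events
#   sudo ufw status                                             # is the firewall active?
```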

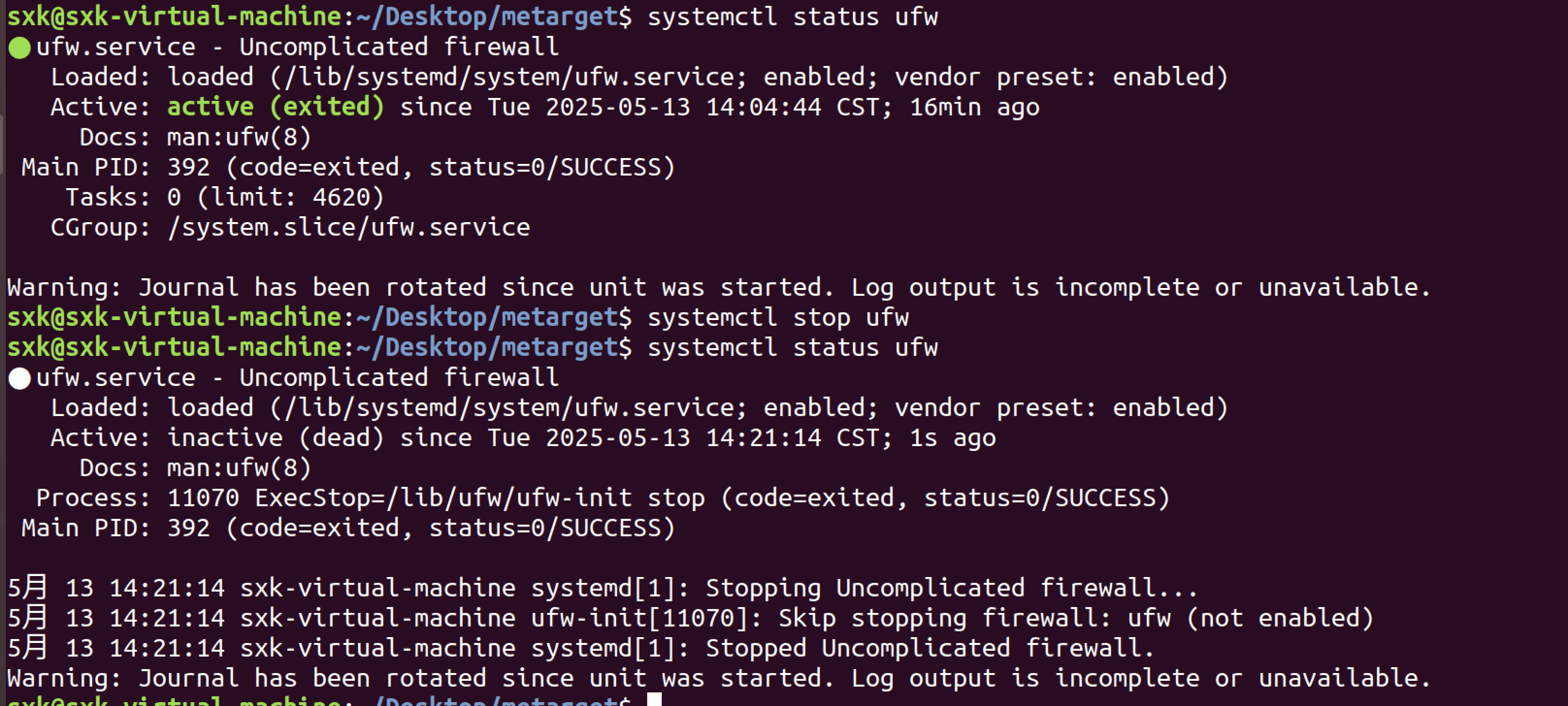

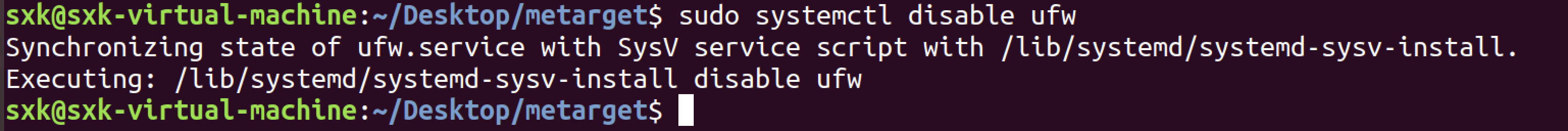

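Disable the firewall completely:

Checking the pod status again, all pods are now running.

4.4 Installing Goat

4.4.1 Loading the local Goat images

All the images that were missing had already been saved on this machine during the earlier minikube-based installation of Goat.

docker load -i <path-to-image-tar>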
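Before running the setup script on the new cluster, it is worth confirming that every image actually loaded. The sketch below compares the expected images with the output of docker image ls. normalize_image and missing_images are hypothetical helpers. The check assumes the cluster uses Docker as its container runtime, as the docker image ls check in 4.3 suggests.

```bash
#!/usr/bin/env bash
# Normalize an image reference so that "docker.io/x/y:latest", "x/y:latest" and
# "x/y" all compare equal: drop a leading docker.io/ and default the tag to latest.
normalize_image() {
  local ref="${1#docker.io/}"
  case "${ref##*/}" in
    *:*) ;;
    *) ref="$ref:latest" ;;
  esac
  printf '%s\n' "$ref"
}

# Print the expected images (arguments) that are absent from the image list on stdin
# (one reference per line).
missing_images() {
  local present img
  present=$(mktemp) || return 1
  while IFS= read -r img; do
    [ -n "$img" ] && normalize_image "$img"
  done | LC_ALL=C sort -u > "$present"
  for img in "$@"; do
    normalize_image "$img"
  done | LC_ALL=C sort -u | LC_ALL=C comm -23 - "$present"
  rm -f "$present"
}

# Example (add the rest of the image list from 3.4.4 as further arguments):
#   docker image ls --format '{{.Repository}}:{{.Tag}}' |
#     missing_images madhuakula/k8s-goat-batch-check madhuakula/k8s-goat-home
```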

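4.4.2 Installation

bash setup-kubernetes-goat.sh

⚠️: this setup-kubernetes-goat.sh was already modified while building the range with minikube, so reuse it as is here.

This runs into a permissions problem, so run it with sudo:

sudo bash setup-kubernetes-goat.sh

The installation succeeds, apart from the metadata-db Helm error in the output below, which reports that a metadata-db Deployment already exists.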

```
sxk@sxk-virtual-machine:~/Desktop/kubernetes-goat$ sudo bash setup-kubernetes-goat.sh
kubectl setup looks good.
deploying insecure super admin scenario
serviceaccount/superadmin created
clusterrolebinding.rbac.authorization.k8s.io/superadmin created
deploying helm chart metadata-db scenario
Error: INSTALLATION FAILED: 1 error occurred:
* deployments.apps "metadata-db" already exists
deploying the vulnerable scenarios manifests
job.batch/batch-check-job created
deployment.apps/build-code-deployment created
service/build-code-service created
namespace/secure-middleware created
service/cache-store-service created
deployment.apps/cache-store-deployment created
deployment.apps/health-check-deployment created
service/health-check-service created
namespace/big-monolith created
role.rbac.authorization.k8s.io/secret-reader created
rolebinding.rbac.authorization.k8s.io/secret-reader-binding created
serviceaccount/big-monolith-sa created
secret/vaultapikey created
secret/webhookapikey created
deployment.apps/hunger-check-deployment created
service/hunger-check-service created
deployment.apps/internal-proxy-deployment created
service/internal-proxy-api-service created
service/internal-proxy-info-app-service created
deployment.apps/kubernetes-goat-home-deployment created
service/kubernetes-goat-home-service created
deployment.apps/poor-registry-deployment created
service/poor-registry-service created
secret/goatvault created
deployment.apps/system-monitor-deployment created
service/system-monitor-service created
job.batch/hidden-in-layers created
Successfully deployed Kubernetes Goat. Have fun learning Kubernetes Security!
Ensure pods are in running status before running access-kubernetes-goat.sh script
Now run the bash access-kubernetes-goat.sh to access the Kubernetes Goat environment.
```
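The script's own reminder to wait for Running Pods can be automated. A sketch, assuming the Goat workloads of interest live in the default namespace and that polling every 10 seconds is acceptable; pods_all_ready and wait_for_goat_pods are hypothetical helpers. Pods of finished Jobs (such as batch-check-job and hidden-in-layers) count as done once they show Completed.

```shell
#!/usr/bin/env bash
# Exit 0 only if every Pod line on stdin is Running with all containers ready,
# or is a finished Job Pod (Completed). Expects `kubectl get pods --no-headers`
# output without -A, i.e. NAME READY STATUS RESTARTS AGE.
pods_all_ready() {
  awk '
    { n++ }
    $3 == "Completed" { next }
    { split($2, ready, "/"); if (ready[1] != ready[2] || $3 != "Running") bad = 1 }
    END { exit (bad || n == 0) }   # no Pods at all also counts as "not ready"
  '
}

# Poll the default namespace every 10 s until all Pods are ready,
# giving up after the given number of seconds (default 600).
wait_for_goat_pods() {
  local timeout="${1:-600}" waited=0
  until sudo kubectl get pods --no-headers 2>/dev/null | pods_all_ready; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "Pods still not ready after ${timeout}s" >&2
      return 1
    fi
    sleep 10
    waited=$((waited + 10))
  done
}
```

For example, `wait_for_goat_pods 600 && sudo bash access-kubernetes-goat.sh` only starts the access script once the Pods are up. The secure-middleware and big-monolith namespaces created above are not covered; adding `-n` with those namespace names to the kubectl call would watch them too.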

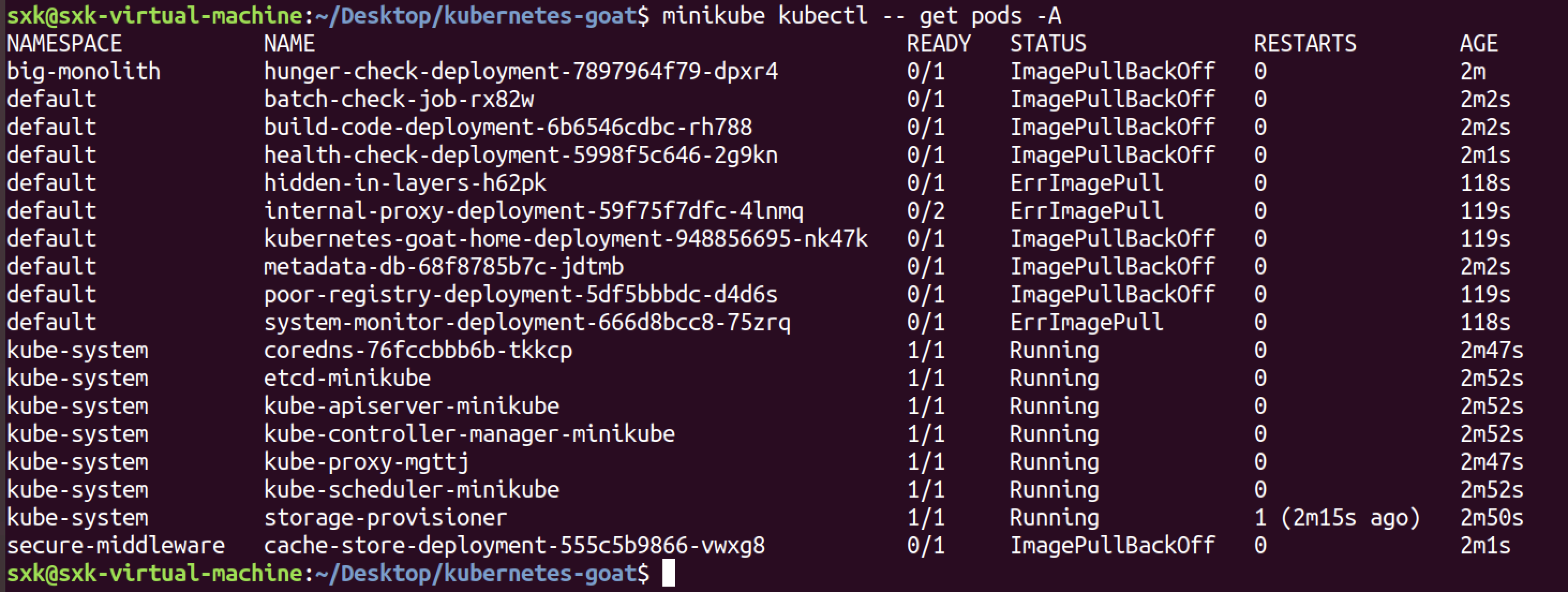

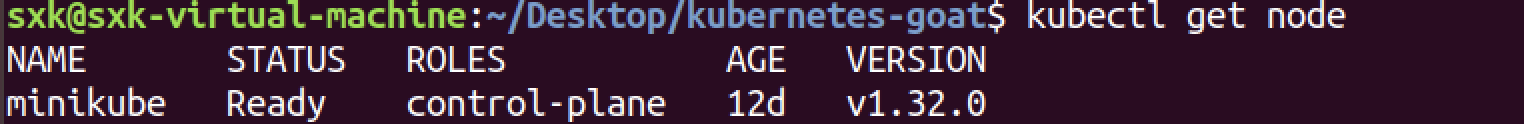

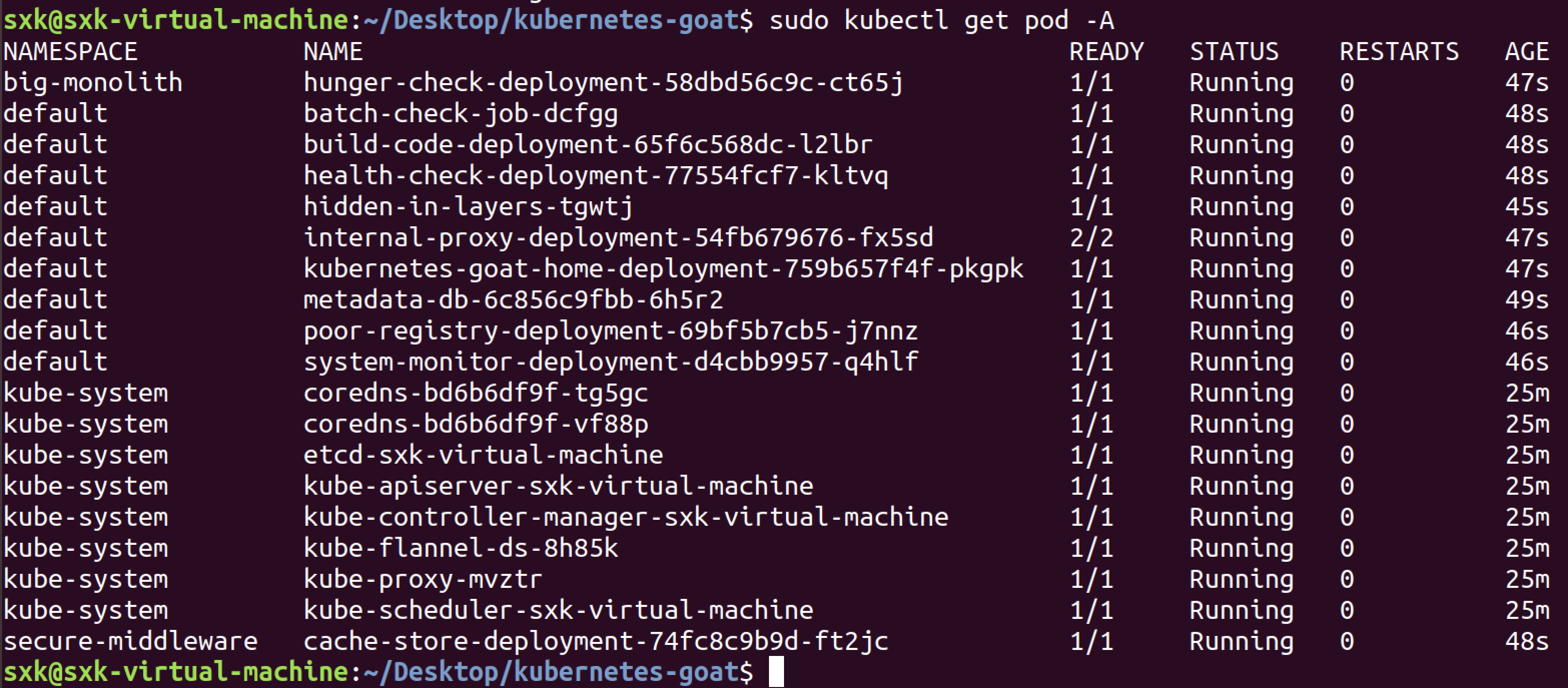

Check the nodes:

4.4.3 Expose the services

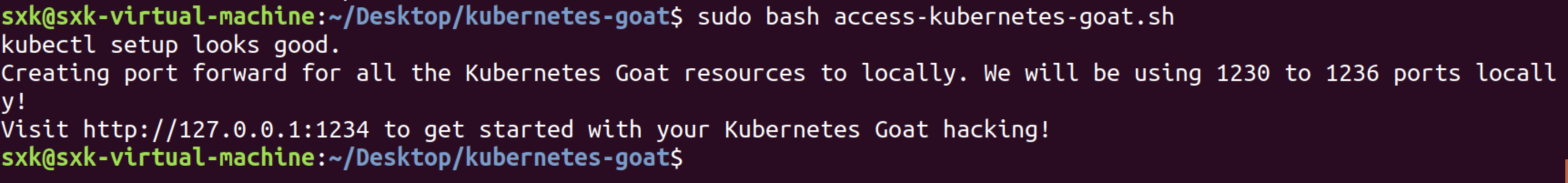

sudo bash access-kubernetes-goat.sh

4.5 Access test

The range is up and running ✅!
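A quick way to confirm that the forwarded services answer is to probe each local port with curl. This sketch assumes access-kubernetes-goat.sh forwards the scenarios to ports 1230 to 1236 on the local machine (the KubeGoat documentation points to http://127.0.0.1:1234 for the home page); check the ports in your copy of the script before relying on them. probe_goat_ports is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Probe each forwarded Kubernetes Goat port and print "PORT HTTP_STATUS".
# ASSUMPTION: the access script forwards to ports 1230-1236;
# pass explicit ports after the host to override that list.
# A status of 000 means curl got no HTTP response (nothing listening, timeout).
probe_goat_ports() {
  local host="${1:-127.0.0.1}" port code
  [ "$#" -gt 0 ] && shift
  [ "$#" -gt 0 ] || set -- 1230 1231 1232 1233 1234 1235 1236
  for port in "$@"; do
    code=$(curl -s -o /dev/null -m 5 -w '%{http_code}' "http://$host:$port/") || true
    echo "$port ${code:-000}"
  done
}
```

Running `probe_goat_ports` after the access script prints one line per port; a 000 on a port usually means its port-forward is not running.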

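References

https://gugesay.com/archives/536 【Cloud-Native Security Study Notes】

https://zone.huoxian.cn/d/1153-k8s 【A Brief Analysis of Unauthorized-Access Attacks on K8S】

https://github.com/neargle/my-re0-k8s-security 【Kubernetes Attack and Defense from Scratch】

https://blog.csdn.net/C3245073527/article/details/140278749 【Building a k8s Cluster from Scratch with kubeadm on VMware VMs, Every Pitfall Included】

https://www.freebuf.com/articles/container/389506.html 【An Easy-to-Follow, Complete, Hands-On K8S Attack-and-Defense Range Exercise】

https://cloud.tencent.com/developer/article/2160045 【Cloud-Native Security Series (1) | Building a Kubernetes Cloud-Native Range】

https://dockerworld.cn/?id=384 【The Most Complete Guide to Kubernetes Architecture (8 Major Components)】

https://cloud.tencent.com/developer/article/1987405 【Reproducing the Kubernetes CRI-O Engine Escape CVE-2022-0811】

https://blog.csdn.net/weixin_65176861/article/details/136404271 【Learning Cybersecurity from Scratch: Installing the Metarget Cloud-Native Attack-and-Defense Range】

https://blog.nsfocus.net/metarget/ 【Metarget: The Cloud-Native Attack-and-Defense Range Is Now Open Source!】

https://github.com/Metarget/metarget/blob/master/README-zh.md 【Metarget Project Documentation】

https://blog.csdn.net/ylfmsn/article/details/129894101 【How to Install Docker on Ubuntu 18.04】

https://mp.weixin.qq.com/s/H2Z95FBsJnXZUsbgmt7Wbw 【[Cloud Security] k8s Vulnerability Collection | k8s Security Attack and Defense】

https://mp.weixin.qq.com/s/qzxjZyXO3hCADK1MKtRZOg 【Deploying the KubeGoat K8S Range】

https://blog.csdn.net/thinkthewill/article/details/117655879 【minikube start Fails on Servers in China: Two Days to Get It Working】

https://xz.aliyun.com/news/12367 【K8s Cluster Security Attack and Defense (Part 1)】

https://xz.aliyun.com/news/12376 【K8s Cluster Security Attack and Defense (Part 2)】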

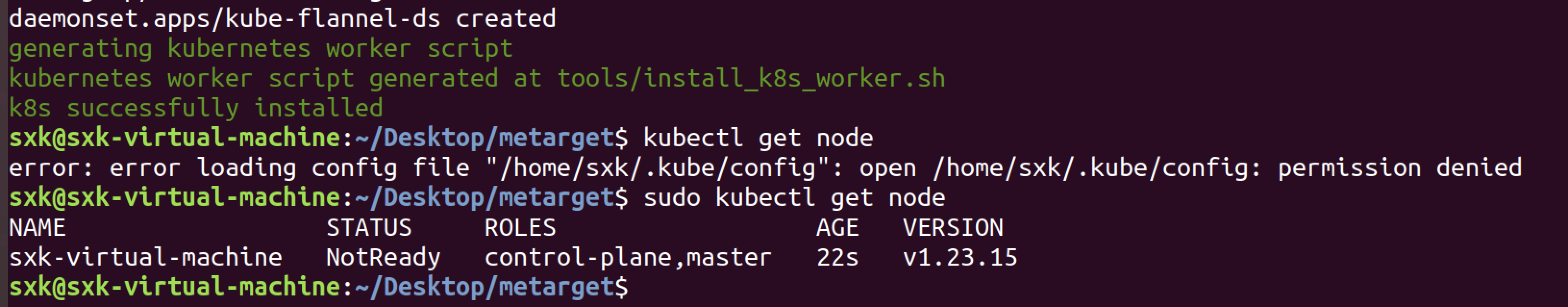

https://madhuakula.com/kubernetes-goat/docs/ 【KubeGoat Official Documentation】